No great technology ideas or insights this month – just a brief message to tell you that we’ve re-designed our ICT publishing portfolio under the Digitalisation World title. We’ve launched a new-look website, where blogs, podcasts (DigiTalk) and video content will be very much to the fore. We’ve re-styled our weekly newsletters, under the name of Digital Digests, so that each week subscribers will receive more of a mini-magazine – with articles, video and audio content alongside the more traditional text-based stories. And the DW magazine is starting to evolve along similar lines, so while articles still dominate at the moment, we’ll be making increasing use of podcasts and video content over the coming months.

As with most industry sectors, nobody quite knows how or when traditional ideas and technologies will be replaced by the digital world, but it makes sense to start to move towards this objective sooner rather than later. IP Expo was our first foray into the video interview arena, and it seemed to work well. The results will be appearing on the website and in the newsletters/magazines over time, with more planned in the coming weeks.

Do let us know if you have enjoyed our new-look publications, and whether podcasts and videos are easier to digest than text-based content.

In the meantime, please do enjoy this issue of Digitalisation World, and I hope that you find the extensive blockchain coverage helpful in terms of understanding the potential it does (or doesn’t) offer your organisation.

Philip Alsop

Global spending on cognitive and artificial intelligence (AI) systems is forecast to continue its trajectory of robust growth as businesses invest in projects that utilize cognitive/AI software capabilities. According to a new update to the International Data Corporation (IDC) Worldwide Semiannual Cognitive Artificial Intelligence Systems Spending Guide, spending on cognitive and AI systems will reach $77.6 billion in 2022, more than three times the $24.0 billion forecast for 2018. The compound annual growth rate (CAGR) for the 2017-2022 forecast period will be 37.3%.
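Headline growth figures like these follow the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. As a rough illustration (our own sketch, not IDC's methodology; the `cagr` function name is hypothetical), the Python below applies it to the two values the release does give: $24.0 billion in 2018 and $77.6 billion in 2022. Over those four years the implied rate comes out at roughly 34.1%. The difference from the quoted 37.3% reflects the different period: IDC's rate runs from a 2017 base that the release does not state.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate that turns
    start_value into end_value over the given number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # IDC forecast figures quoted in the text, in US$ billions.
    spend_2018, spend_2022 = 24.0, 77.6
    print(f"2022 vs 2018: {spend_2022 / spend_2018:.2f}x")
    print(f"Implied 2018-2022 CAGR: {cagr(spend_2018, spend_2022, 4):.1%}")
```

Running it prints a ratio of 3.23x (the "more than three times" in the text) and an implied four-year CAGR of 34.1%.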

"The market for AI continues to grow at a rapid pace," said David Schubmehl, research director, Cognitive/Artificial Intelligence Systems at IDC. "Vendors looking to take advantage of AI, deep learning and machine learning need to move quickly to gain a foothold in this emergent market. IDC is already seeing that organizations using these technologies to drive innovation are benefitting in terms of revenue, profit, and overall leadership in their respective industries and segments."

Software will be both the largest and fastest growing technology category throughout the forecast, representing around 40% of all cognitive/AI spending with a five-year CAGR of 43.1%. Two areas of focus for these investments are conversational AI applications (e.g., personal assistants and chatbots) and deep learning and machine learning applications (employed in a wide range of use cases). Hardware (servers and storage) will be the second largest area of spending until late in the forecast, when it will be overtaken by spending on related IT and business services. Both categories will experience strong growth over the forecast (30.6% and 36.4% CAGRs, respectively) despite growing slower than the overall market.

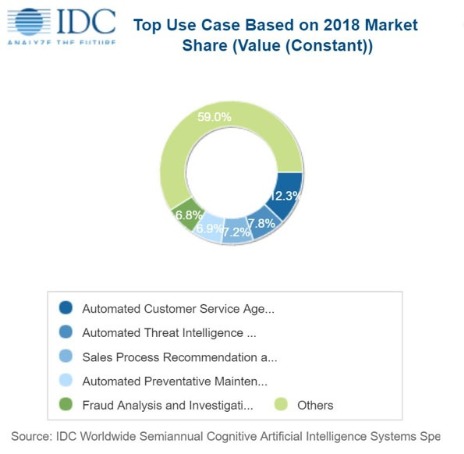

The cognitive/AI use cases that will see the largest spending totals in 2018 are automated customer service agents ($2.9 billion), automated threat intelligence and prevention systems ($1.9 billion), sales process recommendation and automation ($1.7 billion) and automated preventive maintenance ($1.7 billion). The use cases that will see the fastest investment growth over the 2017-2022 forecast are pharmaceutical research and discovery (46.8% CAGR), expert shopping advisors & product recommendations (46.5% CAGR), digital assistants for enterprise knowledge workers (45.1% CAGR), and intelligent processing automation (43.6% CAGR).

"Worldwide Cognitive/Artificial Intelligence Systems spend has moved beyond the early adopters to mainstream industry-wide use case implementation," said Marianne Daquila, research manager Customer Insights & Analysis at IDC. "Early adopters in banking, retail and manufacturing have successfully leveraged cognitive/AI systems as part of their digital transformation strategies. These strategies have helped companies personalize their relationship with customers, thwart fraudulent losses, and keep factories running. Increasingly, we are seeing more local governments keeping people safe with cognitive/AI systems. There is no doubt that the predicted double-digit year-over-year growth will be driven by even more decision makers, across all industries, who do not want to be left behind."

Banking and retail will be the two industries making the largest investments in cognitive/AI systems in 2018 with each industry expected to spend more than $4.0 billion this year. Banking will devote more than half of its spending to automated threat intelligence and prevention systems and fraud analysis and investigation while retail will focus on automated customer service agents and expert shopping advisors & product recommendations. Beyond banking and retail, discrete manufacturing, healthcare providers, and process manufacturing will also make considerable investments in cognitive/AI systems this year. The industries that are expected to experience the fastest growth on cognitive/AI spending are personal and consumer services (44.5% CAGR) and federal/central government (43.5% CAGR). Retail will move into the top position by the end of the forecast with a five-year CAGR of 40.7%.

On a geographic basis, the United States will deliver more than 60% of all spending on cognitive/AI systems throughout the forecast, led by the retail and banking industries. Western Europe will be the second largest region, led by banking and retail. China will be the third largest region for cognitive/AI spending with several industries, including state/local government, vying for the top position. The strongest spending growth over the five-year forecast will be in Japan (62.4% CAGR) and Asia/Pacific (excluding Japan and China) (52.3% CAGR). China will also experience strong spending growth throughout the forecast (43.8% CAGR).

Capita and Citrix survey reveals cost, security concerns, and legacy technology as biggest barriers to workspace agility.

The vast majority (84%) of organisations believe an inability to quickly roll-out new services and applications to their workforce is impacting their ability to stay ahead of the competition, according to new research from Capita and Citrix. The survey of 200 CIOs and senior IT decision makers reveals that cost (48%), security and compliance concerns (47%), and legacy technology (44%) are the biggest barriers to creating the agile workspaces that today's organisations strive for.

The overwhelming majority (93%) of organisations say that younger employees are driving demand for more flexible technology and ways of working. Furthermore, 91% believed the IT user experience was important in attracting and retaining new talent, with 55% of mid-size organisations stating it was 'very important', underlining the value of retaining talented employees for smaller enterprises. However, despite demand from employees, 88% of organisations also admitted that dated capex budgeting models were making it more difficult for them to create agile workspaces.

"The digital revolution has had a substantial impact on how we work and what our expectations of our working environments are. As organisations look to move away from a traditional desktop IT environment to a more flexible one that caters for mobile, remote, and more digital-savvy employees, the appeal of an agile workspace has grown," said James Bunce, Director, Capita IT Services. "The nirvana for many organisations is creating an agile workspace that puts users first, giving them everything they need to work productively in one single place, from applications, to shared data and documents, to self-service support. However, stitching these facets together is not an easy task, particularly as IT environments have grown in complexity. As the research shows, an agile workspace is not just a luxury for organisations but is fundamental to their success and ability to compete."

Bring your own device or bring your own problem?

As organisations look to create a more user-centric workspace, increasing numbers are turning toward a 'Bring Your Own Device' (BYOD) approach:

On average, respondents to the survey think the number of IT support requests due to remote and mobile working has increased by a quarter (25%). Further, as 'shadow IT' has continued its rise, 83% of organisations admitted it is a challenge to keep their remote and mobile working policy up to date.

Is help at hand?

A key factor in user-centricity is assessing how employees feel about their working environment. The research reveals that more than three-quarters (79%) of organisations are currently measuring the IT user experience. On average, organisations measure the IT user experience six times a year, but more than a quarter (28%) say they are only monitoring it once or twice a year.

In recent years, the ability to 'self-serve' has played a central role in improving the user experience, and IT support is no exception.

However, the survey reveals there is still work to be done in ensuring that self-service technology improves the user experience, as the majority of organisations (83%) say they still typically find out about users' experience of IT through calls to the helpdesk.

"The findings show that while many organisations have adopted BYOD, the full benefits are yet to be realised, as security and support concerns remain. A reluctance to give staff access to their preferred technologies holds back enterprises from becoming truly user-centric; therefore, organisations must seek out solutions that enable BYOD in a secure manner while minimising the support burden," added Bunce. "A truly agile workspace should allow IT teams to continually monitor user experience, yet for many it remains a reactive rather than a proactive function, with the onus on users to report issues. Without tools in place to monitor environments in real-time and enable IT teams to rectify issues more quickly, organisations risk creating a dispersed workforce of unhappy employees."

The legacy problem

From a technology perspective, the majority of organisations (87%) say legacy applications are slowing their journey to creating an agile workspace, with the cost of re-architecting or transforming applications (68%), disruption to the user experience (43%), and a lack of in-house skills to modernise applications (36%) cited as the main causes.

Evolving alongside this application challenge has been the shift towards cloud computing, with organisations looking to Software-as-a-Service (SaaS) applications to increase workspace agility. Yet only a quarter (25%) of organisations think SaaS applications meet their requirements, with this figure dropping to 17% in mid-size organisations.

"An agile workspace is one in which employees can access all the applications and data they need, empowering them to collaborate with colleagues over any connection. This is easier said than done for most organisations because migrating from legacy remains a challenge," said Justin Sutton-Parker, Director, Partners, Northern Europe at Citrix. "Ultimately, businesses are looking for cost-conscious, scalable and flexible solutions to enable productivity and deliver efficiency, often combined with a cloud-first approach to help tackle legacy issues and embrace the digital workspace."

Calls for international regulation and upskilling increase.

Leading figures from business, industry, academia and policy have stressed the urgent need for greater global collaboration and transparency, as international governments and institutions adapt to the proliferation of AI technologies across all sectors.

Gathering in London for The Economist Events Innovation Summit 2018, industry leaders discussed the need for ethical, political and economic regulation as AI continues to shape our society and the everyday lives of millions of people. With AI facing challenges in public trust and fears over employment security, coupled with current global issues in disease, inequality, climate change and political instability, speakers universally advocated responsible AI advancement.

During the keynote panel discussion on AI's impact on the employment landscape, Heath P. Terry, MD of Goldman Sachs, stated, "We need to reassure the public that AI is not going to replace humans, but work in tandem with us and handle the mundane parts of our jobs. It is a sort of advisor that sits alongside us, freeing us up to perform more difficult tasks."

The following panel went on to discuss the implications of AI and humans working together in this way, with the technology becoming ever more sophisticated. Morag Watson, Vice President and Chief Digital Innovation Officer at BP, explained, "AI will revolutionise the future employment market, creating jobs we won't recognise and can't even conceive of today. This new breed of job risks creating its own form of skills shortage, with employees requiring retraining and upskilling."

Other key themes explored throughout the day included the risks and ethics of AI development, with speakers recognising the potential for AI to be exploited by bad actors and to perpetuate society's existing biases.

Allan Dafoe, Director of GovAI, Future of Humanity Institute at the University of Oxford, explained the enormous opportunity we are now being presented with: "You can't trace decisions made by a human brain. But with AI, you can audit every single line of code, offering an opportunity to identify and remove bias by following and understanding the entire decision-making process."

Jo Swinson, MP for East Dunbartonshire and Deputy Leader of the Liberal Democrats, suggested tangible action to commit the industry to ethical standards: "We should consider a form of Hippocratic Oath for those working in AI, a 'Lovelace Oath', outlining a set of ethics to abide by, which will become an integral part of being a programmer or data scientist. Although we subcontract our judgement to AI, it's still just lines of code, and we need to maintain a level of human accountability for the decisions a machine might make."

Concluding speaker Demis Hassabis, co-founder and chief executive of DeepMind, spoke openly about his global vision for AI: "I'd be pessimistic about the world if something like AI were not coming down the road. We're not making enough progress on the major challenges facing society - whether that's eradicating disease or poverty or fighting for greater equality - so how will we address them? Either through an exponential improvement in human behaviour, or an exponential improvement in technology. Looking around at politics and recent events, it seems that exponential improvement in human behaviour is unlikely. So, we're looking for a quantum leap in technology like AI."

One of the moderators, Vijay Vaitheeswaran, US Business Editor of The Economist, summarised the summit's general consensus:

"The connection between the global challenges we face such as water and food shortages and AI means that we now have new powerful tools to solve these problems. The history of innovation has never taken place in a straight line but the idea that AI can be applied diligently and with purpose to tackle intractable problems should give us all hope for the future."

Companies in Europe are enhancing always-on, omnichannel customer service as more and more consumers embrace AI-driven experiences.

Companies across Europe are deploying artificial intelligence (AI) technologies to revolutionise customer service as more and more consumers show high acceptance of AI-driven experiences, reveals a new research report from ServiceNow and Devoteam.

The report, "The AI revolution: creating a new customer service paradigm", explores how AI is driving a new revolution in service delivery, drawing on research carried out with 770 IT professionals responsible for the customer service function in 10 European countries.

It reveals that nearly a third (30%) of European organisations have introduced AI technologies to customer service, and 72% of those are already seeing benefits that include freeing up agents' time, more efficient processing of high-volume tasks and providing always-on customer support.

"The majority of organisations are offering omnichannel experiences to customers, but many are struggling to keep up with increasing consumer demand for service across these channels," said Paul Hardy, Chief Innovation Officer EMEA, ServiceNow. "Early adopters are reaping the benefits of using AI technologies to deal with common tasks and requests, freeing agents to shift away from a reactive role to really driving proactive, meaningful engagement."

Customer service teams in Europe struggle to keep pace with customer demand

According to survey respondents, providing service and support 24/7 is their number one customer service challenge. Customers are being offered multiple service channels, but they expect responses at any time of the day and this is pushing organisations to breaking point:

AI will reinvent customer engagement

AI will allow organisations to move beyond handling more queries more efficiently, to anticipating and acting on customer needs:

"We are only at the beginning of the AI-driven customer service revolution," said Debbie Elder, Principal Consultant, Devoteam. "A powerful development is the ability of AI to help transform high-stress moments into positive experiences for customers that build loyalty. For example, in the case of a flight cancellation, AI can detect the customer starting a live chat and indicate it is likely to be due to the cancellation. It can then immediately escalate the interaction to a human agent to arrange an alternative and deliver a superior service."

AI will empower customer service agents

While the adoption of AI will increase, these technologies will only serve to augment the role of the human agent at the front line of delivering 'wow' customer experiences:

"AI technologies will enable our customer service agents to focus on the customer interactions where the human touch is needed the most. This gives them greater job satisfaction, enabling them to focus on VIP customers and high priority enquiries, as well as focus on more strategic contributions within organisations," said Clive Simpson, Head of Service Management, CDL.

Twenty-eight percent of spending within key enterprise IT markets will shift to the cloud by 2022, up from 19 percent in 2018, according to Gartner, Inc. Growth in enterprise IT spending on cloud-based offerings will be faster than growth in traditional, non-cloud IT offerings. Despite this growth, traditional offerings will still constitute 72 percent of the addressable revenue for enterprise IT markets in 2022, according to Gartner forecasts.

“The shift of enterprise IT spending to new, cloud-based alternatives is relentless, although it’s occurring over the course of many years due to the nature of traditional enterprise IT,” said Michael Warrilow, research vice president at Gartner. “Cloud shift highlights the appeal of greater flexibility and agility, which is perceived as a benefit of on-demand capacity and pay-as-you-go pricing in cloud.”

More than $1.3 trillion in IT spending will be directly or indirectly affected by the shift to cloud by 2022, according to Gartner (see Table 1). Providers that are able to capture this growth will drive long-term success through the next decade.

Gartner recommends that technology providers use cloud shift as a measure of market opportunity. They should assess growth rates and addressable market size opportunities in each of the four cloud shift categories: system infrastructure, infrastructure software, application software and business process outsourcing.

Table 1: Cloud Shift Proportion by Category

| Category | 2018 | 2019 | 2020 | 2021 | 2022 |
|---|---|---|---|---|---|
| System infrastructure | 11% | 13% | 16% | 19% | 22% |
| Infrastructure software | 13% | 15% | 17% | 18% | 20% |
| Application software | 34% | 36% | 38% | 39% | 40% |
| Business process outsourcing | 27% | 28% | 29% | 29% | 30% |
| TOTAL | 19% | 21% | 24% | 26% | 28% |

Source: Gartner (August 2018)

The largest cloud shift prior to 2018 occurred in application software, particularly driven by customer relationship management (CRM), according to Gartner. CRM has already reached a tipping point where a higher proportion of spend occurs in cloud than in traditional software. This trend will continue and expand to cover additional application software segments, including office suites, content services and collaboration services, through to the end of 2022. Application software will retain the highest percentage of cloud shift during this period.

By 2022, almost one-half of the addressable revenue will be in system infrastructure and infrastructure software, according to Gartner. System infrastructure will be the market segment that will shift the fastest between now and 2022 as current assets reach renewal status. Moreover, it currently represents the market with the least amount of cloud shift. This is due to prior investments in data center hardware, virtualization and data center operating system software and IT services, which are often considered costly and inflexible.

“The shift to cloud until the end of 2022 represents a critical period for traditional infrastructure providers, as competitors benefit from increasing cloud-driven disruption and spending triggers based on infrastructure asset expiration,” said Mr. Warrilow. “As cloud becomes increasingly mainstream, it will influence even greater portions of enterprise IT decisions, particularly in system infrastructure as increasing tension becomes apparent between on- and off-premises solutions.”

According to the International Data Corporation (IDC) Worldwide Quarterly Cloud IT Infrastructure Tracker, vendor revenue from sales of infrastructure products (server, enterprise storage, and Ethernet switch) for cloud IT, including public and private cloud, grew 48.4% year over year in the second quarter of 2018 (2Q18), reaching $15.4 billion. IDC also raised its forecast for total spending (vendor recognized revenue plus channel revenue) on cloud IT infrastructure in 2018 to $62.2 billion with year-over-year growth of 31.1%.

Quarterly spending on public cloud IT infrastructure has more than doubled in the past three years to $10.9 billion in 2Q18, growing 58.9% year over year. By end of the year, public cloud will account for the majority, 68.2%, of the expected annual cloud IT infrastructure spending, growing at an annual rate of 36.9%. In 2Q18, spending on private cloud infrastructure reached $4.6 billion, an annual increase of 28.2%. IDC estimates that for the full year 2018, private cloud will represent 14.8% of total IT infrastructure spending, growing 20.3% year over year.

The combined public and private cloud revenues accounted for 48.5% of the total worldwide IT infrastructure spending in 2Q18, up from 43.5% a year ago and will account for 46.6% of the total worldwide IT infrastructure spending for the full year. Spending in all technology segments in cloud IT environments is forecast to grow by double digits in 2018. Compute platforms will be the fastest growing at 46.6%, while spending on Ethernet switches and storage platforms will grow 18.0% and 19.2% year over year in 2018, respectively. Investments in all three technologies will increase across all cloud deployment models – public cloud, private cloud off-premises, and private cloud on-premises.

The traditional (non-cloud) IT infrastructure segment grew 21.1% from a year ago, a rate of growth comparable to 1Q18 and exceptional for this market segment, which is expected to decline in the coming years. At $16.4 billion in 2Q18, it still accounted for the majority, 51.5%, of total worldwide IT infrastructure spending. For the full year, worldwide spending on traditional non-cloud IT infrastructure is expected to grow by 10.3% as the market goes through a technology refresh cycle, which will wind down by 2019. By 2022, IDC expects that traditional non-cloud IT infrastructure will only represent 44.0% of total worldwide IT infrastructure spending (down from 51.5% in 2018). This share loss and the growing share of cloud environments in overall spending on IT infrastructure is common across all regions.

"As share of cloud environments in the overall spending on IT infrastructure continues to climb and approaches 50%, it is evident that cloud, which once used to be an emerging sector of the IT infrastructure industry, is now the norm. One of the tasks for enterprises now is not only to decide on what cloud resources to use but, actually, how to manage multiple cloud resources," said Natalya Yezhkova, research director, IT Infrastructure and Platforms at IDC. "End users' ability to utilize multi-cloud resources is an important driver of further proliferation for both public and private cloud environments."

All regions grew their cloud IT Infrastructure revenue by double digits in 2Q18. Asia/Pacific (excluding Japan) (APeJ) grew revenue the fastest, by 78.5% year over year. Within APeJ, China's cloud IT revenue almost doubled year over year, growing at 96.4%, while the rest of Asia/Pacific (excluding Japan and China) grew 50.4%. Other regions among the fastest growing in 2Q18 included Latin America (47.4%), USA (44.9%), and Japan (35.8%).

Top Companies, Worldwide Cloud IT Infrastructure Vendor Revenue, Market Share, and Year-Over-Year Growth, Q2 2018 (revenues in US$ millions)

| Company | 2Q18 Revenue (US$M) | 2Q18 Market Share | 2Q17 Revenue (US$M) | 2Q17 Market Share | 2Q18/2Q17 Revenue Growth |
|---|---|---|---|---|---|
| 1. Dell Inc | $2,386 | 15.5% | $1,433 | 13.8% | 66.4% |
| 2. HPE/New H3C Group\*\* | $1,665 | 10.8% | $1,389 | 13.3% | 19.8% |
| 3. Cisco | $1,016 | 6.6% | $947 | 9.1% | 7.2% |
| 4. Lenovo\* | $822 | 5.3% | $254 | 2.4% | 223.5% |
| 4. Inspur\* | $691 | 4.5% | $299 | 2.9% | 131.0% |
| ODM Direct | $5,337 | 34.6% | $3,508 | 33.7% | 52.2% |
| Others | $3,525 | 22.8% | $2,577 | 24.8% | 36.8% |
| Total | $15,442 | 100.0% | $10,407 | 100.0% | 48.4% |

Source: IDC Worldwide Quarterly Cloud IT Infrastructure Tracker, Q2 2018
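To make the relationship between the table's columns explicit, the short Python sketch below (our own illustration; the `REVENUE` dictionary and `summarise` function are hypothetical names, not part of IDC's tracker) recomputes each vendor's market share as its revenue divided by the quarter's total, and growth as 2Q18 revenue over 2Q17 revenue minus one. Because the table's revenues are rounded to the nearest million, recomputed growth rates can differ from IDC's published ones by around 0.1 percentage point (Dell comes out at about 66.5% rather than 66.4%, for instance).

```python
# 2Q18 and 2Q17 vendor revenue in US$ millions, as listed in the IDC table.
REVENUE = {
    "Dell Inc": (2386, 1433),
    "HPE/New H3C Group": (1665, 1389),
    "Cisco": (1016, 947),
    "Lenovo": (822, 254),
    "Inspur": (691, 299),
    "ODM Direct": (5337, 3508),
    "Others": (3525, 2577),
}


def summarise(revenue):
    """Return both quarterly totals and, per vendor,
    (2Q18 share %, 2Q17 share %, year-over-year growth %)."""
    total_now = sum(now for now, _ in revenue.values())
    total_prev = sum(prev for _, prev in revenue.values())
    rows = {
        name: (
            100 * now / total_now,    # share of the 2Q18 market
            100 * prev / total_prev,  # share of the 2Q17 market
            100 * (now / prev - 1),   # revenue growth, 2Q18 vs 2Q17
        )
        for name, (now, prev) in revenue.items()
    }
    return total_now, total_prev, rows


if __name__ == "__main__":
    total_now, total_prev, rows = summarise(REVENUE)
    growth = 100 * (total_now / total_prev - 1)
    print(f"Total: ${total_now:,}M vs ${total_prev:,}M, growth {growth:.1f}%")
    for name, (share_now, share_prev, vendor_growth) in rows.items():
        print(f"{name:<20} {share_now:5.1f}% {share_prev:5.1f}% {vendor_growth:6.1f}%")
```

The recomputed totals match the table's $15,442 million and $10,407 million, confirming that the vendor rows, ODM Direct and Others together account for the whole market.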

F5 Networks has unveiled EMEA's first-ever Future of Multi-Cloud (FOMC) report, highlighting game-changing trends and charting adaptive best practice over the next five years.

The F5-commissioned report was conducted by the Foresight Factory and features exclusive input from influential global cloud experts specialising in entrepreneurialism, cloud architecture, business strategy, industry analysis, and relevant technological consultancy.

"The Future of Multi-Cloud report is a unique vision for how organisations can successfully navigate an increasingly intricate, cloud-centric world. The stakes are higher than ever, and businesses that ignore the power of the multi-cloud today will significantly struggle in the next five years," said Vincent Lavergne, RVP, Systems Engineering, F5 Networks.

The FOMC report comes at a time of significant cloud receptivity.

According to the figures cited in the FOMC report, 81% of global enterprises claim to have a multi-cloud strategy in place. (1) Meanwhile, the Cisco Global Cloud Index estimates that 94% of workloads and compute instances will be processed by cloud data centres by 2021. (2)

"The multi-cloud is a game-changer for both business and consumers. It will pave the way for unprecedented innovation, bringing cloud architects, DevOps, NetOps and SecOps together to pioneer transformational services traditional infrastructures simply cannot deliver. The outlook for the coming years is bright and full of potential," said Josh McBain, Director of Consultancy, Foresight Factory.

A new era of business innovation

The FOMC consensus is that those delaying multi-cloud adoption will become increasingly irrelevant.

New levels of service specialisation will increasingly allow enterprises to find the best tools for their specific needs, enabling seamless scaling and rapid service delivery innovations. Technologies set to drive this transition include serverless architectures, as well as artificial intelligence-powered orchestration layers and configuration tools to aid data-driven decision-making. Fear of vendor lock-in is expected to continue as a key justification for multi-cloud investments.

"The multi-cloud ramp-up is one of the ultimate wake-up calls in internal IT to get their act together," said Eric Marks, VP of Cloud Consulting at CloudSpectator and a FOMC contributor.

"One of the biggest transformative changes is the realisation of what a high performing IT organisation is and how it compares to what they have. Most are finding their IT organisations are sadly underperforming."

Plugging the skills gap

The FOMC report cautions that the multifaceted logistics of monitoring multiple cloud services, containers, APIs and other processes can be daunting and inhibit technology uptake. A significant skills gap also exists to handle this added complexity both now and into the future. Across the world, available workforces are not keeping up with the pace of innovation, with potentially damaging results to business productivity and digital transformation capacity. Knowledge silos or lack of collaboration within businesses may further exacerbate multi-cloud apprehension.

Looking ahead, the FOMC report urges the business community to do more to "tap into the kaleidoscopic potential of youth and promote industry diversity." It also calls on the IT industry to better promote the use of smart, context-driven and automated solutions that can spark attractive new career opportunities and free existing workforces to focus on more strategic and rewarding work.

Safeguarding the future and building trust

Attack surfaces are expanding at exponential rates. The FOMC emphasises how cybercriminals are no longer tinkering hobbyists but instigators of a new "hacking economy" that can outpace business innovation. To remain competitive, organisations need to confront the security challenge head on without compromising quality of service. Implementing a robust, future-proofed ecosystem of integrated security and cloud solutions will help to build end-to-end IT services that give key stakeholders greater context, control, and visibility into the threat landscape.

Coping with compliance

The FOMC posits that the intricacies of regulating a borderless digital world are one of the biggest challenges facing governments today. Swift and substantive collaborative action between business and government is needed. Ultimately, the FOMC report argues that a global standard for data protection is required within five years.

"Eventually, today's tech-conscious consumers and customers will only want to be associated with the most trustworthy data handlers. There is now a big opportunity to differentiate with best practice and service delivery, particularly in the context of multi-cloud's potential," added McBain.

Ninety percent of European organisations expect IT budgets to grow or stay steady in 2019.

Spiceworks has published its annual 2019 State of IT Budgets report that examines technology investments in organisations across North America and Europe. The results show 90 percent of European companies expect their IT budgets to grow or remain flat in 2019. Among those planning to increase budgets, 65 percent are driven by the need to upgrade outdated IT infrastructure.

The results show 34 percent of European organisations expect their IT budgets to increase in 2019, while 56 percent expect them to remain flat year over year. Organisations that expect IT budget increases next year anticipate a 21 percent increase on average. Only 6 percent of European companies expect a decrease in IT budgets in 2019, compared to 9 percent in 2018.

Among European businesses boosting their IT budgets in 2019, growing security concerns were the second biggest driver of budget increases behind the need to upgrade outdated infrastructure. Sixty percent of European businesses reported growing security concerns as a top driver of budget increases, followed by an increased priority on IT projects at 57 percent. Compared to their counterparts in North America, European businesses were more likely to increase IT budgets due to changes in regulations, such as GDPR (45 percent in Europe vs. 30 percent in North America), and due to currency fluctuations (14 percent in Europe vs. 5 percent in North America).

IT budget allocations: European organisations boost hardware and cloud budgets while reducing software spend

European organisations plan to spend 36 percent of their budgets on hardware purchases, up by 5 percentage points year over year. Software budget allocations decreased by 2 percentage points to 24 percent in 2019, while cloud service budgets increased by 1 percentage point to 20 percent. Budgets for managed IT services remained steady year over year at 16 percent.

Compared to their North American counterparts, European businesses are allocating more of their budgets toward hardware and managed IT services, and slightly less toward software.

European budget highlights within each category include:

Technology purchase decisions: ITDMs make the decision, BDMs sign the checks

Spiceworks also examined the roles various individuals play in the technology purchase process. Business line directors are involved in the technology purchase decisions in 37 percent of European companies, the owner/CEO is involved in 35 percent of organisations, and finance managers are involved in 30 percent.

However, IT decision makers (ITDMs) are more likely to be the sole decision maker across all technology categories when compared to business decision makers (BDMs). ITDMs are most likely to be the sole decision maker for networking solutions, backup/recovery, computing devices and physical server purchase decisions. When involved, BDMs are more likely to either sign off on final approval or veto the deal after ITDMs have made their vendor and product selection.

“When it’s time to upgrade or purchase new tech, organisations entrust IT professionals to find the best solution to meet the needs of the business,” said Peter Tsai, senior technology analyst at Spiceworks. “For major tech purchases, the CEO or finance manager may be involved to sign on the dotted line, but in most cases, it’s the IT decision maker who conducts the in-depth research, evaluates the vendors, and ultimately chooses the best solution for the business.”

Server Technology's multiple award-winning High Density Outlet Technology (HDOT) has been improved with our Cx outlet. The HDOT Cx PDU welcomes change as data center equipment is replaced. The Cx outlet is a UL-tested hybrid of the C13 and C19 outlets, accommodating both C14 and C20 plugs. This innovative design reduces the complexity of the selection process while lowering end costs.

It sounds suspiciously logical, and very, very sensible, but the revelation that Channel organisations need to pay more attention to what their customers want was the major theme for the recent Managed Services + Hosting (MSH) Summit.

From the first keynote onwards, the importance of the customer was centre stage at the MSH Summit. While customer relations have always been important, it seems that the ‘sell and forget’ mentality of the traditional channel model – perhaps not strictly accurate, but rather too prevalent nonetheless – guarantees failure. Customers want their channel suppliers to understand their needs, to work alongside them and, above all, to be able to trust them. After all, responsibility for IT is increasingly devolving from the end user (who, once upon a time, bought tin and fitted it all together, or got an SI to do this) to the channel and service providers, who not only sell solutions but then have to run and support them on behalf of the customer. In simple terms, MSPs are becoming the IT departments for many end users.

Bearing in mind this sea change, MSPs need to spend time and effort on researching their (potential) customers’ needs – right from how they choose to source their IT, what IT solutions they require, and what level of ongoing support needs to be provided.

First up, customers want to know about their potential suppliers. Although the quoted figure might vary, it seems safe to report that over half of the end user buying cycle takes place before any human contact is established. This means that customers are researching possible suppliers via a mixture of traditional and modern, multi-media channels. A muddled, messy and out-of-date website is unlikely to have new customers knocking down an MSP’s virtual or physical door, yet all too many suppliers seem content to operate their cyber-presence in this way.

What is required is clear, concise and accurate information about services the MSP provides – with clear emphasis on what makes the organisation different and not just another ‘me too’ provider. Customer service is a major potential differentiator, with more and more Cloud and managed services being based on the commoditised services of the web giants. Vertical industry specialisation is another valid approach – with an MSP demonstrating how well they know the problems faced by a specific industry sector, and how they can help offer targeted solutions.

Additionally, there needs to be the realisation that the IT buying cycle is no longer the sole remit of the IT department. Increasingly, any or all company departments are having a say in the specification and purchasing of IT products and services. Yes, the IT department might have the final say, or veto, but it’s no longer enough to target the professionals when it comes to selling – well-informed ‘amateurs’, armed with knowledge acquired from the technology they use in their private lives, can be key influencers in the final purchasing decision.

To summarise – MSPs need to review their customer intelligence, their own positioning and their marketing. As more than one speaker explained, this new sales model requires an ‘outside in’ approach – the customer’s needs come first, with everything else falling into place around them.

In terms of specific opportunities, the security market is clearly the biggest market right now. While huge end user corporations might have their own security professionals capable of carrying the fight to the hackers, the SME market is especially vulnerable to cyber attacks, as it has little or no in-house security expertise. No one can protect against zero-day attacks, but a surprising number of companies have a surprising number of vulnerabilities to existing, well-known problems. For example, basic patch management practices are alarmingly absent from many organisations (the infamous NHS attack being one of the more high-profile casualties of failing to apply available patches).

Another opportunity for MSPs is, somewhat depressingly, the fact that the incumbent IT supplier may be doing such a bad job that the customer will welcome the approach of an organisation that appears to know what it is doing and can back up this talk with a concrete solution that delivers what is required and, importantly, continues to deliver. In one quoted example, a customer had been unable to access their data for three days, had to contact their IT supplier to tell them this, and the supplier then took a further three days to ‘rescue’ just some of the data. With the bar set so low, a well-organised and resourced MSP might just find plenty of opportunities to win new business!

How these opportunities are developed is crucial to success. Taking weeks, or even months, to talk to the customer from board level right down to the office/shop floor, to understand existing problems, future requirements, the nice-to-haves and the essentials, should ensure that the proposed solution meets the customer’s expectations – especially if a technology roadmap has been agreed, with a timeline and key outcomes defined. Such a comprehensive approach to sales should pay off for both supplier and customer.

And once the business has been won, MSPs need to ensure that they keep in regular, meaningful contact with the customer. One speaker explained how his organisation contacts the customer weekly and monthly, as well as for quarterly and annual strategic reviews.

As for the vertical approach, well, whisper it quietly, in a room where grey, or at least greying, hair and suits proliferated (myself included), it was refreshing to listen to two young entrepreneurs – hosting supplier and customer – talk about the digital agency market. Robert Belgrave and Jim Bowes were the clearest evidence of the day that the IT market is changing rapidly and frequently and that, if suppliers and their customers don’t change with it, then the abyss awaits.

Most notable was Robert’s admission that his hosting company’s initial success was under threat from the commoditisation offered by the hosting giants, so they decided to work with this change, establishing a consulting service that would guide customers – new and potential – through the managed services and cloud maze. The result is that, while some customers stick with Robert’s company’s own hosting service, others go the commoditised route, with added-value services and support supplied by Wirehive.

So, flexibility is, perhaps, the final piece of the MSP jigsaw. Of course, planning requires certain assumptions to be made as to the technologies and issues that end users need to address. However, game-changing disruption is, potentially, only a sleep away. Wake up to find that the rules have changed and you can either waste time moaning about how unfair the new rules are, or spend your time and energy adapting to them. And that applies to MSPs and their customers alike.

In summary, wherever you sit in the IT ecosystem, if you’re not excited by the future, then it just might be time to head for the door marked ‘EXIT’.

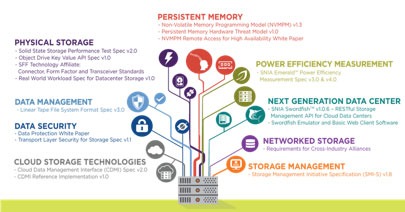

The Storage Networking Industry Association (SNIA) is the largest storage industry association in existence, and one of the largest in IT. It comprises 180 leading industry organizations, over 2,000 active contributing members and more than 50,000 IT and storage professionals worldwide.

By Michael Oros, SNIA Executive Director.

Today, SNIA is the recognized global authority for storage leadership, standards and technology expertise. As such, our mission is to develop and promote vendor-neutral architectures, standards, best practices and educational services that facilitate the efficient management, movement and security of information.

These initiatives are vital in the dynamic fields of data and information. IT is constantly evolving and shifting to provide new efficiencies and valuable business insight with increased data set sizes and larger pools of computational and storage resources. Virtualization, Persistent Memory, the cloud, software-defined data center, hyperconvergence, computational storage, the Internet of Things (IoT), Artificial Intelligence (AI) and machine learning are just a few of the latest waves of innovation that are disrupting traditional IT approaches and are continuing to transform all industries. SNIA stays alert to these trends and is involved on the leading edge of such standards and innovation needs. That enables us to isolate areas that need attention, such as missing interoperability standards or poorly understood emerging technology areas that require industry education. In some cases, it is up to SNIA to champion technologies that open up new vistas for the storage industry and beyond.

These nine technology focus areas are actively supported by Technical Work Groups and Storage Communities that we refer to as Forums and Initiatives. Individuals from all facets of IT dedicate themselves to programs that unite the storage industry with the purpose of taking storage technologies to the next level.

Through these nine focus areas, SNIA leads the storage industry in developing standards and driving broad adoption of new technologies. Our members pave the way for innovation by establishing the best way for platforms to interoperate. It is up to SNIA to provide a forum that aligns the strategic business objectives of the diverse storage vendor community with the need for interoperability and worldwide standards. By contributing to these standards and interoperability initiatives, our members are fostering timely technology adoption that delivers real value and benefit to IT organizations globally.

To stay informed and updated on how SNIA is accelerating the adoption of next-generation storage technologies, please visit www.snia.org. You can also hear the latest from SNIA on our storage blog at www.sniablog.org and on the SNIA YouTube channel. To learn about SNIA global events and meet-ups, please visit www.snia.org/snia_events.

This month’s DCA journal theme is focused on IoT, Smart Cities, Edge Computing and Cloud. These topics are intrinsically linked to one another, and this month’s articles reflect this. Interxion reports on the role an urban data centre plays in a smart city, Vertiv focuses on edge computing, IMS takes us back to IoT basics and Dr Marcin Budak from Bournemouth University runs through a day in the life of a smart city commuter. First, however, just a few thoughts from me to kick off with.

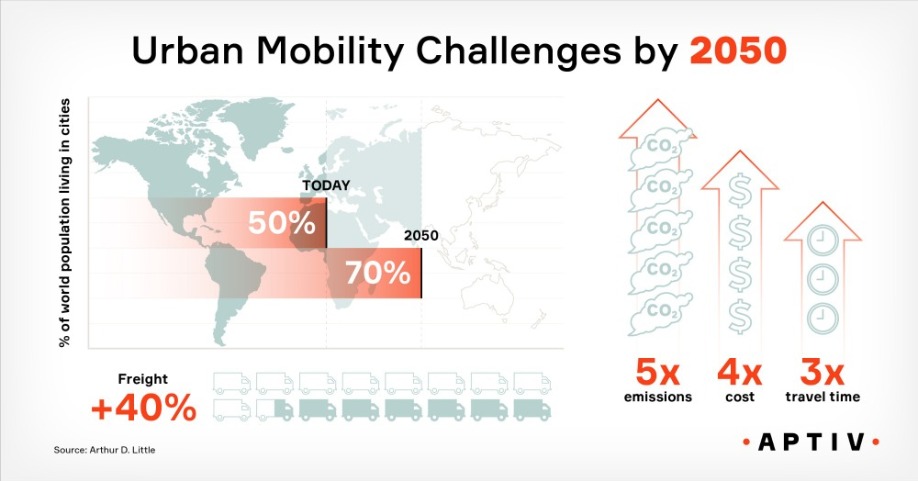

It has been reported recently that by 2050, 66 percent of the world’s population will live in urban locations. It’s important to address the way services and technology are delivered to the growing inner-city population and ensure that these are provided in the most cost-effective manner. In other words, we need to work smarter not harder.

At the forefront of every city’s concerns is the safety of its citizens, whether from a terrorist threat or from general criminal activity, which is closely linked to increased population density. One area seeing rapid growth in the fight against crime is the use of CCTV cameras. IoT can now increase the ability to monitor citizens and keep them safe.

Some would argue this represents nothing more than a Big Brother invasion of an individual’s privacy; others would argue that ‘if you have nothing to hide, you have nothing to fear’. Clearly the use of CCTV isn’t anything new. However, an improved digital infrastructure brings the ability to increase the quality and functionality of surveillance devices to deliver so much more.

Rather than the grainy images we’ve all seen on Crimewatch, the roll-out of new HD facial recognition technology could identify suspicious or dangerous individuals before a crime is committed. It could also help to quickly identify and prosecute individuals once an unlawful act has been committed. Technology to identify a crime in progress is also developing. Although it is not yet needed in many parts of Europe, I read that in Washington D.C., for example, authorities have begun installing “gunshot sound sensors” on CCTV masts. These sensors alert authorities almost immediately to the sound of gunshots. Rather than waiting to be called out, police and emergency services are alerted to firearms incidents in real time, which dramatically decreases response times.

The focus by government and local authorities on IoT-based initiatives is not restricted to improving the security of citizens; it is equally driven by the need to increase the efficiency of community services and, in turn, reduce the cost of delivering them, with a “more for less” motto very much the name of the game.

A quick Google search revealed that an increasing number of pilot projects are being launched, all of them closely monitored with a view to both regional and national rollout. I found projects such as Smart Parking, Smart Street Lighting and Smart Roads (covering everything from congestion and accidents to road damage). The list continues: I found numerous trials relating to everything from smart bins, air pollution and seismic activity to burst water pipe sensors!

It’s not just the list of potential applications that is endless, but also the data these street-level devices will generate. A great deal of this data will need to be collected, stored and analysed somewhere: either locally at the edge, or backhauled to larger, centrally located hosting facilities.

These are exciting times for all DCA members, and the business opportunities for both DC providers and suppliers in supporting this IoT and Smart City revolution, which is only just starting to warm up, are plain to see.

We have decided to switch things around for the next edition of the DCA Journal, with a dedicated focus on Liquid Cooling. If you have a view on this subject and would like to submit an article for review, please email Amanda McFarlane at amandam@dca-global.org or call 0845 873 4587 for more details. The deadline is 20th October 2018.

Finally, I would like to thank everyone who supported and took part in the DCA Charity Golf Day which this year was held at the Warwickshire PGA Golf Course. Many thanks to the team at Angel Business Communications for helping to organise the event for us. Look out for the pictures to follow and don’t forget to pencil it in for next year if you want to take part.

We are moving to a 5G world. It will be one where data is vital to our daily lives. Data now needs to be instantaneous. Any delay, even for an nth of a second, could be catastrophic in an always-on world. That nth could be a tenth of a second, nothing more. But no matter how fast your fibre connection, if information has to travel across several continents to get to your door, there is going to be latency – a time drag, an nth of a second.

Imagine sitting in an autonomous car at 70mph with vehicles whizzing all around you. That nth of a second could be the difference between a collision and a smooth journey home. You are a gamer in an online world. That nth of a second is the difference between a clean shot and a clear miss.
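To put the "nth of a second" in perspective, here is a minimal Python sketch of the underlying arithmetic. It assumes that light in optical fibre travels at roughly 200,000 km/s (about two-thirds of its speed in a vacuum) and ignores routing, queuing and processing delays, so the fibre figures are lower bounds. The 10,000 km and 50 km distances are illustrative assumptions, not figures from the article.

```python
# Rough arithmetic behind the latency argument (illustrative sketch).
# Assumption: light in optical fibre travels at ~200,000 km/s; real networks
# add routing, queuing and processing delays on top of this propagation time.

MPH_TO_METRES_PER_SECOND = 1609.344 / 3600  # 1 mile = 1609.344 m
FIBRE_SPEED_KM_PER_S = 200_000  # approximate figure, assumed for illustration


def distance_travelled_m(speed_mph: float, delay_s: float) -> float:
    """Metres a vehicle covers while it waits delay_s seconds for data."""
    return speed_mph * MPH_TO_METRES_PER_SECOND * delay_s


def fibre_round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay over fibre, in milliseconds."""
    return 2 * distance_km / FIBRE_SPEED_KM_PER_S * 1000


if __name__ == "__main__":
    # A car at 70mph during a tenth of a second of delay.
    print(f"{distance_travelled_m(70, 0.1):.2f} m")
    # A distant data centre (hypothetical 10,000 km path) vs a nearby edge site (50 km).
    print(f"{fibre_round_trip_ms(10_000):.1f} ms vs {fibre_round_trip_ms(50):.1f} ms")
```

Under these assumptions, a car at 70mph covers a little over three metres in a tenth of a second. A 10,000 km fibre round trip alone consumes about that tenth of a second, whereas an edge facility 50 km away adds roughly half a millisecond, which is the intuition behind moving computing closer to the user.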

Smart cities, the Internet of Things (IoT), connected cars, online gaming and machine-to-machine (M2M) communications are all driving the demand for instant connections. This, in turn, is driving the trend towards edge computing: a term used to describe local data centres that are smaller than the hyperscale centres that have come to characterise the data centre industry, yet more critical to the successful deployment of a ‘Smart Anything’.

By bringing computing closer to the user, edge computing reduces that latency and provides the near-instant connectivity that the modern world and mission-critical applications demand.

History to lead the future

As a result, the edge will both benefit from, and drive the development of, more reliable and faster internet connections. It makes for a shared future between the edge and 5G. However, it’s not just the future that connects them - these technologies could in fact benefit from key learnings in each other’s past evolution. The implementation of 4G LTE (long-term evolution) is a great example. Whereas 3G could connect society more easily, 4G truly brought high-speed data connectivity to the masses. It expanded quickly and became integral to society’s day-to-day life.

This suggests that businesses and society will eagerly adopt new developments in 5G as well. Given 5G’s close link with the edge, this implies that the edge needs to be 5G-ready. But how can you be ready for something that’s undefined at the moment? This is not impossible, as it primarily means the edge needs to be communication-ready, and this is already feasible. Vertiv can, for example, offer fully integrated and scalable systems with high-quality communications capabilities.

Another key learning from the 3G/4G/5G evolution is its inherent influence on infrastructure development. Whereas 3G allowed for places of leisure, such as McDonald’s and airports, to become connectivity hubs, 4G enabled the further implementation of technology globally, both in cities and rural areas. With the boost in implementation and usage also came an increased need for more robust, secure edge resources.

Similarly, the edge will see a change in infrastructure needs with the progress of 5G. We don’t yet know what 5G cellular architectures will look like. However, we know they will enable more consumer applications, as well as other applications we haven’t yet imagined. This means that telco, cloud and colocation architectures will evolve to meet 5G and edge needs. It is expected that cloud and colocation companies will continue to expand their footprint to provide services closer to users. This, combined with the continued deployment of edge-ready local infrastructure, matched to archetypes and use cases, will create an edge ecosystem that extends far beyond traditional small-space edge deployments.

The current edge

It seems like the direction of future developments is not as mysterious as one might think. So how ready is the industry for 5G and edge computing to really take hold? In an effort to understand and foresee future demand, Vertiv has analysed a variety of emerging use cases, resulting in the ‘Edge Archetypes’ report. The report identified the following four primary edge archetypes:

Developments in 5G cellular networks will both enable and drive the evolution of these archetypes, and the edge in general, to allow for the implementation of edge-dependent applications.

It might seem like these developments are still out of reach and, given their scale, need to be government-driven. However, the commercial sector is more likely to drive this evolution, developing consumer-oriented services and apps that increase demand for the edge’s capabilities.

As a result, with the available knowledge at hand and the opportunity for the commercial sector to drive demand, the realisation of edge computing and 5G cellular networks might just be closer than you think.

The alarm on your smart phone went off 10 minutes earlier than usual this morning. Parts of the city are closed off in preparation for a popular end of summer event, so congestion is expected to be worse than usual. You’ll need to catch an earlier bus to make it to work on time.

The alarm time is tailored to your morning routine, which is monitored every day by your smart watch. It takes into account the weather forecast (rain expected at 7am), the day of the week (it’s Monday, and traffic is always worse on a Monday), as well as the fact that you went to bed late last night (this morning, you’re likely to be slower than usual). The phone buzzes again – it’s time to leave, if you want to catch that bus.

While walking to the bus stop, your phone suggests a small detour – for some reason, the town square you usually stroll through is very crowded this morning. You pass your favourite coffee shop on your way, and although they have a 20% discount this morning, your phone doesn’t alert you – after all, you’re in a hurry.

After your morning walk, you feel fresh and energised. You check in at the Wi-Fi and Bluetooth-enabled bus stop, which updates the driver of the next bus. He now knows that there are 12 passengers waiting to be picked up, which means he should increase his speed slightly if possible, to give everyone time to board. The bus company is also notified, and is already deploying an extra bus to cope with the high demand along your route. While you wait, you notice a parent with two young children, entertaining themselves with the touch-screen information system installed at the bus stop.

Once the bus arrives, boarding goes smoothly: almost all passengers were using tickets stored on their smart phones, so there was only one time-consuming cash payment. On the bus, you take out a tablet from your bag to catch up on some news and emails using the free on-board Wi-Fi service. You suddenly realise that you forgot to charge your phone, so you connect it to the USB charging point next to the seat. Although the traffic is really slow, you manage to get through most of your work emails, so the time on the bus is by no means wasted.

The moment the bus drops you off in front of your office, your boss informs you of an unplanned visit to a site, so you make a booking with a car-sharing scheme, such as Co-wheels. You secure a car for the journey, with a folding bike in the boot.

Your destination is in the middle of town, so when you arrive on the outskirts you park the shared car in a nearby parking bay (which is actually a member’s unused driveway) and take the bike for the rest of the journey to save time and avoid traffic. Your travel app gives you instructions via your Bluetooth headphones – it suggests how to adjust your speed on the bike, according to your fitness level. Because of your asthma, the app suggests a route that avoids a particularly polluted area.

After your meeting, you opt to get a cab back to the office, so that you can answer some emails on the way. With a tap on your smartphone, you order the cab, and in the two minutes it takes to arrive you fold up your bike so that you can return it to the boot of another shared vehicle near your office. You’re in a hurry, so no green reward points for walking today, I’m afraid – but at least you made it to the meeting on time, saving kilograms of CO2 on the way.

Get real

It may sound like fiction, but truth be told, most of the data required to make this day happen are already being collected in one form or another. Your smart phone is able to track your location, speed and even the type of activity that you’re performing at any given time – whether you’re driving, walking or riding a bike.

Meanwhile, fitness trackers and smart watches can monitor your heart rate and physical activity. Your search history and behaviour on social media sites can reveal your interests, tastes and even intentions: for instance, the data created when you look at holiday offers online not only hints at where you want to go, but also when and how much you’re willing to pay for it.

Personal devices aside, the rise of the Internet of Things with distributed networks of all sorts of sensors, which can measure anything from air pollution to traffic intensity, is yet another source of data. Not to mention the constant feed of information available on social media about any topic you care to mention.

With so much data available, it seems as though the picture of our environment is almost complete. But all of these datasets sit in separate systems that don’t interact, managed by different entities which don’t necessarily fancy sharing. So although the technology is already there, our data remains siloed with different organisations, and institutional obstacles stand in the way of attaining this level of service. Whether or not that’s a bad thing is up to you to decide.

Think about some of the busiest cities in the world. What sort of picture springs to mind? Are you thinking about skyscrapers reaching into the atmosphere, competing for space and attention? Perhaps you’re thinking about bright lights, neon signs and the hustle and bustle of daily life. Chances are that the city you’re thinking of – New York, Tokyo, Singapore – is actually a smart city.

Cities earn the ‘smart’ label based on their performance against certain criteria – human capital (developing, attracting and nurturing talent), social cohesion, economy, environment, urban planning and, very importantly, technology. Speaking specifically to this last point, real-life smart cities are less about flying cars and more about how sensors and real-time data can bring innovation to citizens.

And the key to being ‘smart’ is connectivity, ensuring that whatever citizens are trying to do – stream content from Netflix, drive an autonomous vehicle or save money through technology use in the home – they can do so with ease, speed and without disruption. Crucial to this, and often the overlooked piece of the connectivity puzzle, is the urban data centre. Urban data centres are the beating heart of all modern-day smart cities, and we’ll explore why here.

Becoming smart

Just as Rome wasn’t built in a day, neither was a smart city. Time for some number crunching. According to the United Nations, there will be 9.8 billion of us on Earth by 2050. Now, consider the number of devices you use on a daily basis, or within your home – smartphones, laptops, wearables, smart TVs or even smart home devices. Now multiply that by the number of people there will be in 2050, and you get a staggering number of devices all competing for the digital economy’s most precious commodity – internet access. In fact, Gartner predicted that there would be 20.4 billion connected devices in circulation by 2020. At a smart city level, this means meeting this escalating demand for the fastest connection speeds with unparalleled supply. Connectivity is king, and without it cities around the world will come screeching to a halt. To keep up with the pace of innovation, we need a connectivity hub that will keep our virtual wheels turning – the data centre.

Enter the urban data centre

In medieval times, cities protected their most prized assets, people and territories by building strongholds to bolster defences and ward off enemies. These fortresses were interconnected by roads and paths that would enable the exchange of people and goods from neighbouring towns and cities. In today’s digital age, these strongholds are data centres; as we generate an eye-watering amount of data from our internet-enabled devices, data centres are crucial for holding businesses’ critical information, as well as enabling the flow of data and connectivity between like-minded organisations, devices, clouds and networks. As we build more applications for technology – such as those you might find in a typical smart city – this flow needs to be smoother and quicker than ever.

Consider this – according to a report by SmartCitiesWorld, cities consume 70% of the world’s energy and by 2050 urban areas are set to be home to 6.5 billion people worldwide, 2.5 billion more than today. With this in mind, it’s important that we address areas such as technology, communications, data security and energy usage within our cities.

This is why urban data centres play a key role in the growth of smart cities. As organisations increasingly evolve towards and invest in digital business models, it becomes ever more vital that they house their data in a secure, high-performance environment. Many urban data centres today offer a diverse range of connectivity and cloud deployment services that enable smart cities to flourish. Carrier-neutral data centres even offer access to a community of fellow enterprises, carriers, content delivery networks, connectivity services and cloud gateways, helping businesses transform the quality of their services and extend their reach into new markets.

The ever-increasing need for speed and resilience is driving demand for data centres located in urban areas, so that there is no disruption or downtime to services. City-based data centres offer businesses close proximity to critical infrastructure, a richness of liquidity and round-the-clock maintenance and security. Taking London as an example, the city is home to almost one million businesses, including three-quarters of the Fortune 500 and one of the world’s largest clusters of technology start-ups. An urban data centre is the perfect solution for these competing businesses to access connectivity and share services, to the benefit of the city’s inhabitants and the wider economy.

The future’s smart

London mayor Sadiq Khan recently revealed his aspiration for London to become the world’s smartest city by 2020. It is an ambitious goal, but London’s infrastructure can more than keep pace. Urban data centres will play a significant role in helping the city not only to meet this challenge but also to become a magnet for ‘smart tech’ businesses looking to position themselves at the heart of the action. The data centre is already playing a critical role, not just in London but globally, in helping businesses to innovate and achieve growth. And as cities become more innovative with technological deployments, there’s no denying that smart cities and urban data centres are a digital marriage made in heaven.

The application of IoT is booming, with new use cases arising near enough daily. But, despite this growth, the sector risks inertia if businesses lose sight of the key objective digitisation was founded upon: improving day-to-day experiences.

Yes, a big part of IoT is creating more efficient processes. But to truly deliver, those efficiencies must translate into outcomes that resonate with customers, from the quality of the product to meeting environmental pledges and reducing wastage. That cannot be achieved by automating processes alone; it requires automating outcomes, as Jason Kay, CCO, IMS Evolve, explains.

Digitisation Falters

Pinpointing the reason for organisations’ growing failure to make the expected progress towards successful digitisation is a challenge. Choice fatigue, given the diversity of innovative technologies? Over-ambitious projects? An insistence by some IT vendors that digitisation demands high-cost, high-risk rip-and-replace strategies? In many ways, each of these issues is playing a role, but they are the symptoms, not the cause. The underlying reason for the stuttering progress towards effective digitisation is that the outcomes being pursued are simply not aligned with the core purposes of the business.

Siloed, vertical digitisation developments typically target short-term efficiency and process improvements. They are often isolated, which means that as and when challenges arise, it is a simple management decision to call time on the development: why persist with a digitisation project that promised a marginal gain in process efficiency at best, if it fails to address core business outcomes such as customer experience?

Accelerating the digitisation of an organisation requires a different approach and brave new thinking. While disruptive projects and strategies can threaten existing business models, when executed correctly they can in fact create opportunities for new business models, exploration and a new approach to the market. By focusing on the core aspects of the business, organisations can not only identify opportunities to drive down cost but also deliver measurable value in line with clearly defined outcomes.

Reconsidering Digitisation

In many ways the IT industry is complicit in this situation: on the one hand offering the temptation of cutting-edge and compelling new technology, from robots to augmented reality, and on the other insisting that digitisation requires multi-million pound investments, a complete technology overhaul and massive disruption to day-to-day business. It is therefore challenging for organisations to create viable, deliverable long-term digitisation strategies, and this confusion will continue if organisations focus on the novelty element and fail to move away from single, process-led goals.

Achieving the true potential that digitisation offers will demand cross-organisational rigour that focuses on the business’s primary objectives. Without this rigour and outcome-led focus, organisations will not only persist in pointless digitisation projects that fail to add up to a consistent strategy but, concerningly, will also miss the opportunity to leverage existing infrastructure to drive considerable value.

Consider the impact of an IoT layer deployed across refrigeration assets throughout the supply chain to monitor and manage temperature. A process-based approach would focus on improving efficiency, and the project might use rapid access to refrigeration monitors and controls, in tandem with energy tariffs, to reduce energy consumption and cost. However, if such a project is defined only by this single energy-reduction goal, then once the initial cost benefits have been achieved there is a risk that management will notice the lack of ongoing benefits and lose interest. Yet digitisation of the cold chain also has a fundamental impact on multiple corporate outcomes, from customer experience to increasing basket size and reducing wastage; it is – or should be – about far more than incremental energy cost reduction.
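To make the difference between a process goal and an outcome goal concrete, here is a minimal sketch of tariff-aware setpoint planning for a single refrigeration asset. It is illustrative only and not IMS Evolve’s method: the names `SafeBand` and `plan_setpoints`, the safety margin and the linear price-to-temperature mapping are all hypothetical assumptions. The energy goal (run colder when power is cheap, warmer when it is expensive) is only allowed to act inside a product-safe temperature band, which is where the food-quality outcome enters the control logic.

```python
from dataclasses import dataclass


@dataclass
class SafeBand:
    """Hypothetical product-safe temperature band, in degrees Celsius."""
    low: float
    high: float


def plan_setpoints(tariffs, band, margin=0.5):
    """Return one setpoint per tariff period (hypothetical scheme).

    Cheap periods get colder setpoints (pre-cooling), expensive periods
    get warmer ones, but every setpoint stays inside the safe band minus
    a safety margin, so energy savings never compromise product quality.
    """
    if not tariffs:
        return []
    lo = band.low + margin   # coldest setpoint allowed
    hi = band.high - margin  # warmest setpoint allowed
    if lo > hi:
        raise ValueError("band too narrow for the chosen margin")
    cheapest, dearest = min(tariffs), max(tariffs)
    setpoints = []
    for price in tariffs:
        if dearest == cheapest:
            frac = 0.5  # flat tariff: sit in the middle of the allowed range
        else:
            frac = (price - cheapest) / (dearest - cheapest)
        # Linear interpolation: cheapest price -> lo, dearest price -> hi
        setpoints.append(round(lo + frac * (hi - lo), 2))
    return setpoints


if __name__ == "__main__":
    # Three hypothetical tariff periods for a chiller with a 0-5 °C band
    print(plan_setpoints([0.10, 0.30, 0.20], SafeBand(0.0, 5.0)))
```

A real cold-chain deployment would also have to model thermal mass, door openings and how quickly product temperature follows the air setpoint; the sketch deliberately leaves those out.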

Supporting Multiple Business Outcomes

Incorrect cooling can have a devastating impact on food quality. From watery yoghurt to sliced meat packages containing pools of moisture and browning bagged salad, the result is hardly an engaging brand experience. These off-putting appearances can threaten not only customer perception but also basket size, yet the industry’s acceptance of this inefficiency is evident in excessive over-compensation across the supply chain. To ensure that the products presented to customers on the shelves are aesthetically appealing, retailers globally rely on overstocking with a view to disposing of any poorly presented items. The result is unnecessary overproduction by producers and a considerable contribution to the billions of pounds’ worth of food wasted every year throughout the supply chain.

Where does this supply chain strategy leave the brand equity with regard to energy consumption, environmental commitment and minimising waste? Or, for that matter, the key outcomes of improving customer experience, increasing sales and reducing stock? Only by approaching the digitisation of the cold chain with an outcomes-based mindset, as a project that embraces not only energy cost reduction but also customer experience, food quality, minimising wastage and supporting the environment, can organisations grasp its full significance, relevance and corporate value.

Furthermore, this is a development that builds on an existing and standard component of the legacy infrastructure. It is a project that can overlay digitisation to drive value from an essentially dull aspect of core retail processes and one that can deliver return on investment, whilst also improving the customer experience.

Reinvigorating Digitisation Strategies

If digitisation is to evolve from point deployments of mixed success towards an enduring, strategic realisation, two essential changes are required. Firstly, organisations need to consider what can be done with the existing infrastructure to drive value. How, for example, can digitisation be overlaid onto existing control systems to optimise the way car park lights are turned on and off, to better meet environmental brand equity and reduce costs? In the face of bright, shiny disruptive technologies, it is too easy to overlook this essential aspect of digitisation: the chance to breathe new life and value into existing infrastructure.

Secondly, companies need to determine how to align digitisation possibilities not with single process goals but with broad business outcomes: from a better understanding of macro-economic impacts, all the way back through the supply chain to the farmer to battle the global food crisis, to assessing the impact on the customer experience. And that requires collaboration across the organisation. By involving multiple stakeholders and teams, from energy efficiency and customer experience to waste management, a business gains not only a far stronger business case but also a far broader commitment to realising the project.

Combining engaged, cross-functional teams with an emphasis on leveraging legacy infrastructure offers multiple business wins. It enables significant and rapid change without disruption; in many cases digitisation can be added to existing systems and rapidly deployed at a fraction of the cost proposed by rip and replace alternatives. Using proven technologies drives down the risk and increases the chances of delivering quick return on investment, releasing money that can be reinvested in further digital strategies. Critically, with an outcome-led approach, digitisation gains the corporate credibility required to further boost investment and create a robust, consistent and sustainable cross-business strategy.

Sustainability has been a theme within the IT industry since the introduction of the Energy Star label for hardware. Since 2007, sustainable digital infrastructure has become an international topic, shaped by a variety of regional organisations running green IT programmes to reduce the footprint of each layer of digital infrastructure, including datacentres. Reducing the overall footprint of the datacentre industry is an impossible challenge, because the rate of growth in the adoption of digital services, and therefore in the need for infrastructure, has outpaced the rate at which the energy footprint can be reduced. Industry orchestration will be needed if this challenge is to be met and new standards for datacentre sustainability are to be set. Years have passed and excess datacentre heat reuse is still not delivering on its promise, often because the technological, organisational and operational elements cannot be matched. Recently, stakeholders have been aiming to change that by stimulating a new role for datacentres, which is the transformation we still need to see on a large scale: from energy consumers to flexible energy prosumers in smart cities. This is possible with technologies available today: the future is now!

FROM ENERGY CONSUMER TO ENERGY PRODUCER

The global move to cloud-based infrastructures and the Internet of Things (IoT) generates high demand for datacentre capacity and high network loads. The energy demand of datacentres is rising so quickly that it is causing serious issues for energy grid operators, (renewable) energy suppliers and governments. Grid operators and energy suppliers can hardly keep up with the demand in the large datacentre hubs, let alone ensure that enough renewable energy generation is available where the demand arises. Not only does this raise questions of sustainability at every level; the demand for flexibility and high loads also requires a different approach to the business model of the datacentre. The ultimate challenge is for the industry to become energy neutral.

The key to resolving this challenge is the adoption of liquid cooling techniques in all their forms. Asperitas is committed to approaching this challenge head-on by taking an active role in discarding the limitations of today’s IT systems and datacentre infrastructures, to find new ways to drastically improve datacentre efficiency. This can help to develop a future in which the datacentre is transformed from energy consumer to energy producer. These excerpts from our whitepaper present our vision of the datacentre of the future: a datacentre that faces the challenges of today and is ready for the opportunities of tomorrow.

With all these advantages, liquid offers solutions that are just not attainable in any other way. This is why liquid is the future for datacentres. But what does this future look like? Which liquid technologies are available and what does this mean for the infrastructure of the datacentre?

In the next chapter, we outline the basic liquid technologies operating in datacentres today. After that we explore the most beneficial environment for these technologies: a hybrid temperature chain. Further on, a model of connected, distributed datacentre environments is introduced. By committing to liquid, Temperature Chaining and the distributed datacentre model, the datacentre of the future transforms from energy consumer to energy producer. This approach will drastically reduce the carbon impact of datacentres while stimulating the energy efficiency of unrelated energy-consuming industries and consumers.

THE HYBRID INFRASTRUCTURE

The introduction of water into the datacentre whitespace is most beneficial within a purpose-built set-up. The focus of the datacentre design must be on absorbing all the thermal energy with water. This calls for a hybrid environment in which different liquid-based technologies co-exist to allow for the full range of datacentre and platform services, regardless of the type of datacentre.

Immersed Computing® provides easily deployable, scalable local edge solutions. These allow heat to be rejected to whatever reuse scenario is present, such as thermal energy storage, domestic hot water or city heating. If no recipient of heat is available, a dry cooler alone is sufficient. This reduces or even eliminates the need for overhead installations such as coolers in edge environments, providing a different perspective on datacentres. Geographic locations become easier to qualify, and large numbers of micro installations can be easily deployed with minimal requirements. These datacentres will be integrated into existing district buildings or multifunctional district centres. A convenient location for the datacentre is a place where heat energy will be utilised throughout the whole year. Datacentres can also be placed as separate buildings in residential and industrial areas. This creates the potential for a connected datacentre web consisting mainly of two types of datacentre environment: large facilities (Core Datacentres), positioned on the edge of urban areas or even farther away, and micro facilities (Edge Nodes), which are focused on optimising the large network infrastructure and are all interconnected with each other and with all core datacentres.
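The heat-rejection idea above (send heat to whatever reuse recipient exists, and fall back to a dry cooler) can be sketched as a simple greedy allocation. This is a hypothetical illustration, not Asperitas’ control logic: the function name `allocate_heat`, the sink names, the priority ordering and the fixed kW capacities are all assumptions made for the example.

```python
def allocate_heat(heat_kw, sinks):
    """Greedily assign heat (kW) to reuse sinks in priority order.

    `sinks` is a list of (name, capacity_kw) pairs, highest priority first
    (hypothetical, e.g. thermal storage before district heating). Any heat
    no sink can absorb is rejected through a dry cooler.
    """
    if heat_kw < 0:
        raise ValueError("heat_kw must be non-negative")
    allocation = {}
    remaining = heat_kw
    for name, capacity_kw in sinks:
        take = min(remaining, max(capacity_kw, 0.0))
        if take > 0:
            allocation[name] = take
            remaining -= take
    # Fallback: with no (or insufficient) recipients, the dry cooler takes the rest
    allocation["dry_cooler"] = remaining
    return allocation


if __name__ == "__main__":
    sinks = [("thermal_storage", 30.0), ("district_heating", 50.0)]
    print(allocate_heat(100.0, sinks))
```

With no recipients at all, the whole load goes to the dry cooler, which matches the text’s point that a dry cooler alone is sufficient when there is nowhere to send the heat.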

The main purpose of the edge nodes is to reduce the overall network load and act as an outpost for IoT applications, content caching and high bandwidth cloud applications. The main function of the core datacentres is to ensure continuity and availability of data by acting as data hubs and high capacity environments.

This is an excerpt from an Asperitas whitepaper, The Datacentre of the Future, authored by Rolf Brink, CEO and founder of Asperitas, and originally published in The DCA Journal in June 2017. http://asperitas.com/resource/immersed-computing-by-asperitas/

With recent reports outlining how blockchain is set to make a significant impact in both the environmental and the pharmaceutical and life sciences industry sectors, Digitalisation World sought a range of opinions as to how blockchain will develop outside of its cryptocurrency and gaming sweet spots.