By now I suspect all our DW readers know my views on the hype versus the reality of GDPR. Although it might be foolish to write off the compliance regulation as simply another Y2K, there’s no doubt that the sheer volume of noise surrounding the new EU legislation is not in any way justified.

Anyhow, let’s move on to more positive topics – things that businesses can do, as opposed to those that, apparently, they no longer can! This issue of DW has a great selection of articles covering a wide range of topics, many of which, I’m sure, are set to have (and may well already be having) a significant impact on the business world, and will be doing so long after GDPR has been forgotten about.

The longer I work in IT, the more I’m struck by how the pace of change just keeps on increasing. Once upon a time, as with, say, virtualisation (the one that VMware spearheaded, not the one that the experts will tell you existed when Noah was a boy playing with his mainframe computer), there was a long period of gestation (several years) as the IT supply chain, from OEMs through to end users, familiarised itself with the technology, and then came the very cautious implementation phase – a few brave early adopters followed by more and more companies. Virtualisation matured over possibly as long as a 10-year cycle.

Fast forward and IoT, containers, DevOps, Artificial Intelligence, 5G, Artificial Reality are no sooner talked about than products and solutions are out there and end users are using this stuff for real, and not just in a test environment. More and more businesses are understanding that they have to move fast just to stay still, as the disruptors force the pace.

As for the next game changer? Well, it may not even have been thought about right now, but I’ll wager that end users will be using it within the next 12-18 months!

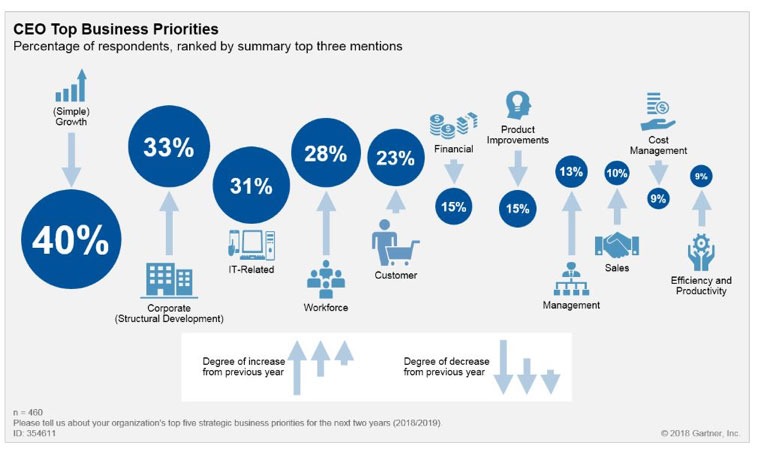

Growth, Corporate Strategies, IT and Workforce Issues Continue to Top CEOs' Priorities in 2018

Growth tops the list of CEO business priorities in 2018 and 2019, according to a recent survey of CEOs and senior executives by Gartner, Inc. However, the survey found that as simple, implemental growth becomes harder to achieve, CEOs are concentrating on changing and upgrading the structure of their companies, including a deeper understanding of digital business.

"Although growth remains the CEO's biggest priority, there was a significant fall in simple mentions of it this year, from 58 percent in 2017 to just 40 percent in 2018. This does not mean CEOs are less focused on growth, instead it shows that they are shifting perspective on how to obtain it," said Mark Raskino, vice president and Gartner Fellow. "The 'corporate' category, which includes actions such as new strategy, corporate partnerships and mergers and acquisitions, has risen significantly to become the second-biggest priority."

The Gartner 2018 CEO and Senior Business Executive Survey, conducted in the fourth quarter of 2017, examined respondents' business issues, as well as some areas of technology agenda impact. In total, 460 business leaders in organizations with more than $50 million in annual revenue were qualified and surveyed.

IT remains a high priority coming in at the third position, and CEOs mention digital transformation, in particular (see Figure 1). Workforce has risen rapidly this year to become the fourth-biggest priority, up from seventh in 2017. The number of CEOs mentioning workforce in their top three priorities rose from 16 percent to 28 percent. When asked about the most significant internal constraints to growth, employee and talent issues were at the top. CEOs said a lack of talent and workforce capability is the biggest inhibitor of digital business progress.

Figure 1: CEO Top Business Priorities for 2018 and 2019

Source: Gartner (May 2018)

CEOs recognize the need for cultural change

Culture change is a key aspect of digital transformation. The 2018 Gartner CIO Survey found CIOs agreed it was a very high-priority concern, but only 37 percent of CEOs said a significant or deep culture change is needed by 2020. However, when companies that have a digital initiative underway are compared with those that don't, the proportion in need of culture change rose to 42 percent.

"These survey results show that if a company has a digital initiative, then the recognized need for culture change is higher," said Mr. Raskino. "The most important types of cultural change that CEOs intend to make include making the culture more proactive, collaborative, innovative, empowered and customer-centric. They also highly rate a move to a more digital and tech-centric culture."

Digital business matters to CEOs

Survey respondents were asked whether they have a management initiative or transformation program to make their business more digital. The majority (62 percent) said they did. Of those organizations, 54 percent said that their digital business objective is transformational while 46 percent said the objective of the initiative is optimization.

In the background, CEOs' use of the word "digital" has been steadily rising. When asked to describe their top five business priorities, the number of respondents mentioning the word digital at least once has risen from 2.1 percent in the 2012 survey to 13.4 percent in 2018. This positive attitude toward digital business is backed up by CEOs' continuing intent to invest in IT. Sixty-one percent of respondents intend to increase spending on IT in 2018, while 32 percent plan to make no changes to spending and only seven percent foresee spending cuts.

More CEOs see their companies as innovation pioneers

The 2018 CEO survey showed that the percentage of respondents who think their company is an innovation pioneer has reached a high of 41 percent (up from 27 percent in 2013), with fast followers not far behind at 37 percent.

"CIOs should leverage this bullish sentiment by encouraging their business leaders into making "no way back" commitments to digital business change," said Mr. Raskino. "However, superficial digital change can be a dangerous form of self-deceit. The CEO's commitment must be grounded in deep fundamentals, such as genuine customer value, a real business model concept and disciplined economics."

Worldwide revenues for IT Services and Business Services totaled $502 billion in the second half of 2017 (2H17), an increase of 3.6% year over year (in constant currency), according to the International Data Corporation (IDC) Worldwide Semiannual Services Tracker.

For the full year 2017, worldwide services revenues came to just shy of the $1 trillion mark (IDC expects 2018 revenues to cross this threshold). Year-over-year growth was around 4%, which slightly outpaced the worldwide GDP growth rate. The above-GDP growth reflects stronger business confidence bolstered by a brighter economic outlook, a shared sense of urgency for large-scale digital transformation, and, at least in certain pockets and segments, new digital services beginning to offset the commoditization of traditional services.

Looking at different services markets, project-oriented revenues continued to outpace outsourcing and support & training, mainly due to organizations freeing up pent-up discretionary spending from earlier years and feeling the need to "digitize" their organizations via large scale projects. Specifically, project-oriented markets grew 4.6% year over year to $186 billion in 2H17 and 5% to $366 billion for the entire year. Most of the above-the-market growth came from business consulting: its revenue grew by almost 7.8% in 2H17 and 8.2% for the entire year to $115 billion. In large digital transformation projects, high-touch business consultants continue to extract more value than mere IT resources do. Most major management consulting firms posted strong earnings in 2017.

IT-related project services, namely custom application development (CAD), IT consulting (ITC), and systems integration (SI), still make up the bulk (more than two thirds) of the overall project-oriented market. While slower than business consulting, these three markets showed significant improvement over the previous year: CAD, ITC, and SI combined grew by 3.7% year over year to $251 billion for the full year 2017. IDC believes that some 2015 and 2016 projects were either pushed out to or only started ramping up in 2017, which helped to drive up spending in 2H17. This coincides with the strong rebound on the software side as IT project-related services are largely application driven. Because large digital projects not only drive up "new services" but also pull in "traditional services," IDC believes that the actual volume of IT project services grew even faster in 2017 but was offset somewhat by lower pricing.

"As customers look to digital transformation initiatives to stay relevant in the new economy, vendors face both opportunities and challenges," said Xiao-Fei Zhang, program director, Global Services Markets and Trends. "While automation and new cloud delivery models reduce overall price, new digital services will require clients to spend more time and resources to modernize their existing IT environment."

In outsourcing, revenues grew by only 3.3% year over year to $238 billion in 2H17. Application-related managed services revenues (hosted and on-premise application management) outpaced the general market significantly – growing more than 6% in 2H17 and 5.8% for the entire year. Buyers have leveraged automation and cloud delivery to dramatically reduce the cost to operate applications, for example, infusing artificial intelligence into application life-cycle activities to drive better predictive maintenance and application portfolio management. However, in their continuing drive for digital transformation, organizations are increasingly relying on external services providers to navigate complex technical environments and supply talent with new skills (cloud, analytics, machine learning, etc.). Digital transformation also requires organizations to standardize and modernize their existing application assets. Therefore, IDC forecasts application outsourcing markets to continue outpacing other outsourcing markets in the coming years.

On the infrastructure side, while hosting infrastructure services revenue grew by 4.9% in 2H17, positively impacted by cloud adoption, IT Outsourcing (ITO), a larger market, declined by 2%. Combined, the two markets were essentially flat. IDC believes that while overall infrastructure demand remains robust, the ITO market is negatively impacted the most by "cloud cannibalization" across all regions: cloud, particularly public cloud, reduces prices far more than new demand can make up for. For example, IDC estimates that, by 2021, almost one third of ITO services revenue will be cloud related.

On a geographic basis, larger mature markets posted slower growth in 2H17. The United States, the largest services market, grew by 3.5%, in line with the market rate, while Western Europe, the second largest market, only managed 1% growth. This was offset partially by stronger growth in Japan. In the United States, overall economic prospects remain sanguine and corporate spending robust, especially in funding new projects to acquire new capabilities and tools. At the same time, U.S. corporate buyers are putting tremendous pressure on vendors to reduce prices for commodity services, such as infrastructure outsourcing. In Western Europe, services revenues are beginning to show signs of recovery: because of 1H17's stronger growth, 2017 revenue overall grew by 2.8%, which is in line with 2016. IDC expects Western European services revenues to stabilize further as major economies begin to recover. However, long-term growth prospects for the region are weaker than North America. IDC forecasts the region to grow below 3% annually in the coming years.

In emerging markets, Latin America, Central & Eastern Europe (CEE), and Middle East & Africa (MEA) each grew around 7% in 2H17. In Latin America, most major economies are turning the corner. IDC is seeing healthier IT spending in the region with strong deals in the pipeline from the government sector. CEE and MEA are relatively small markets where a few large IT initiatives can easily drive up growth. Organizations there also have fewer legacy IT assets, which makes adopting digital services much easier.

In Asia/Pacific (excluding Japan) (APeJ), Australia's growth remained at 3.9% in 2H17 with some of the large IT initiatives in 2015 and 2016 ramping up revenues in 2017 and onward. But Australia overall is a large and mature market (second largest in the region after China), dominated by large clients in traditional industries and the procurement focus is primarily on cost cutting and improving operational efficiency. IDC forecasts the country to grow much slower than other APeJ markets (by less than half). Both China and India grew by around 10% in 2H17 due to strong economic growth and large initiatives to invest in new technologies.

Global Regional Services 2H17 Revenue and Year-Over-Year Growth (revenues in $US billions)

| Global Region | 2H17 Revenue | 2H17/2H16 Growth |
| --- | --- | --- |
| Americas | $258.3 | 3.9% |
| Asia/Pacific | $85.3 | 6.2% |
| EMEA | $158.5 | 2.0% |
| Total | $502.1 | 3.6% |

Source: IDC Worldwide Semiannual Services Tracker 2H 2017
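As a rough cross-check of the regional figures in the table above, the short Python sketch below sums the three regional revenues and backs out the overall growth rate they imply. The function name `implied_total_growth` is purely illustrative, and the method (dividing each region's revenue by one plus its growth rate to estimate the prior-period figure) is an assumption of ours, not IDC's published methodology, which works in constant currency and from unrounded data.

```python
# Regional figures copied from the IDC table (revenue in US$ billions,
# growth as a fraction). The helper below is a hypothetical illustration.
regions = {
    "Americas": (258.3, 0.039),
    "Asia/Pacific": (85.3, 0.062),
    "EMEA": (158.5, 0.020),
}


def implied_total_growth(rows):
    """Return (current total, implied year-over-year growth) for the rows."""
    current = sum(revenue for revenue, _ in rows.values())
    # Estimate each region's prior-period revenue from its growth rate.
    prior = sum(revenue / (1 + growth) for revenue, growth in rows.values())
    return current, current / prior - 1


total, growth = implied_total_growth(regions)
print(f"Total 2H17 revenue: ${total:.1f}bn")
print(f"Implied total growth: {growth:.2%}")
```

The regional revenues add up to the $502.1 billion total, and the implied growth lands within about a tenth of a percentage point of the published 3.6%, a gap that is plausibly explained by the rounding of the regional growth rates.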

"The demand for a wide range of digital solutions continues to drive the steady growth in the services markets, but it is still cloud-related services that are having the biggest revenue impact," said Lisa Nagamine, research manager with IDC's Worldwide Semiannual Services Tracker.

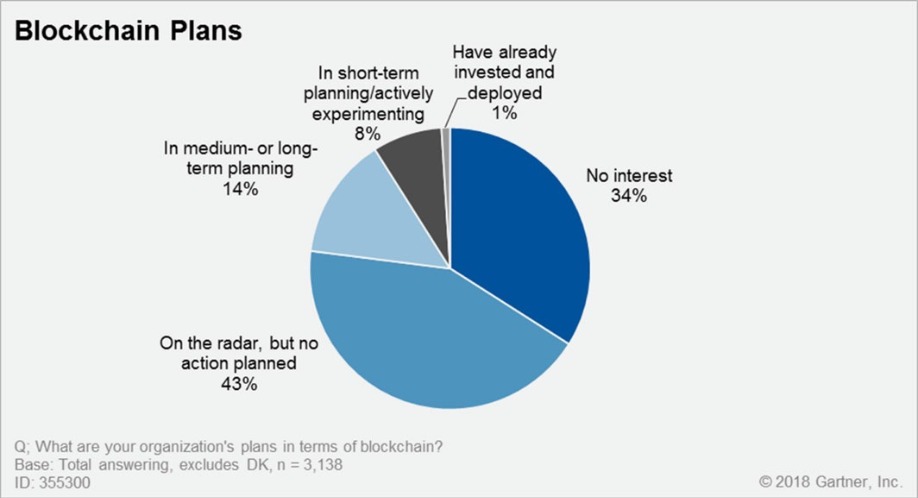

Organizations Should Not Overestimate the Short-Term Benefits of Blockchain

Only 1 percent of CIOs indicated any kind of blockchain adoption within their organizations, and only 8 percent of CIOs were in short-term planning or active experimentation with blockchain, according to Gartner's 2018 CIO Survey. Furthermore, 77 percent of CIOs surveyed said their organization has no interest in the technology and/or no action planned to investigate or develop it.

"This year’s Gartner CIO Survey provides factual evidence about the massively hyped state of blockchain adoption and deployment (see Figure 1)," said David Furlonger, vice president and Gartner Fellow. "It is critical to understand what blockchain is and what it is capable of today, compared to how it will transform companies, industries and society tomorrow."

Mr. Furlonger added that rushing into blockchain deployments could lead organizations to significant problems of failed innovation, wasted investment, rash decisions and even rejection of a game-changing technology.

Figure 1 – Blockchain Adoption, Worldwide

Source: Gartner (May 2018)

The rocky road to blockchain

Among 293 CIOs of organizations that are in short-term planning or have already invested in blockchain initiatives, 23 percent of CIOs said that blockchain requires the most new skills to implement of any technology area, while 18 percent said that blockchain skills are the most difficult to find. A further 14 percent indicated that blockchain requires the greatest change in the culture of the IT department, and 13 percent believed that the structure of the IT department had to change in order to implement blockchain.

"The challenge for CIOs is not just finding and retaining qualified engineers, but finding enough to accommodate growth in resources as blockchain developments grow," said Mr. Furlonger. "Qualified engineers may be cautious due to the historically libertarian and maverick nature of the blockchain developer community."

CIOs also recognized that blockchain implementation will change the operating and business model of the organizations, and they indicated a challenge in being ready and able to accommodate this requirement. "Blockchain technology requires understanding of, at a fundamental level, aspects of security, law, value exchange, decentralized governance, process and commercial architectures," said Mr. Furlonger. "It therefore implies that traditional lines of business and organization silos can no longer operate under their historical structures."

Financial services and insurance companies lead the way

From an industry perspective, CIOs from telecom, insurance and financial services indicated being more actively involved in blockchain planning and experimentation than CIOs from other industries.

While financial services and insurance companies are at the forefront of this activity, the transportation, government and utilities sectors are now becoming more engaged due to the heavy focus on process efficiency, supply chain and logistics opportunities. For telecom companies, interest lies in a desire to "own the infrastructure wires" and grasp the consumer payment opportunity.

"Blockchain continues its journey on the Gartner Hype Cycle at the Peak of Inflated Expectations. How quickly different industry players navigate the Trough of Disillusionment will be as much about the psychological acceptance of the innovations that blockchain brings as the technology itself," said Mr. Furlonger.

Business, governance and operating models that were designed and implemented before digital business will take time to re-engineer. This is because of the ramifications blockchain has concerning control and economics. "While many industries indicate an initial interest in blockchain initiatives, it remains to be seen whether they will accept decentralized, distributed, tokenized networks, or stall as they try to introduce blockchain into legacy value streams and systems," Mr. Furlonger concluded.

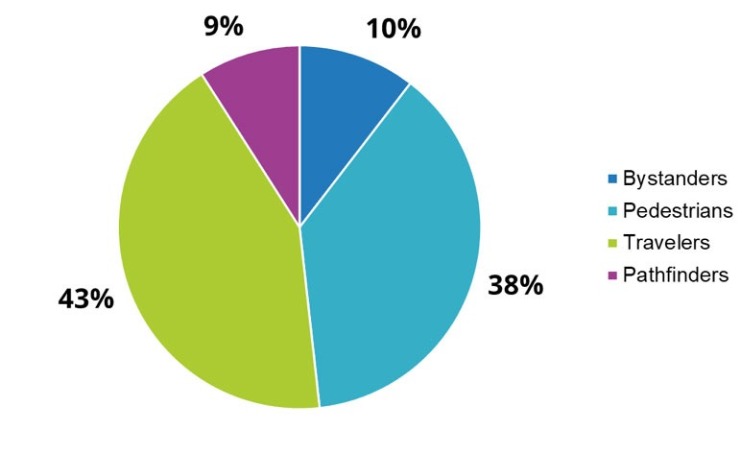

A recent survey by International Data Corporation (IDC) found that only a small minority of European organizations have taken sufficient steps toward establishing a fully fledged multicloud strategy.

"Virtually all European enterprises will soon use multiple cloud services. The smart ones are already actively planning for those services to be benchmarked, price-compared, and selected against each other based on the workload need," said Giorgio Nebuloni, research director, European Multicloud Infrastructure at IDC. "To get there, a central point of control based on software and potentially services is needed, as are strategic approaches to skillsets, processes, and datacenter infrastructure."

Successful multicloud strategies involve tackling business decisions from a spectrum of organizational standpoints. Businesses need to identify which workloads are fit for public cloud and which workloads are better run in a private cloud. An ever-changing cloud landscape means IT buyers also need to constantly evaluate the cloud vendor landscape.

IDC's multicloud survey found varying levels of multicloud understanding and strategy formation among European organizations. Key findings from the survey include:

Multicloud Readiness Among European Organizations in 2018

From Bystanders with basic cloud strategies to Pathfinders with fully fledged multicloud: What proportion of European organizations are multicloud ready?

Source: IDC European Multicloud Infrastructure Survey (March 2018), n = 193, 651

"While the perception of multicloud infrastructure as an end goal certainly resonates with European organizations, there remains uncertainty over what a multicloud strategy looks like and how this strategy should be disseminated within an organization," said Michael Ceroici, research analyst, European Multicloud Infrastructure, IDC. "Elements from infrastructure technology, aligning cloud vision between different business lines, and internal cloud expertise all play a part in facilitating successful multicloud endeavors."

The number of industrial robots in factories around the world has reached a new record of around 1.8 million.

On average, 64 percent of workers around the world take a positive view of "colleague robots" taking over work that is detrimental to health or involves handling hazardous materials. However, employees are worried about how their own training can keep up with the pace of the working world 4.0. These are the findings of the automatica Trend Index 2018. For this representative survey of the population, a market research institute interviewed 7,000 employees in the USA, Asia and Europe on behalf of automatica, the world's leading trade fair for robotics and automation.

When it comes to their own country, only about one in four employees is convinced that training and development already plays a key role in the workplace of the future. This new collaboration with robots is regarded by the majority in all seven countries (average 68 percent) as an opportunity to master higher-skilled work. Particularly in China (86 percent) and in the USA (74 percent), people expect that robotics automation will provide added impetus to further their vocational training. The number of higher-skilled and better paid jobs will rise in the future with the new human-robot teams, according to about one in two survey respondents in Germany, France, Italy, the UK and Japan. In China and the United States, as many as 80 percent of workers presume this will be the case.

The maturity level in training and development

Measured in school grades, the maturity level in training and development for the digital workplace of the future has so far fallen far short of employee expectations: fewer than one in four respondents (23 percent on average) rate their own employer's current offering as good or very good.

Robotics and automation are very popular

Companies can count on a positive basic attitude among their employees regarding robotics and automation. Some 70 percent believe that, in the working world of the future, human-robot teams will improve manufacturing by combining human talents with the strengths of robotics. When people and machines work hand-in-hand without a safety fence, people need talents such as judgement and fine motor skills. The robot can score with power and precision.

64 percent of all workers from the seven countries want to use artificial intelligence (AI) for human-machine collaboration. 73 percent assume that AI makes it easier for people to assign the machine new tasks - for example, via voice command or touchpad.

automatica 2018 shows rapid development to work 4.0

"As the survey shows, employees want more consistent commitments from politicians, industry and science as regards training and development for work 4.0," says Falk Senger, managing director of technology fairs at Messe München. "At the same time, the technological development of intelligent automation and robotics is advancing at a tremendous pace. All aspects of this development will be showcased at the automatica 2018 in Munich from 19 to 22 June."

The results of a large-scale study of more than 36,000 consumers in 18 countries by Verint® Systems Inc., carried out in partnership with Opinium Research LLC, reveal that more than 70% of consumers report an increased use of automation technology, such as artificial intelligence (AI) and robots, in their workplaces.

The study also showed that consumers on the whole are becoming more comfortable with automation in the workplace. Consumers in Mexico (85%), Brazil (84%) and India (83%) reported the highest levels of automation in the workplace. Nearly 7 out of 10 (69%) U.S. consumers said processes are being automated by technology, while those in Sweden and Japan (both at 55%), and Germany, Denmark and the UK (all at 61%) reported the lowest levels of automation.

Approximately half of respondents (49%) said that technology, such as AI and robots, helps them do their job more effectively. Those in countries with the highest adoption of technology were more disposed to view automation in a positive light, with 76% of Indians, 70% of Brazilians and 65% of Mexicans believing that it helps them do their job more effectively. Brits (25%), Swedes (29%), Belgians (32%) and Canadians (36%) had the lowest response to the role of technology in the workplace. In the U.S., 43% of respondents said technology helps them perform their jobs more effectively.

This fresh research raises questions about what the workplace of the future may look like and suggests the line between the workplace and home will continue to blur. More than two-thirds (67%) of respondents said they expect workplaces to be more flexible to suit employee preferences as technology improves, and 62% said technology is already making it easier for them to work from home or have more flexible work hours.

Trends expert James Woudhuysen, visiting professor of forecasting and innovation at London South Bank University, shares that “In both developed and developing economies, customer service forms a rising share of employment and GDP. Though organizations will automate many of today’s customer service tasks, there will be plenty of new jobs in this sector. These jobs will just be different. They’ll be more about what machines can’t do. In 2030, for example, direct human interaction in customer service will need to combine wisdom with the latest soundings of the public mood. In this way, companies can make the right call—a feat that technology, automation and systems won’t, by themselves, ever be able to achieve.”

“Technology continues to shape the way people engage with organizations, both as customers and employees. However, organizations must strike the right balance between technology and automation on one hand and human interactions on the other,” says Verint’s Ryan Hollenbeck, senior vice president of global marketing and executive sponsor of the Verint Customer Experience Program. “Automation technology, in particular, opens new possibilities for how people work, as well as how they meet service level requirements.”

In fact, automation can be seen as a benefit in its ability to handle mundane, repetitive tasks and processes, and manage them in a timely, compliant way. It frees employees for more meaningful activities and lets them work in more flexible ways, while empowering consumers to self-serve through the channel, place and time of their choosing. For effective rollout strategies and adoption techniques, Hollenbeck notes that “The key will be for organizations to engage employees throughout the implementation process of automation and robotics initiatives, explaining and demonstrating the value of technology, alongside human intelligence and emotion.”

This year’s DCS Awards – as ever, designed to reward the product designers, manufacturers, suppliers and providers operating in the data centre arena – took place at the, by now, familiar, prestigious Grange St Paul’s Hotel in the City of London. Host Paul Trowbridge coordinated a spectacular evening of excellent food, a prize draw (with champagne and a team of four at the DCA Golf Day up for grabs), excellent comedian Angela Barnes, music courtesy of Ruby & the Rhythms and, of course, the reason almost 300 data centre industry professionals attended – the awards themselves. Here we salute the winners.

The next Data Centre Transformation event, organised by Angel Business Communications in association with DataCentre Solutions, the Data Centre Alliance, The University of Leeds and RISE SICS North, takes place on 3 July 2018 at the University of Manchester.

The programme is nearly finalised (full details via the website link at the end of the article), with some top-class speakers and chairpersons lined up to deliver what is probably 2018’s best opportunity to get up to speed with what’s heading to a data centre near you in the very near future!

For the 2018 event, we’re taking our title literally, so the focus is on each of the three strands of our title: DATA, CENTRE and TRANSFORMATION.

This expanded and innovative conference programme recognises that data centres do not exist in splendid isolation, but are the foundation of today’s dynamic, digital world. Agility, mobility, scalability, reliability and accessibility are the key drivers for the enterprise as it seeks to ensure the ultimate customer experience. Data centres have a vital role to play in ensuring that the applications and support organisations can connect to their customers seamlessly – wherever and whenever they are being accessed. And that’s why our 2018 Data Centre Transformation Manchester will focus on the constantly changing demands being made on the data centre in this new, digital age, concentrating on how the data centre is evolving to meet these challenges.

We’re delighted to announce that GCHQ have confirmed that they will be providing a keynote speaker on their specialist subject – security! Has IT security ever been so topical? What a great opportunity to hear leading cybersecurity experts give their thoughts on the issues surrounding cybersecurity in and around the data centre.

We’re equally delighted to reveal that key personnel from Equinix, including MD Russell Poole, will be delivering the Hybrid Data Centre keynote address. If GCHQ knows about cybersecurity, it’s fair to say that Equinix are no strangers to the data centre ecosystem, where the hybrid approach is gaining traction in so many different ways.

Completing the keynote line-up will be John Laban, European Representative of the Open Compute Project Foundation.

Alongside the keynote presentations, the one-day DCT event will include:

A DATA strand that features two workshops - one on Digital Business, chaired by Prof Ian Bitterlin of Critical Facilities and one on Digital Skills, chaired by Steve Bowes Phipps of PTS Consulting.

Digital transformation is the driving force in the business world right now, and the impact that this is having on the IT function and, crucially, the data centre infrastructure of organisations is something that is, perhaps, not as yet fully understood. No doubt this is in part due to the lack of digital skills available in the workplace right now – a problem which, unless addressed, urgently, will only continue to grow. As for security, hardly a day goes by without news headlines focusing on the latest, high profile data breach at some public or private organisation. Digital business offers many benefits, but it also introduces further potential security issues that need to be addressed. The Digital Business, Digital Skills and Security sessions at DCT will discuss the many issues that need to be addressed, and, hopefully, come up with some helpful solutions.

The CENTRE strand features two workshops: one on Energy, chaired by James Kirkwood of Ekkosense, and one on Hybrid DC, chaired by Mark Seymour of Future Facilities.

Energy supply and cost remain a major part of the data centre management piece, and this track will look at the technology innovations that are impacting on the supply and use of energy within the data centre. Fewer and fewer organisations have a pure-play in-house data centre real estate; most now make use of some kind of colo and/or managed services offerings. Further, the idea of one or a handful of centralised data centres is now being challenged by the emergence of edge computing. So, in-house and third party data centre facilities, combined with a mixture of centralised, regional and very local sites, make for a very new and challenging data centre landscape. As for connectivity – feeds and speeds remain critical for many business applications, and it’s good to know what’s around the corner in this fast moving world of networks, telecoms and the like.

The TRANSFORMATION strand features workshops on Automation (AI/IoT), chaired by Vanessa Moffat of Agile Momentum, and The Connected World, together with a keynote on Open Compute from John Laban, the European representative of the Open Compute Project Foundation.

Automation in all its various guises is becoming an increasingly important part of the digital business world. In terms of the data centre, the challenges are twofold. How can these automation technologies best be used to improve the design, day to day running, overall management and maintenance of data centre facilities? And how will data centres need to evolve to cope with the increasingly large volumes of applications, data and new-style IT equipment that provide the foundations for this real-time, automated world? Flexibility, agility, security, reliability, resilience, speeds and feeds – they’ve never been so important!

Delegates select two 70 minute workshops to attend and take part in an interactive discussion led by an Industry Chair and featuring panellists - specialists and protagonists - in the subject. The workshops will ensure that delegates not only earn valuable CPD accreditation points but also have an open forum to speak with their peers, academics and leading vendors and suppliers.

There is also a Technical track where our sponsors will present 15 minute technical sessions on a range of subjects. Keynote presentations in each of the themes together with plenty of networking time to catch up with old friends and make new contacts make this a must-do day in the DC event calendar. Visit the website for more information on this dynamic academic and industry collaborative information exchange.

OpenStack has matured and become the open infrastructure platform of choice across the business world, highlighted by major deployments from leading organizations such as Verizon, BBVA, and NASA Jet Propulsion Laboratory, as well as continued growth in the contributing community. But what’s next?

By Radhesh Balakrishnan, general manager, OpenStack, Red Hat.

Whilst it’s nice to see the success of OpenStack in the enterprise, the community cannot rest on its proverbial laurels. Containers are a key target, which the OpenStack community and ecosystem need to focus on next.

Containers are an application packaging technology that allows for greater workload flexibility and portability. Support for containerized applications will be key to OpenStack moving forward, especially as enterprise interest intersects both Linux containers and OpenStack.

The success of containers should not be ignored. A study undertaken by 451 Research estimates containers will be a $2.7 billion market by 2020, playing a prominent role in cloud technologies going forward. They are already being used to help solve real business problems and drive business value across a variety of industries. We see four main drivers for businesses deploying containers. To run apps better; to build apps better (particularly making use of microservices); to enhance infrastructure and take advantage of hybrid cloud; and to enact large scale business transformation.

At some point, containers will be universally portable, but this isn’t the case yet. For containers to be truly portable, there needs to be an integrated application delivery platform built on open standards and providing consistent execution across different environments. Containers rely on the host OS and its services for compute, network, storage and management, across physical hardware, hypervisors, private clouds and public clouds. The ecosystem is the key here: there need to be industry standards for image format, runtime and distribution in order for universal portability to become possible.

This need is recognised by the industry and relevant communities who formed entities to define and evolve these standards, such as the Open Container Initiative and Cloud Native Computing Foundation.

OpenStack services -- like Neutron for networking and Cinder for block storage -- can already be abstracted and made available via containers and platforms based on Kubernetes (container orchestration). Container technology enables the use of underlying resources to deploy, upgrade and scale the OpenStack control plane effectively. While this is important, as container-based infrastructure grows, it’s not as critical as the second need: running containers themselves on the OpenStack “tenant” side.

While containerized applications are, at their core, applications, they have a different set of needs than the traditional, typical virtual machine-based cloud applications that one would usually see on an OpenStack-based cloud.

To make OpenStack more container friendly, we need to better expose the underlying plumbing of OpenStack, the networking, storage and management pieces comprising the framework, to container technologies, so that the value of the healthy OpenStack ecosystem accrues without any “tax”. Also, OpenStack services, such as Keystone for authentication or Cinder block storage to provide persistent storage for stateful applications, can be leveraged by container-based applications without the need to reinvent the wheel. We’re already seeing the community rallying around this with projects like Kuryr, but these efforts need to be expanded and delivered as supported products in the future.

As the history of computing has shown, “and” is the most powerful value proposition to customers to offer investment protection and business continuity. By focusing on bringing the worlds of containers and OpenStack together, the community can help get us to a unifying open infrastructure management layer that spans physical, virtual and container environments, thereby getting us closer to fulfilling the vision of an open hybrid cloud.

Stories about various industries and companies going digital cram the headlines. For example, airlines are readying for the digital shift to tackle increasing air traffic; meanwhile, Marks & Spencer has hired a principal technology partner to ‘drive digital-first.’ At the centre of all this is the need to boost productivity.

By Nick Pike, VP UK and Ireland, OutSystems.

Many companies still use inefficient manual processes instead of automation, however. Building apps to eliminate paper and streamline operations provides the obvious advantage of saving time, but it can also uncover new insights for continuous improvement and competitive advantage. But after the apps are developed, the process of moving them to operations can encounter a bottleneck in the form of another set of inefficient practices. That’s where DevOps enters the picture.

Rather than being a skill, a position you can hire in your IT department, or a specific tool you can purchase, DevOps is a philosophy. The goals are to increase the value software brings to customers and to enable organisations to react faster to change in the business.

Here are nine steps for developing a DevOps mindset in your organisation:

1. Define and validate the expected impact of development on IT before you start.

2. Automate infrastructure provisioning to boost the speed of your development and testing.

3. Mimic production as closely as possible in all non-production environments.

4. Anticipate failure by testing scenarios in a self-healing infrastructure.

5. Continuously integrate your code during app development.

6. Register your configurations in a shared central repository and track every application change.

7. Orchestrate deployment with automation that defines all steps of the process for each application.

8. Measure app health and performance and the impact of your apps on your business and customers once they reach production.

9. Make sure feedback you collect is shared with all stakeholders.

Let’s take a look at these in closer detail and see how they build on each other to accelerate transformation. Impact analysis is a handy tool to get you started; it validates if a deployment can be completed without affecting other applications running in the target environment. This process determines global dependencies and makes sure that nothing breaks when the application goes live.
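As a rough illustration of the dependency check behind impact analysis, the Python sketch below walks a dependency map to list every application that could be affected when one of them changes. The application names and the `impacted_apps` helper are hypothetical, and a real platform would discover dependencies automatically rather than from a hand-written dictionary.

```python
from collections import defaultdict, deque


def impacted_apps(dependencies, changed):
    """Return every app that depends, directly or indirectly, on `changed`."""
    # Invert the map so we can ask "who depends on this app?"
    dependents = defaultdict(set)
    for app, deps in dependencies.items():
        for dep in deps:
            dependents[dep].add(app)

    # Breadth-first walk outwards from the changed app.
    seen = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for app in dependents[current]:
            if app not in seen:
                seen.add(app)
                queue.append(app)

    seen.discard(changed)  # only relevant if the map contains a cycle
    return seen


# Hypothetical target environment: app -> apps it depends on.
dependencies = {
    "billing": ["auth", "ledger"],
    "portal": ["billing"],
    "reports": ["ledger"],
    "auth": [],
    "ledger": [],
}

print(sorted(impacted_apps(dependencies, "ledger")))
```

An empty result suggests the deployment touches nothing else in the environment; a non-empty one gives the list of applications to re-test before going live.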

Then, infrastructure automation brings agility to both development and operations. By describing your infrastructure as a set of scripts, you can replicate environments with significantly fewer errors. Self-healing capabilities automatically correct problems or inform developers of any corrections they must handle. It automatically validates changes as you go.

In the testing phase, depending on complexity and how critical each project is, the delivery team should apply different types of testing. We recommend unit testing or using behaviour-driven development and test-driven development practices.

Then, of course, you will want to measure your performance and the impact on customers and share findings with stakeholders. If all has gone well, the results can be remarkable. For example, BlueZest, a technology-based specialised property investment funder, released its digital mortgage underwriting application in October 2017. Now, BlueZest is citing speeds as fast as 15 minutes for initial loan quotes and 30 minutes for confirmed offers.

Ultimately, DevOps is all about bridging the gap between development and operations. Low-code development platforms offer capabilities that enable these nine steps so your organisation can cross that chasm.

Examples include a unified application lifecycle management console, embedded version control and build server with continuous integration, built-in monitoring and analytics, high-availability configuration, and centralised configuration management.

Combining the speed of low-code visual development with the agility of DevOps can greatly accelerate digital transformation, thereby enabling organisations to reap the efficiency, productivity, and competitive benefits quickly.

More and more businesses are realising the incredible value that the Internet of Things (IoT) can add to their operations.

By Abel Smit, IoT Consulting and Customer Success Director, Tech Data Europe.

This opportunity stretches across multiple industries including manufacturing, insurance, transport, the provision of government and public services, utilities, healthcare – the list goes on. Chances are, whatever your business does there’s an opportunity for IoT to transform it, and there’s an opportunity for the channel to help guide your business through this process.

IoT in practice.

The potential uses of IoT in everyday life are wide ranging. For example, intelligent systems can greatly improve the management of our transportation systems, automating simple and repetitive tasks to allow more time to be spent on developing and improving services. Cities across Europe have invested in connected signalling solutions to better promote the flow of traffic, specifically for buses and bikes. Public transport vehicles can communicate their position, number of passengers and any delays they are experiencing with smart traffic signals to request priority signalling, saving valuable time on journeys for people using environmentally responsible means.

IoT has also seen widespread adoption in manufacturing, with IDC forecasting that this will be where the most investment will be made in 2018. The primary use of IoT in manufacturing is for predictive maintenance, where IoT sensors monitor various inputs and outputs to track the wear-and-tear of machinery. This allows manufacturers to repair or replace parts before they break, avoiding critical failure, which causes unplanned disruptions to production and incurs significant costs for manufacturers. The data from IoT sensors is also often used to track performance, allowing manufacturers to understand what is normal for that piece of machinery and create an automated system that will halt production if an abnormal circumstance arises, preventing mistakes and the waste of valuable materials.
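To make the idea of learning what is normal for a machine a little more concrete, here is a minimal Python sketch. It assumes a simple baseline of mean and standard deviation over known-good sensor readings and flags anything more than three standard deviations away; the readings, the tolerance and the function names are illustrative assumptions, and production systems typically use richer models.

```python
from statistics import mean, stdev


def learn_baseline(readings):
    """Summarise known-good readings as (centre, spread)."""
    return mean(readings), stdev(readings)


def is_abnormal(value, baseline, tolerance=3.0):
    """True if `value` lies more than `tolerance` spreads from the centre."""
    centre, spread = baseline
    return abs(value - centre) > tolerance * spread


# Hypothetical vibration readings taken while the machine was healthy.
normal_vibration = [0.48, 0.52, 0.50, 0.49, 0.51, 0.50, 0.47, 0.53]
baseline = learn_baseline(normal_vibration)

# Hypothetical live readings; stop at the first abnormal one.
for reading in [0.51, 0.49, 0.92]:
    if is_abnormal(reading, baseline):
        print(f"halt production: reading {reading} is outside the normal range")
        break
```

The same check can drive a maintenance ticket rather than a hard stop, depending on how costly a false alarm is for the line in question.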

IoT is also playing a critical role in digital twinning, a technique that has practical applications in a range of industries, from predictive maintenance to design and architecture, and even managing major logistics operations. Digital twinning is the process of taking a physical asset and creating an exact copy in the digital world, informed by data collected by IoT sensors. Where previously design components had no intelligence, digital twinning allows you to assess how these components will react to different environmental conditions without impacting the physical asset. The clear advantage is that experimenting in the digital world is much less destructive than doing so in the physical, with the capability to do so at enormous scale.

These models can also be monitored using real-time data, allowing for rapid diagnostics and prognostics of assets. This provides actionable insights to engineers and maintenance staff and prevents mistakes and breakdowns from happening, saving costs on materials and avoiding the costly business interruption that breakdowns and unplanned maintenance entails. The application of IoT in this regard has proven particularly useful in instances where the machinery being monitored is difficult to reach, such as on a wind turbine or oil rig.

The challenges of realising this potential.

However, deploying and securing all of these sensors can represent a significant challenge. Firstly, if you have several devices from multiple vendors all using different communication standards, this will present a number of siloed networks that need to be made to understand each other. Also, securing several networks is monumentally more difficult than securing just one. Working with the right channel partner is crucial to alleviating these problems. The channel has the right expertise to consolidate disparate vendor technologies and manage the difficulties and vulnerabilities that present themselves in any complex IoT solution. With all of the necessary components in an IoT solution, another major obstacle to fully realising the potential of the IoT is addressing the current skills gap. Identifying productive opportunities to leverage IoT, creating and securing networks, and capitalising on the data the IoT produces, requires expert knowledge and insight. Businesses should spend time to understand what core skills they need. Do they need knowledge of gateways and sensors for producing data? Is expertise in networking security and the cloud necessary to ensure information produced gets to the data centre?

The necessary skills for the IoT world.

Typically, data is being augmented with other data in the organisation – for example, in a smart building, data related to temperature and lighting will be compared against the number of people in different rooms, desired energy performance and the day of the week – which will require knowledge of databases, software integration and even middleware. Naturally, data that can’t be understood is useless, so you’ll also require knowledge of creating dashboards that enable the actionable insight you created your IoT system for in the first place.

Having all of these skills under one roof is a luxury that most businesses cannot afford and ought not to attempt. Fortunately, the channel offers businesses a wealth of partners with complementary skills that can fill gaps in expertise, covering a broad spectrum of industries and markets, poised to augment customers’ knowledge and help leverage IoT technology to transform business processes and become a market leader.

Paired with visionary thinking, IoT has the potential to, and almost certainly will, reinvent numerous industries. IoT presents businesses with an enormous opportunity to improve productivity, reduce errors, drive to greater efficiencies and do so in a more environmentally sustainable manner. In turn, this opens up a wealth of possibilities for the channel to discover new and innovative ways to deliver IoT solutions to customers, and meet their growing technology demands. Ultimately, the allure of this potential is too great for anyone to ignore.


On the road to digital transformation, there are many challenges that organisations face in re-platforming the IT infrastructure. But, no matter what problem your organisation is facing, remember: you’re not alone.

By Pinakin Patel, Senior Director EMEA Solutions Engineering at MapR.

These once-in-a-generation overhauls are never easy. IT management must deal with legacy architecture that is unable to meet the high scale / low frequency requirements of emerging technologies, as well as challenge the legacy “thinking” and skill set.

This multifaceted challenge must be overcome to capture the potential value of emerging technologies like cloud computing, big data analytics and containers.

Cloud Computing

Developer productivity is one of the most compelling advantages of cloud computing: providing the ability to quickly spin up their own instances, provision the tools they need, with the added flexibility to scale up or down as required.

Data gravity – which refers to how heavy data becomes as it grows – and cloud neutrality – which prevents vendor lock-in – should be important considerations for cloud computing. These considerations are key to optimize TCO and agility in the long run. Point solutions look appealing at the start of a project and become a pain point later.

Big Data Analytics

The vast amount and variety of semi and unstructured data, such as that created by IoT devices, presents one of the biggest challenges in terms of big data. Legacy RDBMS is unable to aggregate, store and process this data efficiently, especially in high data volumes, which continue to grow at an unprecedented rate. Managing the immense pressure of dealing with the variety and volume of data, alongside expectations to deliver greater personalisation and improvements to the customer base, often results in the creation of a complex and fragile architecture with lots of components that is difficult to maintain.

The constant new releases in this highly dynamic environment also keep adding new challenges to managing the big data infrastructure: from data storage concerns, which often lead to the tricky construction of data lakes that can collect and store data in its native formats, to the conundrum of finding systems capable of managing mega-data streams from disparate sources.

With the situation further aggravated by external factors, like the big data skills shortage, as well as increasingly arduous security and privacy concerns, managing and driving an effective ROI from big data efforts is no mean feat.

Containers

For those developers shifting between on-premise, private cloud and public cloud architecture, containers are ideal. Independent of the underlying infrastructure and operating system, they can be moved with minimal disruption.

Containers can be thought of as an operating system virtualisation where workloads share operating systems (OS) resources. Adoption has accelerated of late, with impressive acceptance. In fact, in a global study of 1,100 senior business and IT executives, 40 percent reported already using containers in production – half of those with mission-critical workloads. And just 13 percent have no plans to use containers this year.

Containers do what virtual machines (VMs) can’t do in a development environment, with the ability to be instantly launched or abandoned in real time. Unlike with VMs, in the container environment, there is no requirement for OS overhead. Containers are also fated to play a significant role in facilitating a seamless transfer of development from one platform or environment to another.

However, containers come with their own issues and challenges. Firstly, most containerised applications are currently stateless, which could create an issue for stateful applications. However, there are workarounds, such as leveraging a converged data platform, which can provide the reliable storage to support stateful applications.

Furthermore, as with many big data platforms, containers raise data security concerns. This is not unusual for relatively new technologies when deployed in critical areas, like application development. A simple example is a case in which two different containerised applications are launched on the same physical server, with both applications using the local server storage. If one of those applications were a web server and the other a financial application, an issue with regard to sensitive information could arise, so further efforts must be made to properly secure data.

Adopting a persistent data store that has built-in security can minimise risk. Such a store should have a certified, pre-built container image with predefined permissions, secure authentication at the container level, security tickets, encryption and support for configuration with Dockerfile scripts.

Make the journey together

Despite the multifaceted challenges that organisations will face on the road to digital transformation, fear can’t prevent the IT team from getting into these technologies.

Ensuring you’re well-educated on the state of these emerging technologies, the challenges that others have faced in implementing their solutions, as well as their tips and tricks in achieving an effective deployment will help ensure your own adoption is as smooth as possible.

Augmented reality (AR) is re-shaping our use of technology. Consider how quickly we have moved from typing on PC keyboards, to the smartphone’s tap and swipe and on to simply using voice commands to ask Alexa or Siri to answer our questions and help us get things done. Now AR brings us to the age of holographic computing, providing a captivating, futuristic user interface alongside Animojis, Pokémon and face filters.

Whereas textbook holography is generated by lasers, holographic computing is coming to us now through the mobile devices in the palm of our hands. As a result, we are now witnessing a surge in the use of hologram-like 3D – and to Cook’s point, it will completely change how we interact with businesses and each other.

The evidence is everywhere. The release of Apple’s iOS 11 puts AR into the hands of over 400 million consumers. The new iPhone X is purposely designed to deliver enhanced AR experiences with 3D cameras and “Bionic” processors. Google, meanwhile, recently launched the Poly platform for finding and distributing virtual and augmented reality objects, while Amazon released Sumerian to facilitate creation of realistic virtual environments in the cloud. We are also in the midst of an AR-native content creation movement, with a steady stream of AR features coming from Facebook, Snapchat, Instagram, and other tech players.

The instantly engaging user experiences of 3D are obviously attractive for gaming and entertainment, but are capable of so much more, particularly in the training and customer-experience sectors where the technology is already making substantial in-roads.

In training, holography is useful for virtual hands-on guidance to explain a process, complete a form or orient a user. It also can effectively simulate real-life scenarios such as sales interactions or emergencies.

Holographic computing interfaces add new dimensions to traditional instruction methods. AR enhancements can be overlaid for greater depth and variety in information presentation, such as floating text bubbles to provide detail about a particular physical object. They can generate chronological procedure-mapping for performing a task, or virtual arrows pointing to the correct button to push on a console.

There are countless opportunities for adding more digital information to almost anything within range of a phone camera. Why should staff travel to a classroom if an interactive, immersive 3D presentation can be launched on any desk, wall, or floor and “experienced” through the screen in the user’s hand? And unlike passively watching video, holographic interfaces add an extra experiential element to the training process. As a result, users can more readily contextualise what they are learning.

In customer experience, consumers are using AR and holographic computing for self-selection, self-service, and self-help. And it will not be long before the range of uses expands. IKEA’s AR app, for example, lets a customer point a phone at their dining room to see how a new table will look in the space. Taking this further, it should be possible to point the phone at the delivery box in order to be holographically guided through the assembly process when the table is delivered.

Holographic computing will also emerge as the preferred means for obtaining product information and interacting with service agents. Walk-throughs of hotel rooms and holiday destinations with a 3D virtual tour guide, travel planner or salesperson are also not too far away.

There are other appealing use cases, of course. And as adoption and implementation spread, there will be many instances where this new user interface is preferable and will quickly become standard.

Along with the Apples, Googles and Facebooks of this world, there are a number of new entrants to the AR arena. The sheer amount of money being thrown at speedy development shows that the ultimate nature of the holographic user interface is up for grabs. Will it remain phone-based or involve glasses? Will it shift to desktop or evolve beyond our current hardware, to be integrated into on-eye projection technology? Or will it be all of the above – who can say?

The one certainty is that significant brain-power is being invested by companies of all types in the development and application of this emerging technology. The increased dispersal of AR experiences in all their incarnations, combined with the mounting accessibility afforded by our smartphones, will drive mass-adoption and widespread affinity for the holographic interface.

The Internet of Things (IoT) is arguably one of the biggest technology revolutions to date, with the global IoT market set to grow to $457.29 billion by 2020, and the number of connected devices expected to hit 31 billion.

By Jason Kay, Chief Commercial Officer, IMS Evolve.

This impressive figure doesn’t come without warrant; a range of organisations across a variety of sectors are seeing the benefits of adopting IoT solutions, particularly within the cold food supply chain. However, these benefits are only accessible if deployments can first make it past the Proof of Concept (PoC) stage. To achieve that, organisations need to gain consensus for business-wide collaboration across all departments and also take a ‘business-first’ approach to guarantee the future success of their project.

Obstacles

IoT solutions are developed with the aim of improving business efficiency and increasing value for adopters; however, without the correct application, the full potential of IoT is not yet being realised by many businesses. Organisations may have utilised an IoT deployment throughout their infrastructure, but if it lacks the correct focus, IoT projects can fail to make it past the PoC stage: 60 per cent fail, to be exact, as reported by the latest Cisco IoT deployment survey. This puts the growth rate of IoT at risk, as businesses could become hesitant to adopt this technology for all the wrong reasons.

In actual fact, the opportunities presented by IoT can be revolutionary for companies when utilised in the most suitable way for their respective business models. All that is required is a change in mindset towards where, and how, IoT solutions are deployed. The focus must not be on what an IoT solution can do; it should first be on what the business needs and where technology can be applied to help. This will ensure that IoT solutions are primarily focussed on solving specific, pre-identified issues, and guarantee a Return on Investment (ROI). Furthermore, through collaboration at all levels between the organisation and technology vendor, the solution can be applied effectively from the beginning, to work towards a successful and scalable IoT project and leverage the value released at the beginning to invest in further digital innovation.

With this change in perspective, organisations can approach IoT deployments with confidence, knowing IoT can provide invaluable benefits, including streamlined efficiency and greater insight into data. This emphasis on issue-specific solutions is crucial to ensure the growth of the IoT market continues at its predicted rate.

Transformative applications

It’s up to businesses to prioritise which industry issues they tackle through the application of this form of digitisation; for example, within the food retail sector, food waste has received high levels of media attention for a clear reason – there’s an ever-increasing amount of it. The world today has a growing problem with food, in that we produce enough to feed 12 billion people – far in excess of the 7 billion worldwide population – yet more than 1 billion people are underfed. The UN predicts that if the planet stays on this current path, by 2050 there will be a global food crisis, and by 2027, the world could be facing a 214 trillion calorie deficit. To counter this threat, there need to be significant changes that extend throughout the supply chain, such as the adoption of advanced IoT.

Approaching the food supply chain with this form of digitisation, and with a ‘business-first’ approach, retailers can work to reduce their food wastage sustainably. By layering IoT over existing infrastructure, IoT capacity can be achieved at pace with minimal downtime; and specifically within the cold food supply chain, IoT can be utilised to gain real-time visibility over temperatures of units within the entirety of the supply chain. This unlocks the ability to monitor and control temperatures from your devices in real time. Utilising this enhanced insight, visibility, control and automation to manage and maintain the temperature and performance of the refrigeration estate can also ensure the safety and quality of the food products within the units and ultimately help to reduce food wastage.

For example, if a retailer knows which food products are in which unit, the temperature of that unit can be specifically tailored to suit the contents within, rather than compromising food quality and safety because the unit was set to the lowest temperature required by the most susceptible food product – meat. Over-chilling of this kind can lead to the common issue of watery yoghurt, among other things, which can drastically impact consumer satisfaction with the end-product.

Furthermore, the use of predictive maintenance and asset monitoring can avoid equipment downtime, which can have a significant impact on food wastage. Instead of faulty units only being noticed by a person in store after they have already been faulty for a period of time, the technology can predict and provide insight into asset conditions, and either make automated adjustments or greatly decrease the time it takes to repair them.
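A minimal Python sketch of this kind of content-aware setpoint follows. It assumes each product has an acceptable temperature range and picks the midpoint of the range shared by everything in the unit. The product ranges, the `SAFE_RANGE_C` table and the `unit_setpoint` function are made-up illustrations, not food-safety guidance or any vendor's actual logic.

```python
# Hypothetical acceptable storage ranges in degrees Celsius (illustrative only).
SAFE_RANGE_C = {
    "meat": (0.0, 2.0),
    "yoghurt": (2.0, 5.0),
    "salad": (3.0, 6.0),
}


def unit_setpoint(contents):
    """Pick a setpoint inside the range shared by every product in the unit."""
    if not contents:
        raise ValueError("unit is empty; no setpoint needed")
    lows, highs = zip(*(SAFE_RANGE_C[product] for product in contents))
    low, high = max(lows), min(highs)
    if low > high:
        # No single temperature suits everything: the products should be split.
        raise ValueError(f"no shared temperature range for {sorted(contents)}")
    return (low + high) / 2


print(unit_setpoint(["yoghurt", "salad"]))  # dairy-and-salad unit
print(unit_setpoint(["meat"]))              # meat-only unit
```

A unit holding only yoghurt and salad is then run warmer than a meat unit, instead of every unit defaulting to the coldest requirement.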

By utilising a solution such as this, retailers can decrease their food waste considerably, whilst also utilising demand side response to reduce energy consumption, another way to not only save on costs, but actually generate revenue, whilst also becoming more sustainable and lowering the negative impact on the environment.

Critically, this isn’t being achieved through a costly rip and replace model, but instead through the utilisation of legacy infrastructure. It’s simply not practical for retailers to remove and redeploy entire control infrastructures across multiple locations, as the impact on both the consumer and profit would be hugely damaging. Instead, through the use of existing technology and edge-based computing, IoT capacity can be achieved at minimal cost and in the shortest time possible.

Collaborative steps forward

With the right approach to the digitisation of supply chain processes, and collaboration with multiple parties to work towards mutually beneficial outcomes, there is a clear opportunity to have a significant impact on the levels of food waste produced within the industry. The ability to enrich multiple data streams and business processes across teams can have numerous benefits that can revolutionise and strengthen business operations.

Involving key business stakeholders in the project from the start, from initial PoC through to the wider corporate objectives, will promote the highest levels of confidence and help to transition away from the negative ‘silo’ effect. In today’s digital era, collaboration and an integrated approach to IoT are the way to ensure a successful, scalable and agile deployment for organisations, while achieving ROI and also unlocking the opportunities IoT can offer to address the wider economic and environmental challenges.

In these challenging times for traditional media, publishers are always looking for new ways to generate income – but Salon’s latest scheme is unusual even by the industry’s standards.

By Matt Walmsley, EMEA Director at Vectra.

The publication announced in February that any reader who uses ad blockers will be barred from accessing content unless they agree to let Salon harness their computer to mine the cryptocurrency Monero. Those who argue that the publication will only raise a pittance from this initiative are missing the point. The hidden commercial value lies not in treating these visitors as surreptitiously outsourced virtual gold diggers, but in deterring them from using ad blocking software in the first place.

There are few better deterrents than hijacking visitors’ devices to mine Monero or Bitcoin. That’s because cryptocurrency creation is seriously bad news for anyone who prizes a decent user experience: it decreases the speed and efficiency of your device, and could even damage the hardware. It’s expensive, too; in fact, it often costs more in electricity to mine Monero than the currency is actually worth.

Finally, and most worryingly, these unwelcome visitors may gain access to systems and devices through existing backdoors or vulnerabilities. Hackers can use these systemic weaknesses to steal corporate or personal data, infiltrate networks to install ransomware and botnets – or simply re-sell their access and control to the vast network of cybercriminals active on the dark web. Meanwhile, the victims are left scratching their heads in less-than-blissful ignorance, wondering why their machine is taking an age to perform simple tasks.

Tracking the hackers

It is clear that hijacking users’ devices to mine cryptocurrency is far from being a victimless crime – although it is fast proving to be an incredibly lucrative one. In recent months we have seen companies as diverse as Tesla, YouTube, and a host of public sector organisations (including UK councils and the NHS) fall victim to cryptocurrency mining malware.

The tumble in Bitcoin’s value offers no respite from the scourge of “cryptojacking.” The volatility of these virtual currencies and the ease with which anyone can hijack unsuspecting users’ devices make an attractive proposition for criminals: all they have to do is wait, harvesting coins from thousands of bot-controlled machines, and then cash in once the currency value starts to peak again.

At Vectra, we’ve been keeping a close eye on this phenomenon as detecting cryptomining behaviour often uncovers additional, even more serious security threats. Over the last six months or so, our Cognito Artificial Intelligence (AI) threat detection and response platform has detected an increase in cryptocurrency mining on enterprise devices, closely correlating with the price of Bitcoin. Thanks to techniques incorporating AI and machine learning, Cognito alerted our customers in real-time to a variety of hidden hacks whose sole purpose was to seize control of user machines, either in targeted or opportunistic attacks, to mine Bitcoin and other cryptocurrencies.

In the course of our research, we saw that some industries are particularly prone to cryptojacking, including the education and healthcare sectors. These attacks are spread right across the world, with especially high rates of Bitcoin mining detections in wealthier, highly developed nations such as the US, UK, Switzerland, Germany, Singapore and Japan.

Fighting back

Why are these criminals being allowed to act with such apparent impunity, hijacking devices at will and without detection?

In the asymmetric world of cybersecurity professionals and bad actors, it is often the criminals who have the upper hand as they only need to “get it right” once to break in and begin their illicit work. Today, hackers often have the advantage because enterprises still, for the most part, put the majority of their cybersecurity resources into defending their perimeters.

Attempts to catch hackers that have breached their perimeter defences are often doomed to failure because criminals have become so good at cloaking malware – for example, by aping the signatures of known threats.

The fightback against the cryptocurrency miners – and, indeed, other instances of malware and Advanced Persistent Threats (APTs) – must begin by targeting their enduring limitation. While malware can get past perimeter defences by mimicking benign traffic, once within the network it must behave in a predictable way in order to carry out its tasks; for example, by communicating using mining protocols, or by exchanging remote command and control (C2) orchestration signals with the mining botnet.
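To make the idea of "predictable behaviour" concrete, here is a deliberately simple sketch that flags hosts whose outbound traffic contains calls from the Stratum protocol, which Bitcoin-style mining pools use to talk to their workers. It is an illustration only and not a description of how Cognito or any other commercial product works: the function names and the shape of the `flows` input are assumptions, and a miner that encrypts or tunnels its traffic would slip past a payload check like this, which is why real systems look at a much wider set of behaviours.

```python
import json

# Method names used by the Stratum mining protocol (JSON-RPC, one
# message per line over TCP).
STRATUM_METHODS = {
    "mining.subscribe",
    "mining.authorize",
    "mining.submit",
    "mining.notify",
}


def looks_like_stratum(payload: bytes) -> bool:
    """Return True if any line of the payload parses as a Stratum call."""
    for line in payload.splitlines():
        try:
            msg = json.loads(line)
        except ValueError:  # covers bad JSON and undecodable bytes
            continue
        if isinstance(msg, dict):
            method = msg.get("method")
            if isinstance(method, str) and method in STRATUM_METHODS:
                return True
    return False


def suspect_hosts(flows):
    """flows: iterable of (source_host, payload_bytes) pairs (hypothetical
    input format). Returns a sorted list of hosts seen sending
    mining-like traffic."""
    return sorted({src for src, payload in flows if looks_like_stratum(payload)})
```

Fed a capture in which one workstation sends `{"id": 1, "method": "mining.subscribe", "params": []}` while another makes an ordinary web request, `suspect_hosts` returns only the first machine.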

These patterns of behaviour represent attackers’ key weakness; if organisations can immediately spot their tell-tale behaviours, then they will be able to isolate and eradicate them before they wreak havoc. But to do so manually is not a workable solution; it is far too slow (we’re talking about many hours and days), and you cannot cover the entirety of an enterprise network.

This is where AI and automation have such an important part to play. By harnessing the power of these technologies, the latest enterprise threat detection and response systems can find entities inside the network whose behaviours denote a sinister purpose. Another key advantage of this new breed of security system is that it eliminates the flood of security alerts associated with traditional signature-based Intrusion Detection and Prevention Systems (IDPS), many of which turn out to be time-sapping false positives.

By contrast, the new breed of AI and machine learning-powered threat detection and response system deployed inside the enterprise acts as multiple instant “tripwires” that alert system administrators to an unwelcome visitor on the network and deliver immediate insights that identify the behaviours malware cannot hope to hide. Our own experience shows that using AI to analyse attacker behaviour improves response times by a factor of around 30 compared to traditional IDPS alert-based methods.

Cryptocurrency mining in itself is not the very worst thing that can happen to an enterprise, but the fact that hackers can access corporate networks and hijack devices so easily, and control them for so long without detection, is a sign that an enterprise is not in control of its own security, and that presents a far greater risk.

It is time for every enterprise to fight back against the botnets leaching off their processing power and electricity bills – and causing untold damage and risk in the process.

Like that clip in nearly any space-fi movie when the starship goes into hyperspace, it feels like the future is accelerating toward us in a blur. Yet IT leaders are being asked to prepare for change that may be unforeseeable from the CIO’s helm.

By Paul Mercina, Head of Innovation, Park Place Technologies.

The good news is established and emerging solutions can help usher in technologies we haven’t envisioned today. Many of them fall into the fast-gelling field of the software-defined data centre. It’s a concept gaining traction in the market, but the varying maturity levels of its components—from virtual storage to software-defined power—make adoption complex.

Expect the Unexpected

“Future proofing” was once synonymous with long-range planning—essentially, life-cycle management that enables data centre facilities and hardware investments to deliver full value before redevelopment or replacement. The definition has steadily evolved to connote a flexible, resilient architecture capable of supporting accelerated business-driven digital transformation.

Of course, product-specific choices—such as whether to go with up-and-comer Nutanix or the more established EMC for a given appliance—will remain. Those building or retrofitting data centre facilities must carefully consider cabling, power and cooling with a 6- to 15-year horizon in mind.

But even the most rigorous cost-benefit analyses, needs projections and product evaluations won’t by themselves produce a future-proof IT infrastructure. There are simply too many unknowns beyond mere capacity forecasts. Perhaps Benn Konsynski of Emory University’s Business School put it best in an MIT Sloan Management Review paper:

“The future is best seen with a running start….Ten years ago, we would not have predicted some of the revolutions in social [media] or analytics by looking at these technologies as they existed at the time….New capabilities make new solutions possible, and needed solutions stimulate demand for new capabilities.”

If the IT architect or data centre team can’t know in detail what the next revolution will look like—be it in machine learning, artificial intelligence or a field existing only in the most esoteric research—how can a data centre infrastructure conceived today support it down the road?

Enter SDDC

The software-defined data centre (SDDC), in which infrastructure is virtual and delivered via pooled resources “as a service,” has promised to deliver the sought-after agility that businesses require. It offers many advantages in this regard.

Flexible, agile and capable of supporting true ITaaS and rapid or continuous deployment (CD), SDDC may be ideal for businesses needing to “fail fast and fix it” in order to capture or maintain competitive advantage.

The Long and Winding SDDC Roadmap

As a concept, SDDC reaches back to at least 2012, when it became a hot topic at storage and networking conferences. But it’s an evolving field on which many IT pros have nonetheless pinned their hopes.

In 2015, Gartner analyst Dave Russell cautioned that “[d]ue to its current immaturity, the SDDC is most appropriate for visionary organisations with advanced expertise in I&O engineering and architecture.” At the same time, Gartner predicted 75% of Global 2000 enterprises will require SDDC by 2020, putting us about halfway along the trajectory to widespread adoption, at least among large businesses. A 2016 MarketsandMarkets study supported such a “hockey stick” growth chart, projecting a 26.5% CAGR through 2021, with the market reaching $83.2 billion.
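For a sense of scale, the growth projection can be run backwards to see what starting point it implies. The short calculation below assumes the 26.5% rate compounds over the five years from 2016 to 2021; that window is an assumption made for this illustration, as the forecast period is not spelled out above.

```python
def implied_base(final_value, cagr, years):
    """Starting value that grows to final_value at a compound annual rate."""
    return final_value / (1 + cagr) ** years


# Figures quoted above: $83.2 billion in 2021 at a 26.5% CAGR.
# The five-year window (2016 to 2021) is an assumption.
base_2016 = implied_base(83.2, 0.265, years=5)
multiple = 83.2 / base_2016

print(f"Implied 2016 market size: ${base_2016:.1f} billion")
print(f"Growth multiple over the period: {multiple:.2f}x")
```

On those assumptions the market would have been worth a little under $26 billion in 2016, more than tripling in five years.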

By most measures, SDDC is at a transitional phase, where virtual servers and hyperconverged appliances are maturing. But pushing the software-defined model to less charted realms, such as software-defined power and self-aware cooling, remains mostly on the whiteboard.

A conservative adoption strategy can track the changing status of emerging technologies, leaving experimentation to those with the funds and inclination to undertake it and leaving time for winning and losing technologies in each area to shake out. The approach provides greater clarity for those positioning themselves a step back from the bleeding edge.

Server virtualisation, for example, is reaching its peak and has become the de facto choice for organisations of all sizes. Software-defined storage falls in line as well, with technologies frequently offering a favourable upgrade pathway from traditional solutions.

Software-defined networking is gaining stability as the explosion in global IP traffic (projected by Cisco to reach 3.2 zettabytes by 2021), which coincides with rapid growth of east-west data centre traffic, is necessitating advancements. Technologies such as VMware’s NSX and network functions virtualisation (NFV) are deemed by most experts to be mature enough to serve as a primary software-defined-networking backbone.

These developments provide viable SDDC entry points for the enterprise, as well as for IT professionals who want to future-proof their skills. For instance, server virtualisation is no longer optional. Reaching 90% virtualisation is a good indicator that the enterprise is ready for the transition to SDDC.

From there, converged racks of software-defined storage, networking and compute, or hyperconverged appliances, are also enterprise ready. Hyperconvergence, whether in its current vendor-specific form or a more vendor-agnostic iteration, will likely be a requirement as enterprises absorb the flood of data from IoT, respond to accelerating market changes with continuously deployed solutions, and add capacity to serve internal and external customers. Its power comes from being preconfigured and engineered for scalability by adding interoperable modules under a hypervisor for Lego-esque expansion.

Despite its potential, the transition to hyperconvergence may be incomplete until 2025 or later. Even then, the chances for 100% hyperconvergence are essentially nil, as it’s inappropriate for some applications. For example, ROI is best for central workloads but dwindles at smaller scales. As edge computing takes hold, IoT-centric and other technologies that cannot be incorporated into such a virtual box will temper hyperconvergence adoption.

SDDC’s Final Frontier?

An important next stage in SDDC’s implementation will be to bring total virtualisation to the data centre to achieve the full potential of “software-defined everything.” Through virtualisation, utilisation rates and equipment densities have multiplied. In a sense, the space problem in the data centre has been solved. Miniaturisation via on-premises hyperconvergence, especially when paired with cloud overflow, is nearing par with increased demand.

With these changes, the pressure on power and cooling systems has only grown. Emerging possibilities in the area of software-defined power could soon enable use of spare or stranded capacity. With dynamic redundancy, power systems currently experiencing only 50% utilisation may be able to approach 100%, yielding operational and capital savings. Although such developments have been primarily vaporware to date, hyperscaler technology and data centre infrastructure management (DCIM) are just now becoming able to commercialise this next stage of data centre virtualisation.

The Bottom Line