Okay, so no one will buy into a brand new ICT technology without doing some major research and testing, but there’s little doubt that organisations are under increasing pressure to deploy new solutions in a much shorter timeframe than previously. And I’m not talking about the actual time taken between acquiring and commissioning some new servers or a new application. No, I mean that a technology breakthrough would once take years to gain wide-scale acceptance (think about how long Solid State Disk was around before it ‘hit the big time’), because end users were ‘suspicious’ of the new thinking (and yes, the high price tag played a part as well). Now, no sooner has something been launched than there’s real pressure on organisations to start deploying it, if only to stay competitive in the market.

Now, the ‘old-fashioned’ way, as typified by the delightful term ‘server-hugging’, seems remarkably sluggish and uncommercial to our digital eyes. Why hold on to slow, unreliable and very costly bits of metal, when they are being replaced by much faster, much more reliable and less expensive alternatives? Well, in truth, the fear culture held sway. Buying tried and tested technology was the safe option. It might not be the best value for money, but it had served the organisation well for so many years, why ‘risk’ change?

And to the IT traditionalists, the modern way of investing in any and every latest technology development smacks of a high-risk strategy that could fall apart at the seams if the solutions prove to be rather underwhelming once the hype surrounding them starts to fade.

Everyone talks about the tech start-ups who turned the received wisdom on its head and succeeded on a spectacular scale. And almost every industry sector now has one or more major players that are pure-play digital companies, as opposed to legacy organisations that are trying to get to grips with the online, always connected world. So, embracing new ideas and technologies clearly can work, but it’s worth remembering that many of these digital-only organisations were a long time in the planning, and required significant investment funding, plenty of which is still to be paid back.

In summary, there are no short cuts to business success in the digital world, and the recent example of Uber in London makes it clear that people are just as crucial as technology when it comes to business transformation. So, don’t be panicked into making unwise technology investments. Take the time to understand your market and what your customers want from you now, and only then begin to acquire the technologies and personnel to make sure that your business stays relevant, or, better still, leads your industry.

One in four IT organizations in Europe is already operating tiered applications spanning on- and off-premise environments. However, only a few pathfinders are making the necessary technology and process adjustments to make this viable in the long run, according to a recent IDC survey of more than 800 IT and line-of-business decision makers in 11 European countries.

IDC confirmed that the most typical environment spanning across clouds involves front-end applications hosted on a public cloud, connecting to back-end systems located on-premise — 31% of the sample reported this approach. The other approach was "bursting" capacity into off-premise (8% of the sample, but fast-growing compared with past research). 40% of the respondents segregated on- versus off-premise environments and 20% said they only run applications on-premise.

"Connecting cloud environments with ad hoc bridges in a hybrid fashion won't be enough in 2018. Nor will standardizing on one external provider, at least for large or innovative companies. Developers and line of business require 'best of breed,' and the purchasing department wants to avoid being locked in," said Giorgio Nebuloni, research director, IDC European Infrastructure Group.

According to the survey, carried out in May 2017, only 20% of the line-of-business representatives interviewed agreed that standardizing on one or two large IaaS/PaaS or SaaS providers would work, versus more than 30% among IT respondents. Adding a software layer to protect against cloud lock-in was the most commonly adopted strategy, according to both IT and line of business.

"Digital innovators such as ING Bank and Siemens want to consume cloud content from several cloud locations while maintaining maximum flexibility. This gives incredible freedom to users, but it creates challenges for CIOs. We believe a multicloud strategy based on hiring staff with negotiation skills, expanding investments in automation software, and revising cross-country connectivity options is a must for IT departments supporting innovative organizations," said Nebuloni.

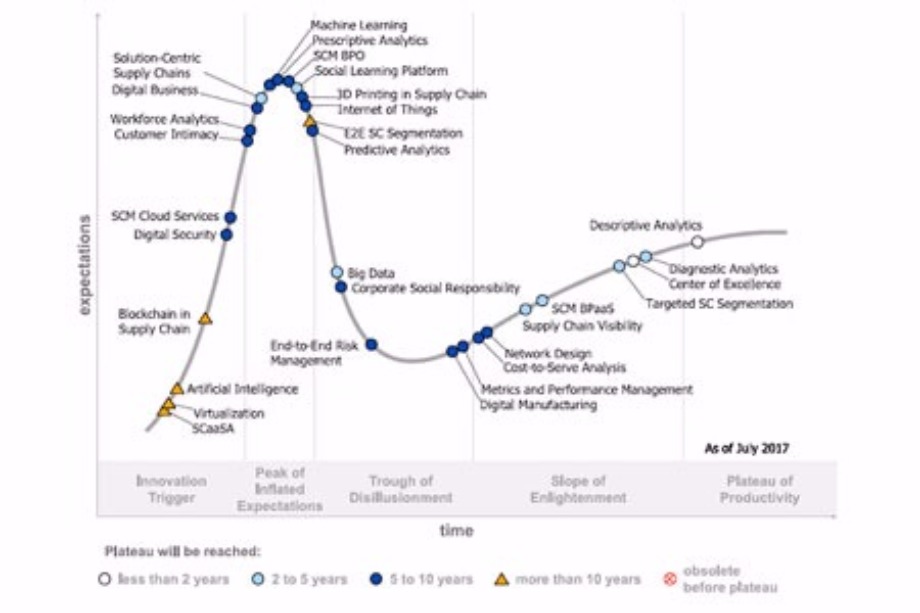

"We expect several new technologies and strategies to further digitalize organizations by transforming the supply chain," said Noha Tohamy, research vice president and distinguished analyst at Gartner. "In this year’s Hype Cycle (see Figure 1) we’ve picked out several technologies and strategies that should be on the supply chain leader’s radar, as they will reach maturity in the next five years."

Figure 1. Hype Cycle for Supply Chain Strategy, 2017

Source: Gartner (September 2017)

At the Peak

Gartner expects two entries to enter mainstream use in the next two to five years:

Social learning platforms address the large number of long-term employees retiring, capturing their knowledge to share with younger workers in a way that can be scaled across multiple business units and geographies. A more fluid and continuous learning experience that appeals to younger employees has obvious benefits, but chief supply chain officers (CSCOs) should avoid a siloed ‘shadow IT’ approach. Instead, they should integrate social learning within the context of an organization-wide IT program to maximize the benefits, although making this feasible for most organizations will take between two and five years.

Solution-centric supply chains (SCSCs) offer customers a personalized collection of products, data and services from a digitally-enabled ecosystem of partners. It’s an approach seen mainly in high-tech, medical, consumer and industrial sectors at the current time.

In the Trough

In this phase Gartner expects big data to achieve mainstream maturity in the next two to five years.

Some supply chain organizations have piloted big data technologies to use larger datasets, and others are incorporating structured data from external sources or trading partners in areas like collaborative demand fulfillment and supplier performance management.

There is now a post-hype realization that more data does not necessarily equate to better insights. Today, big data is seen as an enabler, but organizations are focusing on improving analytics and integration to drive big data strategies to productive mainstream use.

On the Slope

There are five technologies on the slope that Gartner expects to mature fully within the next five years.

Supply chain visibility (SCV) and centers of excellence (COEs) are competencies that will soon be standard business practice. SCV is about generating timely, accurate and complete views of plans, events and data across the entire supply chain including external partners. Many organizations currently lack an end-to-end approach to SCV, but as more mature and capable Internet of Things (IoT), data and analytics solutions become available, SCV will head toward mass adoption within two to five years.

A COE develops ways to find, design, develop and implement best practices across the business. While Gartner research indicates that 78 percent of supply chain organizations have one or more COE, there are reasons why the COE still hasn’t reached the plateau. One is that where COEs have been adopted, they often lack structure due to weak mandates, uncertain missions and lack of clear governance and performance metrics. As more organizations advance their expertise, Gartner expects the COE to move to productive mainstream use within two years.

Diagnostic analytics in the supply chain seeks to explain why something — an event or a trend — happened. Diagnostic analytics lags the adoption of descriptive analytics, which has reached the plateau phase of the cycle. This is because diagnostic analytics requires a clear understanding of the intertwined relationships in a supply chain, which must first be provided by descriptive analytics. Improvements in the maturity of analytics solutions are now contributing to wider adoption, as well as the increased availability and integration of real-time data in IoT-enabled supply chains.

Supply chain management (SCM) business process as a service (BPaaS) is an external service that delivers standardized processes through a cloud-sourced technology platform. Examples include compliance and regulatory reporting, freight forwarding, customs processing and aftermarket services.

The time is right for supply chain leaders to monitor the SCM BPaaS market for opportunities to gain incremental capabilities and efficiencies in their organization without needing to license new software or hire new employees.

Targeted supply chain segmentation is a technique that has been used in business for decades. Examples of segmentation include categorizing customers or suppliers as high priority or treating parts or inventory differently based on volume. Gartner expects that within two to five years there will exist a documented consensus approach to targeted supply chain segmentation that will drive mainstream adoption.

Reached the Plateau

Descriptive analytics is the application of analytics to describe what is happening, or has happened. It is the only technology that has reached the plateau of productivity in this Hype Cycle.

While some organizations still use enterprise-wide business intelligence tools from other business units like sales or finance, generating reports this way is too time-intensive and does not provide the right level of insight in a timely manner.

Descriptive analytics capabilities spanning reporting, dashboards, supply chain visibility, data visualization and alerts are already improving the level of insight for many organizations, meaning mainstream adoption is less than two years away.

"Looking further out than five years, we can see even more exciting technologies coming over the horizon," said Ms. Tohamy. "We expect that artificial intelligence, machine learning, corporate social responsibility and cost-to-serve analytics will all drive significant shifts in supply chain strategies within the next decade."

IoT boosting spending on storage, edge, infrastructure and cloud.

451 Research unveils the latest trends in IoT use and finds that the massive amounts of data generated by IoT are already having a significant impact on enterprise IT. In the latest Voice of the Enterprise: IoT – Workloads and Key Projects, analysts find that organizations deploying IoT are planning increases in storage capacity (32.4%), network edge equipment (30.2%), server infrastructure (29.4%) and off-premises cloud infrastructure (27.2%) in the next 12 months, to help manage the IoT data storm.

Analysts find that spending on IoT projects remains solid, with 65.6% of respondents planning to increase their spending in the next 12 months and only 2.7% planning a reduction.

Today, IT-centric projects are the dominant IoT use cases, particularly datacenter management and surveillance and security monitoring. Two years out, however, facilities automation will likely be the most popular use case, and line-of-business-centric supply chain management is expected to jump from number six to number three.

Finding IoT-skilled workers remains a challenge since the last IoT survey in 2016, with almost half of respondents saying they face a skills shortage for IoT-related tasks. Data analytics, security and virtualization capabilities are the skills most in demand.

451 Research finds that the collection, storage, transport and analysis of IoT data is impacting all aspects of IT infrastructure. Most companies say they initially store (53.1%) and analyze (59.1%) IoT data at a company-owned datacenter. IoT data remains stored there for two-thirds of organizations, while nearly one-third of the respondents move the data to a public cloud.

Researchers find that, once IoT data moves beyond operational and real-time uses and the focus is on historical use cases such as regulatory reporting and trend analysis, cloud storage gives organizations greater flexibility and often significant cost savings for the long term.

Despite this centralization of IoT data, the survey also finds action at the edge. Just under half of respondents say they do IoT data processing – including data analysis, data aggregation or data filtering – at the edge, either on the IoT device (22.2%) or in nearby IT infrastructure (23.3%).

“Companies are processing IoT workloads at the edge today to improve security, process real-time operational action triggers, and reduce IoT data storage and transport requirements,” said Rich Karpinski, Research Director for Voice of the Enterprise: Internet of Things. “While some enterprises say that in the future they will do more analytics – including heavy data processing and analysis driven by big data or AI – at the network edge, for now that deeper analysis is happening in company-owned datacenters or in the public cloud.”

The European Round Table of Industrialists (ERT) has published its report on building and transforming skills for a digital world.

Gigamon VP of Corporate Marketing, Julie Gibbs, discusses how Gigamon's Visibility Platform is the key to securing, managing & understanding the traffic on your network.

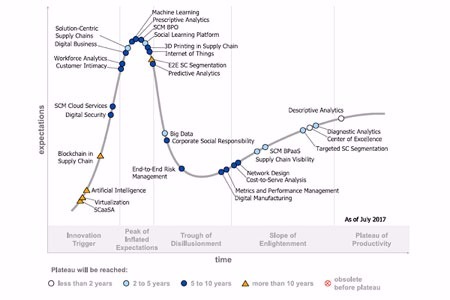

Rapid growth in cloud adoption is driving increased interest in securing data, applications and workloads that now exist in a cloud computing environment. The Gartner, Inc. Hype Cycle for Cloud Security helps security professionals understand which technologies are ready for mainstream use, and which are still years away from productive deployments for most organizations (see Figure 1).

"Security continues to be the most commonly cited reason for avoiding the use of public cloud," said Jay Heiser, research vice president at Gartner. "Yet paradoxically, the organizations already using the public cloud consider security to be one of the primary benefits."

The attack resistance of the majority of cloud service providers has not proven to be a major weakness so far, but customers of these services may not know how to use them securely. "The Hype Cycle can help cybersecurity professionals identify the most important new mechanisms to help their organizations make controlled, compliant and economical use of the public cloud," added Mr. Heiser.

Figure 1. Hype Cycle for Cloud Security, 2017

At the Peak

The peak of inflated expectations is a phase of overenthusiasm and unrealistic projections, where the hype is not matched by successful deployments in mainstream use. This year the technologies at the peak include data loss protection for mobile devices, key management as-a-service and software-defined perimeter. Gartner expects all of these technologies will take at least five years to reach productive mainstream adoption.

In the Trough

When a technology does not live up to the hype of the peak of inflated expectations, it becomes unfashionable and moves along the cycle to the trough of disillusionment. There are two technologies in this section that Gartner expects to achieve mainstream adoption in the next two years:

Disaster recovery as a service (DRaaS) is in the early stages of maturity, with around 20-50 percent market penetration. Early adopters are typically smaller organizations with fewer than 100 employees, which lacked a recovery data center, experienced IT staff and specialized skills needed to manage a DR program on their own.

Private cloud computing is used when organizations want the benefits of public cloud, such as IT agility to drive business value and growth, but aren’t able to find cloud services that meet their needs in terms of regulatory requirements, functionality or intellectual property protection. The use of third-party specialists for building private clouds is growing rapidly because the cost and complexity of building a true private cloud can be high.

On the Slope

The slope of enlightenment is where experimentation and hard work with new technologies are beginning to pay off in an increasingly diverse range of organizations. There are currently two technologies on the slope that Gartner expects to fully mature within the next two years:

Data loss protection (DLP) is perceived as an effective way to prevent accidental disclosure of regulated information and intellectual property. In practice, it has proved more useful in helping identify undocumented or broken business processes that lead to accidental data disclosures, and providing education on policies and procedures. Organizations with realistic expectations find this technology significantly reduces unintentional leakage of sensitive data. It is relatively easy, however, for a determined insider or motivated outsider to circumvent.

Infrastructure as a service (IaaS) container encryption is a way for organizations to protect their data held with cloud providers. It’s a similar approach to encrypting a hard drive on a laptop, but it is applied to the data from an entire process or application held in the cloud. This is likely to become an expected feature offered by a cloud provider and indeed Amazon already provides its own free offering, while Microsoft supports the free BitLocker tool and, for Linux, the free dm-crypt tool.

Reached the Plateau

Four technologies have reached the plateau of productivity, meaning the real-world benefits of the technology have been demonstrated and accepted. Tokenization, high-assurance hypervisors and application security as a service have all moved up to the plateau, joining identity-proofing services, which was the only entrant remaining from last year’s plateau.

"Understanding the relative maturity and effectiveness of new cloud security technologies and services will help security professionals reorient their role toward business enablement," said Mr. Heiser. "This means helping an organization’s IT users to procure, access and manage cloud services for their own needs in a secure and efficient way."

Total worldwide enterprise storage systems factory revenue was up 2.9% year over year and reached $10.8 billion in the second quarter of 2017 (2Q17), according to the International Data Corporation (IDC) Worldwide Quarterly Enterprise Storage Systems Tracker.

Total capacity shipments were up 16.5% year over year to 65.3 exabytes during the quarter. Revenue growth increased within the group of original design manufacturers (ODMs) that sell directly to hyperscale datacenters. This portion of the market was up 73.5% year over year to $2.5 billion. Sales of server-based storage declined 13.4% during the quarter and accounted for $2.9 billion in revenue. External storage systems remained the largest market segment, but the $5.3 billion in sales represented a decline of 5.4% year over year.

"The enterprise storage market finished the second quarter of 2017 on a positive note, posting modest year-over-year growth and the first overall growth in several quarters," said Liz Conner, research manager, Storage Systems. "Traditional storage vendors continue to expand their product portfolios to take advantage of the market swing towards All Flash and converged/hyperconverged systems. Meanwhile, hyperscalers saw new storage initiatives and event-driven storage requirements lead to strong growth in this segment during the second quarter."

2Q17 Total Enterprise Storage Systems Market Results, by Company

HPE/New H3C Group held the number 1 position within the total worldwide enterprise storage systems market, accounting for 20.1% of spending. Dell Inc held the next position with an 18.4% share of revenue during the quarter. NetApp finished third with 6.4% market share. IBM finished in fourth position, capturing 5.2% of global spending, and Hitachi rounded out the top 5 with 3.8% market share. As a single group, storage systems sales by original design manufacturers (ODMs) selling directly to hyperscale datacenter customers accounted for 23.3% of global spending during the quarter.

Top 5 Vendor Groups, Worldwide Total Enterprise Storage Systems Market, Second Quarter of 2017 (Revenues are in US$ millions)

| Company | 2Q17 Revenue | 2Q17 Market Share | 2Q16 Revenue | 2Q16 Market Share | 2Q17/2Q16 Revenue Growth |
| --- | --- | --- | --- | --- | --- |
| 1. HPE/New H3C Group b c | $2,170.3 | 20.1% | $2,500.2 | 23.8% | -13.2% |
| 2. Dell Inc a | $1,993.0 | 18.4% | $2,717.1 | 25.9% | -26.7% |
| 3. NetApp | $694.6 | 6.4% | $595.4 | 5.7% | 16.7% |
| 4. IBM | $566.3 | 5.2% | $568.5 | 5.4% | -0.4% |
| 5. Hitachi | $412.6 | 3.8% | $429.0 | 4.1% | -3.8% |
| ODM Direct* | $2,520.2 | 23.3% | $1,452.6 | 13.8% | 73.5% |
| Others | $2,451.3 | 22.7% | $2,238.1 | 21.3% | 9.5% |
| All Vendors | $10,808.2 | 100.0% | $10,500.9 | 100.0% | 2.9% |

Source: IDC Worldwide Quarterly Enterprise Storage Systems Tracker, September 14, 2017
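The market share and growth columns follow from the two revenue columns by simple arithmetic. The sketch below recomputes them for three rows copied from the table; the function and variable names are illustrative assumptions rather than anything IDC publishes. Because the published revenues are rounded to one decimal place, a recomputed figure can occasionally differ from the published one by a tenth of a percentage point.

```python
# Revenue figures (US$ millions) copied from the IDC table above.
# Function and variable names are illustrative assumptions, not IDC's.
REVENUE = {
    "HPE/New H3C Group": (2170.3, 2500.2),  # (2Q17, 2Q16)
    "NetApp": (694.6, 595.4),
    "ODM Direct": (2520.2, 1452.6),
}
TOTAL_2Q17 = 10808.2
TOTAL_2Q16 = 10500.9


def market_share(revenue, total):
    """Vendor revenue as a percentage of total market revenue."""
    return 100.0 * revenue / total


def yoy_growth(current, previous):
    """Year-over-year revenue change as a percentage."""
    return 100.0 * (current - previous) / previous


for vendor, (rev_17, rev_16) in REVENUE.items():
    share = market_share(rev_17, TOTAL_2Q17)
    growth = yoy_growth(rev_17, rev_16)
    print(f"{vendor}: share {share:.1f}%, growth {growth:+.1f}%")
```

The same two functions applied to the "All Vendors" row give the overall market growth quoted at the start of the report.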

Inaugural quarterly report from DigitalOcean called DigitalOcean Currents reveals developers’ key priorities.

A new quarterly report from DigitalOcean, the cloud for developers, highlights that despite mainstream attention around multi-cloud solutions, 70 percent of respondents have no plans to implement them in the next year. Despite Gartner predicting that 90 percent of organisations will adopt hybrid infrastructure management by 2020, the survey of 1,000 respondents from across the world reveals the reality of developers and system admins working in a wide range of industries.

“There is a lot of available data on programming trends, but what is unique about DigitalOcean is that we are the only cloud platform provider that is truly developer-first,” said Shiven Ramji, VP of Product, DigitalOcean. “This gives us a unique perspective on how specific segments of the developer community are thinking and feeling with regard to the tools they like to use and where they want to spend their time.”

The survey also includes a special focus on developers’ use of and requirements for object storage, in conjunction with the company’s launch of its long-awaited object storage solution: Spaces. While the survey found that fewer than half (45 percent) of respondents are currently using object storage as a way to handle the explosive growth of different types of data, the majority (53 percent) have researched a solution in the past five years, indicating a growing general interest in this type of storage. Respondents said the three main benefits over other storage solutions are cost-effectiveness (30 percent), scalability (29 percent) and ease of data retrieval.

Additional findings from DigitalOcean Currents:

MySQL reigns supreme: For database software, MySQL is the most popular solution, with 35 percent preferring it, followed by PostgreSQL (26 percent) and MariaDB (19 percent).

Linux Popularity: Developers and sysadmins spend more time using Linux (38.6 percent) compared to 36.4 percent who use MacOS and only 23.1 percent using Windows – showing the differences for the developer segment compared to the overall market share and reflecting the time they spend in server environments.

Developers Prefer to Learn Online: Books used to be how many developers learned to code, but that’s no longer the case. Online tutorials and official documentation far outpace books as the preferred learning method for developers, with 80 percent preferring these resources.

Valec is an organisation which builds, maintains and operates Brazilian railways. The public company manages three railways: the North-South Railway, the West-East Integration Railway and the Centre-West Integration Railway. The organisation plays a major role in developing infrastructure across a very large territory in order to support the Brazilian economy.

Valec is currently in the process of building the North-South Railway, which when completed will run from Belém in the North to the southernmost city in Brazil, Rio Grande, enabling the movement of valuable commodities, such as ethanol, soya and metals. 1,575 km of this railway is already in operation, but Valec is still building around 700 km of the track. A control centre facility, based in Palmas, coordinates work from Porto Nacional to Estrela d’Oeste, controlling the movement of maintenance vehicles and ensuring that all building and engineering tasks run according to plan. Trains are using sections of the track to transport goods, while mechanics, engineers, builders, and other workers are all posted at different points down the length of the track to undertake construction work and maintenance on specific sections of the railway. Numerous maintenance vehicles drive up and down the line, managing the railway, delivering vital raw materials and moving workers.

Considering the sheer distances involved and the scale of the work at hand, communication between their vehicles, trains and control centre is key. Despite this, Valec’s communications were previously hampered by intermittent terrestrial connectivity, older radio technology and a paper system, whereby drivers would be given a ‘license’ from the control centre, which would specify a beginning and end point for their journey and cargo.

This system was problematic on several levels – for one thing, it did not allow the control centre facility to have any real feedback on what its vehicles were doing. It also restricted the agility of Valec – rather than being able to react flexibly to changing events happening along the line and being able to adjust resource allocation accordingly by communicating with drivers, it instead left the team with only the paper-based system as a guarantee of their whereabouts. Moreover, not being able to see where drivers were in real time represented a safety and health issue, as these drivers were travelling very long distances to remote locations on a daily basis. For the operators of trains using these stretches of lines, there was also an economic cost involved, as trains – which can be up to 1km long - were using a lot of diesel stopping and starting again.

Suppliers of managed services are taking on more responsibilities as they become the driving force for IT industry growth, delegates at the Managed Services & Hosting Summit were told in London this week. More than two hundred managed services providers (MSPs) and aspiring providers of managed services were advised of their need to become rounded providers of business productivity, by adopting the right mindset, getting more training on key issues and working together to offer a more comprehensive range of services.

Mark Paine, Gartner Research Director, told the conference that service providers had to take account of the changing attitudes of buyers by focusing on business outcomes and on the raised expectations among buyers now that IT has had to become a productivity asset for the business. And they need not try to do it all themselves: partners can co-operate to address the wider market requirements, he said. MSPs had to carefully choose go-to-market partners that can talk both technology and business; and they had to start with a vision, look at use cases, and consider processes, challenges and outcomes for their customers.

Customer acquisition costs (CAC) versus lifetime value (LTV) also had to be brought into the equation, considering margins, partnering agreements and questions as to who owns the invoice, plus the on-going up-selling/cross-selling opportunities. The rewards were there through continuing revenues and repeatable business, as successful MSPs have shown. Those rewards are substantial for MSPs that get it right, Mark Paine said, including valuable access to customers' ecosystems, becoming a pivotal part of customers' success stories, and benefiting from lead sharing with technology partners.
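To make the CAC-versus-LTV comparison concrete, here is a minimal sketch using one common simplification: lifetime value taken as monthly recurring revenue multiplied by gross margin and by the expected length of the customer relationship. The formula choice, the function names and every figure are hypothetical illustrations, not numbers presented at the Summit.

```python
# Hypothetical figures for illustration only; none come from the Summit.

def lifetime_value(monthly_revenue, gross_margin, lifetime_months):
    """Gross profit expected from one customer over the whole relationship."""
    return monthly_revenue * gross_margin * lifetime_months


def ltv_to_cac(ltv, cac):
    """How many times the lifetime value covers the cost of winning the customer."""
    return ltv / cac


ltv = lifetime_value(monthly_revenue=500.0, gross_margin=0.4, lifetime_months=36)
ratio = ltv_to_cac(ltv, cac=2400.0)
print(f"LTV: {ltv:.0f}, LTV/CAC ratio: {ratio:.1f}")
```

A fuller model would also reflect the items raised in the talk, such as partner margin splits and up-selling or cross-selling revenue, which this sketch leaves out.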

The added benefit of recurring revenue from cloud services is substantial and this was echoed at the Summit by Michael Frisby, Managing Director of Cobweb Solutions, which sells cloud solutions from Microsoft, Mimecast, Acronis and others. Frisby said: “We became a cloud managed service provider from originally focusing on traditional solutions like Exchange, and 95% of our revenues are now recurring. But you have to make sure you get the marketing right to do it.” Continuing training and education of the MSP were essential, he added, pointing out that Cobweb itself had boosted its investment in this four-fold in the last year.

Other issues covered included the growing importance of compliance, particularly with regard to GDPR: legal firm Fieldfisher's partner Renzo Marchini warned that the rules were changing and the impact on MSPs would be profound. Service providers are to be regarded as 'data controllers' under GDPR, and with that comes the potential for huge fines in the event of regulation breaches.

The theme of partnering was confirmed later in a round-table discussion when the Global Technology Distribution Council’s European head, Peter van den Berg, outlined how distributors were investing heavily in services and education. Security was also a key issue, with the service provider very much in the firing line in the event of any incident. Datto shared some of the latest research from its global survey: Business Development Director Chris Tate revealed that “87% of our partners have had to deal with a ransomware attack on behalf of their clients.” Earlier, SolarWinds MSP had shown how all-pervading the issue was becoming in discussions with customers, and how MSPs needed to increase their understanding, particularly in relation to the challenges faced by smaller businesses.

The Managed Services & Hosting Summit, now in its seventh year, was also a platform for other news: Kaspersky's Global Product Manager Oleg Gorobets made the case for its growing programme for better security among MSPs. But there was a general air of optimism, with the prospect that vast fields of data and an increasingly information-rich environment will produce new sales and growth opportunities for the MSP, according to David Groves, Director of Product Management, Maintel, speaking on behalf of sponsor Highlight.

M&A specialist Hampleton’s Director, David Riemenschneider, demonstrated that these opportunities and the surge in demand in such areas as AI and security could yield rich rewards for MSPs building value into their own business while addressing the need to create value for their clients. He pointed to heightened interest in service providers, especially those with security, financial services or automotive expertise.

Managed Services & Hosting Summit 2017 (www.mshsummit.com) sponsors included: Datto, Highlight, Kaspersky, Mimecast, SolarWinds MSP, Autotask, Cisco Umbrella, ConnectWise, DataCore Software, ESET, ForcePoint, ITGlue, Kingston Technology, Nakivo, WatchGuard, 5NineSoftware, Altaro, APC by Schneider Electric, Beta Distribution, Continuum, Deltek, Egenera, F-Secure, Identity Maestro, iland, Barracuda MSP, Kaseya, RapidFire Tools, SpamExperts, Webroot and Wasabi.

Many of the themes and issues addressed in this week’s London event will be developed further in the second annual European Managed Services & Hosting Summit which, it has just been announced, will be staged in Amsterdam on 29 May 2018 (www.mshsummit.com/amsterdam).

Following assessment and validation from the panel at Angel Business Communications, the shortlist for the 24 categories in this year’s SVC Awards has been put forward for online voting by our readership.

Voting is free of charge and must be made online at www.svcawards.com

The SVC Awards celebrate achievements in Storage, Cloud and Digitalisation, rewarding the products, projects and services as well as honouring companies and teams. The SVC Awards recognise the achievements of end-users, channel partners and vendors alike and in the case of the end-user category there will also be an award made to the supplier who nominated the winning organisation.

Voting remains open until 3 November so there is time to make your vote count and express your opinion on the companies that you believe deserve recognition in the SVC arena.

The winners will be announced at a gala ceremony on 23 November at the Hilton London Paddington Hotel.

Welcoming both the quantity and quality of the 2017 SVC Awards shortlist entries, Jason Holloway, Director of IT Publishing & Events at Angel, said: “I’m delighted that we have this annual opportunity to recognise the innovation and success of a significant part of the IT community. The number of entries, and the quality of the projects, products and people they represent, demonstrate that the SVC Awards continue to go from strength to strength and fulfil an important role in highlighting and recognising much of the great work that goes on in the industry.”

All voting takes place online and voting rules apply. Make sure you place your votes by 3 November when voting closes. Visit: www.svcawards.com

Storage Project of the Year

Cohesity supporting Colliers International

DataCore Software supporting Grundon Waste Management

Mavin Global supporting The Weetabix Food Company

Cloud / Infrastructure Project of the Year

Axess Systems supporting Nottingham Community Housing Association

Correlata Solutions supporting insurance company client

Navisite supporting Safeline

Hyper-convergence Project of the Year

HyperGrid supporting Tearfund

Pivot3 supporting Bone Consult

UK Managed Services Provider of the Year

EACS

EBC Group

Mirus IT Solutions

netConsult

Six Degrees Group

Storm Internet

Vendor Channel Program of the Year

NetApp

Pivot3

Veeam Software

International Managed Services Provider of the Year

Alert Logic

Claranet

Datapipe

Backup and Recovery / Archive Product of the Year

Acronis – Backup 12.5

Altaro Software – VM Backup

Arcserve - UDP

Databarracks – DraaS, BaaS, BCaaS solutions

Drobo – 5N2

NetApp – BaaS solution

Quest – Rapid Recovery

StorageCraft – Disaster Recovery Solution

Tarmin – GridBank

Cloud-specific Backup and Recovery / Archive Product of the Year

Acronis – Backup 12.5

CloudRanger – SaaS platform

Datto – Total Data Protection platform

StorageCraft – Cloud Services

Veeam Software - Backup & Replication v9.5

Storage Management Product of the Year

Open-E – JovianDSS

SUSE – Enterprise Storage 4

Tarmin – GridBank Data Management platform

Virtual Instruments – VirtualWisdom

Software Defined / Object Storage Product of the Year

Cloudian – HyperStore

DDN Storage – Web Object Scaler (WOS)

SUSE – Enterprise Storage 4

Software Defined Infrastructure Product of the Year

Anuta Networks – NCX 6.0

Cohesive Networks – VNS3

Runecast Solutions – Analyzer

Silver Peak – Unity EdgeConnect

SUSE – OpenStack Cloud 7

Hyper-convergence Solution of the Year

Pivot3 - Acuity Hyperconverged Software Platform

Scale Computing - HC3

Syneto - HYPERSeries 3000

Hyper-converged Backup and Recovery Product of the Year

Cohesity – DataProtect

ExaGrid - HCSS for Backup

Syneto - HYPERSeries 3000

PaaS Solution of the Year

CAST Highlight - CloudReady Index

Navicat – Premium

SnapLogic - Enterprise Integration Cloud

SaaS Solution of the Year

Adaptive Insights – Adaptive Suite

Impartner – PRM

IPC Systems - Unigy 360

Ixia - CloudLens Public

SaltDNA - Secure Enterprise Communications

x.news information technology gmbh – x.news

IT Security as a Service Solution of the Year

Alert Logic – Cloud Defender

Barracuda Networks - Essentials for Office 365

SaltDNA - Secure Enterprise Communications

Votiro - Content Disarm and Reconstruction technology

Cloud Management Product of the Year

CenturyLink - Cloud Application Manager

Geminaire - Resiliency Management Platform

Highlight - See Clearly - Business Performance Acceleration

HyperGrid – HyperCloud

Rubrik – CDM platform

SUSE - OpenStack Cloud 7

Zerto - Virtual Replication

Storage Company of the Year

Acronis

Altaro Software

DDN Storage

NetApp

Virtual Instruments

Cloud Company of the Year

Databarracks

Navisite

Six Degrees Group

Storm Internet

Hyper-convergence Company of the Year

Cohesity

Pivot3

Syneto

Storage Innovation of the Year

Acronis - Backup 12.5

Altaro Software - VM Backup for MSPs

DDN Storage - Infinite Memory Engine

Excelero – NVMesh

Nexsan – Unity

Cloud Innovation of the Year

CloudRanger – Server Management platform

IPC Systems - Unigy 360

SaltDNA - Secure Enterprise Communications

StaffConnect - Mobile App Platform

Zerto - ZVR 5.5

Hyper-convergence Innovation of the Year

Pivot3 - Acuity HCI Platform

Schneider Electric - Micro Data Centre Solutions

Syneto - HYPERSeries 3000

Digitalisation Innovation of the Year

Asperitas – Immersed Computing

IGEL - UD Pocket

Loom Systems - AI-powered log analysis platform

MapR – XD

For more information and to vote visit: www.svcawards.com

There was a time Handle Financial thought all that could be done in the cloud was development and QA work. The idea of moving their electronic cash transaction network out of traditional on-premise systems was sort of a pipe dream (pun intended) – the cloud market just wasn’t mature enough.

But as AWS gained traction and performance and security improved, moving to the cloud became a real possibility, and a potential competitive differentiator. Fast-forward to today, and Handle Financial is 99.5 percent in the cloud.

Handle Financial has built a payment network that empowers users to pay rent and utility bills, repay loans, buy tickets and do much more with cash. Consumers can make payments on their own schedule at more than 17,000 trusted locations, including 7-Eleven and Family Dollar stores across the U.S.

A move to the cloud would make them nimbler; more agile.

Their cloud journey started on AWS using Elastic Load Balancers. But scaling to such a large volume – they’re running 28 ELBs – became costly and they lost some technical capabilities that they depended on: for example, they could not use static IPs, which meant partners couldn’t whitelist them. Using ELBs also forced them to sacrifice SSL client-server authentication. And they couldn’t manage ELBs individually.

Those limitations brought Handle Financial to A10 Networks. They had been an A10 Networks customer previously, and had experienced business advantages. This time round, they wanted to move to the cloud and eliminate the costs and resource constraints that come with managing and maintaining hardware.

And leveraging Harmony Controller and Lightning ADC will help them in the future as well. They recently acquired a bill payment app, which runs in Microsoft Azure. Harmony’s ability to bridge multiple clouds means it can support their AWS environment and this new Azure environment simultaneously. And they can add other clouds, along with traditional on-premise environments, as well. That’s powerful.

Pioneer in Cloud Data Management to work with team to accelerate the protection of race data.

Mercedes-AMG Petronas Motorsport confirms a new team partnership with Rubrik, specialist in Cloud Data Management.

With data volumes, backup and recovery requirements becoming ever more demanding in Formula One, the team is investing in class-leading technology in order to stay ahead. Specifically, the team will be using a multi-node Rubrik cluster at their Brackley headquarters to protect the team’s critical race data.

The team will also use Rubrik’s REST API (Application Programming Interface) to integrate with their current tools to analyse their data utilisation. With this information, the team expects to become even more efficient in how it manages and utilises the vast volumes of race data.

“We are delighted to welcome Rubrik to Mercedes-AMG Petronas Motorsport,” commented Toto Wolff, Head of Mercedes-Benz Motorsport. “In the fast-moving world of information technology, it’s essential to be right at the forefront, particularly for us in the area of data management, and we look forward to working with Rubrik to maximise our potential in this area.”

“Mercedes-AMG Petronas Motorsport is at the forefront of adopting new technologies within the racing world,” said Bipul Sinha, co-founder and CEO, Rubrik. “Rubrik’s cloud data management platform will enable the team to access and manage critical race information, providing them with a new competitive edge. We are excited to partner with Mercedes-AMG Petronas Motorsport to turbocharge their innovative approach to data management and contribute to their continued success.”

Global publisher uses Datapipe’s global expertise to expand its infrastructure to China and move infrastructure onto public cloud.

BMJ, a global healthcare knowledge provider, has used Datapipe’s expertise to launch its hybrid multi-cloud environment and enter the Chinese market using Alibaba Cloud. The announcement follows last year’s partnership in which BMJ implemented a DevOps culture and virtualised its IT infrastructure with Datapipe’s private cloud.

In 2016, Datapipe announced it was named a global managed service provider (MSP) partner of Alibaba Cloud. Later that year, it was named Asia Pacific Managed Cloud Company of the Year by Frost & Sullivan. BMJ opened a local Beijing office in 2015, and it was drawn to Datapipe’s services at the outset by Datapipe’s on-the-ground support in China and knowledge of the local Chinese market.

Sharon Cooper, BMJ’s Chief Digital Officer says, “We see the People’s Republic of China as a key part of our growing international network. Therefore, we needed the technical expertise to be able to expand our services into China, and a partner to help us navigate the complex frameworks required to build services there. Datapipe, with its on-the-ground support in China and knowledge of the market has delivered local public-cloud infrastructure utilising Alibaba Cloud.”

Last year, BMJ used Datapipe’s expertise to move to a new, agile way of working. BMJ fully virtualised its infrastructure and automated its release cycle using Datapipe’s private cloud environment. Now, it has implemented a hybrid multi-cloud solution using both AWS and Alibaba Cloud, fully realising the strategy it started working towards two years ago.

“It is exciting to be working with a company that has both a long, distinguished history and is also forward-thinking in embracing the cloud,” said Tony Connor, Head of EMEA Marketing for Datapipe. “Datapipe partnered with Alibaba Cloud last year in order to better support our clients’ global growth both in and out of China. We are delighted to continue to deliver for BMJ, building upon our private cloud foundations, and taking them to China with public cloud infrastructure through our relationship with AliCloud.”

Alex Hooper, Head of Operations, BMJ says: “We have now fully realised the strategy that we first mapped out two years ago, when we started our cloud journey. In the first year, we were able to fully virtualise our infrastructure using Datapipe’s private cloud, and in the process, move to a new, agile way of working. In this second year, we have embraced public cloud and taken our services over to China.”

Alex Hooper explains further: “Previously, we could only offer stand-alone software products in China, which are delivered on physical media and require quarterly updates to be installed by the end-user. With Datapipe’s help, we now have the capability to offer BMJ’s cloud-based services to Chinese businesses.”

This has been made possible by utilising data centres located in China, using Alibaba Cloud, which links to BMJ’s core infrastructure and gives BMJ all the benefits of public cloud infrastructure, but located within China to satisfy the requirements of the Chinese authorities.

Alex Hooper continues, “With Datapipe’s help, it was surprisingly easy to run our services in China and link them to our core infrastructure here in the UK. Datapipe has done an exemplary job.”

BMJ has seen extraordinary change in its time. Within its recent history, it has transitioned from traditional print media to becoming a digital content provider. With Datapipe’s help it now has the infrastructure and culture in place to cement its position as a premier global digital publisher and educator.

ARM has been selected for the WestConnex road infrastructure project.

Sword Active Risk, a supplier of specialist risk management software and services, has been selected by Sydney Motorway Corporation (SMC) to manage Risk, Audit and Compliance for its Sydney road infrastructure project, WestConnex. The Active Risk Manager (ARM) Software-as-a-Service solution will be delivered via Amazon Web Services and will be rolled out across the three different disciplines in a phased implementation. ARM was selected after a formal competitive tender process and will replace the current risk management tools within the project.

Darren Gustard, Chief Audit and Risk Executive of Sydney Motorway Corporation, said: “After a rigorous competitive tender process Active Risk Manager from Sword Active Risk was selected as the best match for our requirements. Additionally we were impressed with ARM’s strong track record for managing risk within globally renowned infrastructure mega-projects.”

Keith Ricketts, Vice President of Marketing at Sword Active Risk, commented: “Increasingly we are seeing forward-thinking organizations like Sydney Motorway Corporation moving towards a more integrated and holistic approach to risk management. The ARM SaaS delivery model is enabling organizations to implement in a fraction of the time of on-premise solutions.”

“With fewer operational overheads, reduced IT dependence and a move from capital expenditure to operational expenditure, more and more risk-aware businesses are looking to underpin successful project management, audit and compliance with a SaaS based risk solution that gives a much faster ‘Time to Value’,” continued Ricketts.

Active Risk Manager is used in mega-projects around the world including Crossrail, Downer Rail, Northern Gateway Alliance and Thames Tunnel.

Kela is the Finnish government agency responsible for the distribution of social security benefits, including pensions, sickness and housing benefits, and health insurance. Kela manages around 40 different benefits payments and distributes approximately €14 billion to Finnish citizens annually.

Kela’s mainframe, which comprises the core of its IT infrastructure, is one of the oldest and largest in the Nordic region and has been in use for decades. But while its mainframe has served the organisation well over the years, Kela started to recognise that it could not support its long-term ambitions for two main reasons. The first related to the maintenance burden of the mainframe itself, which was becoming increasingly difficult and costly to keep running. The second related to Kela’s digital ambitions, which were being hamstrung by its mainframe.

Markku Suominen, ICT Director at Kela, discusses the challenges of using this technology: “Mainframes are very big and expensive to run, costing us about €8 million per year and we recognised that those costs would only increase. There is now a severe shortage of personnel in Finland that are trained to maintain mainframes, because the technology is now so outdated that students no longer learn how to work with them. At the same time, we are trying to develop our online and digital offering to be able to provide best-in-class services to the Finnish public, but found that we were unable to do so due to the inflexible nature of our infrastructure.”

However, while the organisation could have rewritten the apps on its mainframe and developed a completely new environment, this was not a viable option. Markku explains: “The programmes and databases on our mainframes use over 10 million lines of PL/1 code. We do not have the resources to edit this quantity of programming, and we also knew that asking our talented coders to rewrite millions of lines of code would be very demotivating for them. We needed a new environment that would enable us to leverage the skills of our staff and maintain our existing applications, which is why we decided to simply re-host the mainframe with TmaxSoft.”

Big Data, IoT and AI - the holy tech trinity - are powering the exponential boom of technology in today's society. Yet businesses are only just scratching the surface when it comes to getting the most out of this ecosystem. One of the biggest obstacles to wider adoption is a lack of understanding of what each technology does and how they connect to make a bigger picture.

By Chris Proctor, CEO of Oneserve.

Take Big Data and IoT. Thanks to the rise of the Internet of Things (IoT), an abundance of data from every imaginable industry is available to be harvested and analysed. According to data software giant SAS, in 2012 the amount of big data stored across the world exceeded 2.8 zettabytes; this is predicted to be 50 times larger by 2020. However, globally, we only analyse approximately 0.5% of this data. Getting the most out of data remains the biggest challenge facing business leaders today.
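To put those figures in absolute terms, a rough back-of-the-envelope calculation can help. The short Python sketch below simply applies the multipliers quoted above. The function and constant names are illustrative, and it assumes the 0.5% analysis rate applies unchanged to the projected 2020 volume, which the quoted figures do not state.

```python
# Figures quoted in the article (attributed there to SAS).
STORED_2012_ZB = 2.8     # zettabytes stored worldwide in 2012
GROWTH_FACTOR = 50       # predicted multiple by 2020
ANALYSED_SHARE = 0.005   # roughly 0.5% of data is analysed


def projected_volume_zb(base_zb: float, factor: float) -> float:
    """Scale a base data volume (in zettabytes) by a growth factor."""
    return base_zb * factor


def analysed_volume_zb(total_zb: float, share: float) -> float:
    """Volume actually analysed, given the share of data that is analysed."""
    return total_zb * share


if __name__ == "__main__":
    stored_2020 = projected_volume_zb(STORED_2012_ZB, GROWTH_FACTOR)
    # Assumption: the 0.5% share still holds for the projected volume.
    analysed_2020 = analysed_volume_zb(stored_2020, ANALYSED_SHARE)
    print(f"Projected volume by 2020: {stored_2020:.0f} ZB")
    print(f"Analysed at 0.5%: {analysed_2020:.2f} ZB")
    print(f"Left unanalysed: {stored_2020 - analysed_2020:.2f} ZB")
```

On these numbers the projected volume is around 140 zettabytes, of which less than one zettabyte would be analysed.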

We’ve toyed with the potential of AI for decades but only recently have the possibilities of its real-world application been realised. Deep Machine Learning, the most advanced form of AI currently, has been the key to analysing the huge amounts of data provided by the IoT and applying it to practical tasks. From filtering our email inbox, to route navigation software, and even mastering hugely complex board games, AI is the final piece of the jigsaw, uniting these three exciting technology innovations.

Another reason businesses are timid about integrating IoT, AI and Big Data technology is the initial outlay. Investing in items such as RFID-enabled sensors, mobile hardware and a full IoT data analytics suite can be costly. However, these investments have the potential to bring significant cost savings in the long term, offering a strong return on investment.

For example, an entire business can be connected from the production line to the field and with AI enabled analysis of its data, new insight becomes available which can be used to transform processes. From here the possibilities are endless.

With Big Data, IoT and AI working seamlessly together, solutions to asset management, remote monitoring, predictive maintenance, customer insight, workflow and a wealth of other processes key to any business can be gained at an unprecedented level for the first time.

Within manufacturing, this could translate to having sensors on machinery that send data to a central AI system, which in turn could predict when a machine is about to break. This allows maintenance to be predictive rather than reactive. With reactive maintenance, emergency repairs can cost 3 to 9 times more than planned repairs. Shipping spare parts and machine downtime during production can result in costs of up to £18,000 per machine in some work environments. Further, full data analytics can inform actionable KPIs and event data capture, reducing problem-solving time and even opening up the possibility of automating processes.
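The predictive approach described above can be illustrated with a minimal sketch. It is not how any particular vendor's system works: a simple rolling statistical check stands in for the "central AI system", and the function names, window size, threshold and sample readings are all hypothetical. The second function just applies the 3 to 9 times multiplier quoted above.

```python
from statistics import mean, stdev
from typing import Optional, Sequence, Tuple


def first_anomaly(readings: Sequence[float],
                  window: int = 5,
                  threshold: float = 3.0) -> Optional[int]:
    """Return the index of the first reading that sits more than
    `threshold` standard deviations above the mean of the preceding
    `window` readings, or None if no reading does."""
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        # A perfectly flat baseline has no spread to compare against.
        if sigma > 0 and (readings[i] - mu) / sigma > threshold:
            return i
    return None


def emergency_cost_range(planned_cost: float) -> Tuple[float, float]:
    """Apply the article's '3 to 9 times' multiplier to a planned repair cost."""
    return 3 * planned_cost, 9 * planned_cost


if __name__ == "__main__":
    # Hypothetical vibration readings from one machine sensor.
    vibration = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 0.9, 1.0, 2.5, 3.0]
    idx = first_anomaly(vibration)
    if idx is not None:
        low, high = emergency_cost_range(1000.0)
        print(f"Schedule maintenance: reading {idx} looks abnormal")
        print(f"An emergency repair could cost between {low:.0f} and {high:.0f}")
```

A production system would use far richer models and many sensors per machine, but the principle is the same: flag the drift early enough for the repair to be planned rather than forced.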

This is a reality right now. Rolls-Royce, utilising Microsoft’s Azure IoT Suite, is now using smart sensors installed in its jet engines to give engineers immediate operating data. Maintenance expenditure in aviation is a major bugbear, with engines being the highest cost item. The fuel cost for one jet engine on a Boeing 747-400 during a transatlantic flight in 2015 was as much as $3,500 per hour. Through intelligent use of AI-enabled software that uses big data to control engine management and maintenance hardware, the company is able to significantly reduce the costs associated with ground maintenance and disruption.

Detailed and personalised insight into customers is another major advantage of harnessing these three technologies. With the democratisation of technology and increased competition, customer experience is more vital today than ever - bad customer experience is no longer excusable. Now, businesses no longer need to guess when targeting consumers or when marketing.

Fitness First are using wearable technology combined with Apple’s iBeacon protocol to enable targeted fitness related content to be sent to customers when they enter the gym. This could be fitness regimes tailored to the individual based on data gathered from their wearable, or any content to help them with their personal fitness. This level of personalisation and detailed insight, which improves customer satisfaction, is possible only through the combination of IoT, AI and big data.

Here, indeed, is the holy trinity: large amounts of data that can now be analysed in real time and applied practically, through devices permanently tuned into the data. Oh, did I also mention that scalability is built-in?

Smart Cities sound like a great idea -- on paper. But the problem is that most of the people working on creating them are focusing on individual elements of city problems and developing technology-based solutions to address them.

By Megan Goodwin, Joint Managing Director, IRM.

But cities are human hives, not ‘machines for living’ (to paraphrase Le Corbusier). People have to live in them and this careful balance between increased urbanisation (the United Nations has warned that 65% of humans will live in a city by 2050) and the social isolation that technology can create means that any design for a Smart City that fails to take into account human behaviour is doomed to fail.

Every conference I’ve attended over the last few months has centred on the technological infrastructure of Smart Cities: sustainability, CO2 emissions, congestion, parking, driverless cars, city lights, energy, data integration, and so on and so forth.

While, of course, they were all very interesting and valid discussions delivered by people who are experts in their field, I can’t help but feel that these meetings are missing a key factor -- the human/psychological element of Smart Cities. After all, the whole point behind Smart Cities must be about creating an efficient, sustainable, healthy space where people are happy and involved in their city because their environment is only going to become more and more densely populated. The density is not what causes the problem, according to the UN, but the planning of infrastructure.

Cities are getting bigger. The United Nations predicts that by 2030, 662 cities around the world will have at least one million residents, up from 512 in 2016. But as cities grow, so does the tendency for people to become more socially isolated.

Technology, which should be a facilitator of communications and community, is compounding the problem for far too many -- social media, for example, can increase and reinforce feelings of worthlessness, cyberbullying is on the rise and ‘fake news’ being transmitted on a global scale is undermining people’s trust in big brands, organisations and government bodies.

Are we surprised? We don’t pop down to the high street to do our shopping anymore -- we shop online, pay digitally and have it delivered… It was bad enough when the car became ubiquitous and we all drove everywhere -- but at least we left the house. Smart Cities mustn’t be allowed to create a nation of ‘shut ins’.

Instead, technology should be incorporated into city design in a way that makes it easier for us to tackle issues such as loneliness, mental health problems, personal security, eating disorders and drug addiction.

It might be less sexy as a political headline, but how we can use Big Data, technology and start-ups to address these problems should be a key question in any plans for a Smart City.

Let’s use technology to make people happier, encourage them to become healthier and create social structures that support those who need support. Technology should be about transforming people’s lives for the better, not reducing them to bytes of data in the interests of ‘efficiency’.

Smart city advisory committees are made up of everyone from anthropologists to developers, accountants to designers, but where are the psychologists? Where are the poets? Where are the game makers and the game changers? Where does the human angle and understanding come from?

Technology is literally an enabler; it can do whatever you want it to do. So why aren’t cities using it as a way to get people to connect and to take positive steps to improve their lives?

There is some hope for the future of Smart Cities, though: Millennials have a totally different attitude to life than the generations that came before them. These citizens value sharing, spontaneity, meaningful experiences and collaboration. And that’s exactly why ‘sharing economy’ pioneers like Airbnb and Uber are leading the charge.

Smart technology needs to be about connecting people - bringing people together and giving them a feeling of community. A giant leap towards this has been taken by AccorHotels with the launch of its Jo&Joe brand, which disrupts the traditional hotel format with more flexible space and community hubs designed for both local residents (dubbed “Townsters”) and hotel guests/travellers (“Tripsters”), encouraging the kind of integration that appeals to the Millennial generation.

Getting people involved in the vision of the smart city is key. UN-Habitat is already making waves in this area through its Block by Block project with Minecraft, which uses the world-building computer game as a participation tool for local communities to design their own public spaces. What’s great about this is that after building projects in Minecraft, presentations are put forward to stakeholders from local government, the mayor’s office, planners and architects for future urban design.

Having worked in the games industry myself for more than 15 years, I’ve seen first-hand how technology can be used as an enabler to bring people together, as well as isolate them. For Smart Cities to be truly “smart”, the integration of human needs must be a fundamental requirement of their planning.

The digitisation of business has put IT departments under pressure - and it’s continuing to rise. In the past few years, the Internet of Things (IoT), big data, and mobile applications have all gone through a huge growth spurt. Coupled with dynamic business needs and user requirements for fast, anytime, anywhere access, the demands on legacy data centres are at an all-time high.

The pace of technology is speeding up. The ability to deliver applications faster while building a solid cloud strategy is no longer a nice-to-have, but a must-have for modern businesses looking to stay competitive while meeting customer needs.

The fast pace of digital business spurred organisations around the world to embrace compute virtualisation, which has transformed the data centre over the past decade.

But that transformation isn’t complete. Many IT teams still rely on hardware-centric approaches to storage and networking, which are expensive and time-consuming to manage and maintain, and don’t provide the flexibility and agility that today’s users demand.

In an era where speed and performance are critical, organizations need a more agile and flexible approach to IT infrastructure if they want to keep pace.

According to Gartner, the hyper-converged integrated system (HCIS) market is projected to surpass $10 billion in revenue by 2021, with a compound annual growth rate of 48% (from 2016 through 2021).
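As a rough illustration of what that growth rate implies, the sketch below works backwards from the projected figure using the standard compound annual growth rate formula. It assumes five compounding years between 2016 and 2021 and takes the market at exactly $10 billion in 2021, whereas the forecast says only that it will surpass that level; the function names are illustrative.

```python
def project(base: float, rate: float, years: int) -> float:
    """Compound `base` forward at annual growth `rate` for `years` years."""
    return base * (1 + rate) ** years


def implied_base(target: float, rate: float, years: int) -> float:
    """Starting value that reaches `target` after `years` years of growth at `rate`."""
    return target / (1 + rate) ** years


if __name__ == "__main__":
    TARGET_2021_BN = 10.0   # USD billions, from the forecast quoted above
    CAGR = 0.48             # 48% compound annual growth rate
    YEARS = 5               # assumption: 2016 -> 2021 is five periods

    base_2016 = implied_base(TARGET_2021_BN, CAGR, YEARS)
    print(f"Implied 2016 market size: ${base_2016:.2f}bn")
    for year in range(YEARS + 1):
        print(2016 + year, f"${project(base_2016, CAGR, year):.2f}bn")
```

On those assumptions the implied 2016 market is roughly $1.4 billion, which means the forecast amounts to about a sevenfold increase in five years.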

Hyper-converged infrastructure (HCI) collapses compute, management, and storage onto industry-standard x86 servers, enabling a building-block approach to the Software-Defined Data Centre (SDDC). With HCI, all key data centre functions run as software on the hypervisor in a tightly integrated software layer.

HCI makes it easy to transition from physical storage solutions into virtualised storage and realise big improvements fast.

As Duncan Epping, Chief Technologist, VMware – Storage & Availability, explains: “Virtualisation of compute has brought customers agility and flexibility in terms of deploying new workloads. However, when a change is required from a networking or storage perspective, a multiple-week change request is not uncommon. This is where network and storage virtualization comes into play. The idea is to provide similar agility and flexibility to the network and storage layer as was introduced for compute over a decade ago.”

As businesses address the need to create a more agile, dynamic data centre environment, they need to consider a number of important changes, including a spike in all-Flash adoption, a continuing shift towards DevOps, the maturing of the ‘Software-Defined Everything’ era, cross-Cloud expansion and the development of increasingly advanced automation solutions.

Leading hyper-converged infrastructure solutions are built on a tightly integrated software stack, which gives IT organizations common workflows and increased automation that help reduce operational tasks and improve responsiveness to these demands.

Duncan explains: “At VMware we took a different approach to HCI than most other vendors - for us storage became an integral part of the compute layer. Embedded in the hypervisor, a natural extension of what virtualisation administrators were already familiar with: vSphere. This is also where in my opinion the solution stands or falls. Simply adding a storage appliance on top of a hypervisor does not necessarily reduce complexity or provide flexibility.”

Duncan continues: “The implementation of the specific HCI solution can be a pitfall. How is the solution managed? Do you have a separate management interface for the storage component and the hypervisor? Are you still creating NFS mounts or iSCSI targets? HCI is about collapsing layers, converging different components into one. This also imposes some challenges, however, from an organizational perspective. Who owns which part of the HCI stack? An important question, as the traditional IT team org chart (compute, storage, networking) most likely will not make sense in a hyper-converged world.”

Duncan adds: “Hyper-Converged Infrastructure is the building block for the Software Defined Data Centre. It provides flexibility, agility and speed at a lower cost both from a capital expenditure and operational expenditure point of view. Especially in a world where “time to market” is crucial for new products and solutions to stay ahead of the competition, having a flexible architecture which allows you to scale up and out at any time is important.”

Ready or not, changes are coming to your data centre. If you want to stay ahead, it’s important to start preparing right now. The right HCI solution will not only help you modernize your data centre and become more agile - it will also help you respond to new DevOps needs and lay a path to the cloud.

For example, when Discovery, a shared value insurance company and authorised financial services provider, based in South Africa, discovered that its infrastructure dependencies were affecting the stability of its VMware environment, it turned to VMware’s HCI solution powered by VMware vSAN to alleviate these dependencies and better architect its virtualised environment.

“After encountering a series of infrastructure outages that we identified were due to hardware instability, we embarked on a process to identify and find better technologies to help improve our physical and virtual infrastructure stack,” states Johan Marais, Virtualisation Manager at Discovery.

“What we uncovered was that, when there was instability across the traditional server, storage or SAN environment our VMware ecosystem would simply not be available. When this happened we firstly had no control, and secondly it was difficult to determine the root cause of problems due to the complexity of the integrated environments managed within the respective silos,” he adds.

Today 50% of the head office environment has been migrated, and Discovery’s US and UK operations are now 100% operational on vSAN. The South African environment will be migrated over a three-year period due to the size of the SAN footprint and the financial impact of changing equipment before the end of its lease period.

We’ve discovered how HCI solutions can make your IT organization more agile and responsive, helping you succeed in the digital era - but not all HCI solutions are created equal.

Look for these four characteristics:

A proven hypervisor. The hypervisor is the “hyper” in hyper-converged, and it runs all key data centre functions - compute, storage, storage networking, and management - as software. The result is more efficient operations, streamlined and speedy provisioning, and cost-effective growth.

Software-defined storage. In a good hyper-converged solution, storage and storage networking are collapsed into the server and virtualised. This streamlines operations, costs, and overall physical footprint.

A unified management platform. A unified platform that allows you to manage the entire software stack from one interface and seamlessly integrates all your workflows is a key element of a good HCI solution.

Flexible deployment choices. The ability to use low-cost industry-standard hardware is a huge advantage of HCI. With it, you no longer have to deploy expensive servers, storage networking, and external storage solutions. With an HCI platform that gives you a broad choice of hardware options, you can build an environment that matches your needs and preferences.

One of the advantages of deploying a vSAN solution is that it reduces the time required to implement an HCI solution. As Duncan explains: “vSAN enables customers to design and deploy their infrastructure in a building block fashion. This means that storage and compute capacity can simply be added when required by adding new servers to the existing cluster.”

Duncan continues: “These servers can be, what we refer to as, vSAN Ready Node servers, or even any server on the vSphere compatibility guide with vSAN certified components. This allows customers to avoid unnecessary operational changes and challenges when it comes to deployment of hardware and firmware management, as existing management and automation tools can be leveraged.”

A further advantage of the vSAN solution is the speed with which applications can be deployed on the hyper-converged infrastructure. “With vSAN, customers have the ability to select components from a broad (vSAN) compatibility guide,” says Duncan. “VMware is the first in the market to support new technologies like NVMe and Intel Optane - allowing for architectures that provide high uptime and a great user experience (low latency, high IOPS). This results in fast deployment times of new applications and great, but more important consistent, performance for existing applications running on vSAN.”

And, of course, for end users already familiar with VMware’s vSphere technology, it’s relatively easy to get to grips with the vSAN product.

Finally, VMware HCI powered by vSAN offers the potential for significant capital investment savings. By leveraging standard x86 hardware instead of traditional dedicated proprietary storage hardware, acquisition cost savings of up to 60% are not uncommon. vSAN shares the same platform as vSphere, ultimately driving efficiency of resources.

For Discovery, implementation of VMware’s vSAN solution has provided plenty of benefits.

“The vSAN platform has given us greater control of our infrastructure stack as well as improved management of the VMware environment. In addition, it has also provided greater performance and simplification of the SAN infrastructure, afforded us freedom when scaling the infrastructure as well as flexibility in our choice of hardware,” states Marais.

Marais continues: “vSAN has given us fantastic visibility into our storage IO patterns for every application and this management function now sits in the virtualisation team. What also makes a huge difference is that we are experiencing space savings because vSAN is thin provisioned; this assists us greatly in keeping the storage footprint smaller as well as reducing the unit cost of supplying a virtual server.”

Right now, HCI is a priority for nearly every IT organization because of the imperative to deliver “digital first” strategies that drive competitive advantage. Companies that want to win the fight for customers know they need tools that make them more agile, cost effective, and efficient. HCI delivers on each of these attributes and provides a valuable new tool for the IT arsenal.

DCS talks to FibreFab Marketing Manager, Gary Mitchell – covering the company’s extensive product portfolio, its successful development to date, and plans for the future.

1. Please can you provide a brief introduction to FibreFab – how long the company’s been around, its technology focus and the like?

FibreFab was founded in Milton Keynes, UK way back in 1992. We remained the industry’s best-kept secret, OEM manufacturing for some of the biggest brands in the marketplace and providing many distributors with plain label or own brand fibre and copper connectivity products. Then in 2013, AFL, a division of Fujikura, acquired FibreFab and quickly realised that our engineering capabilities, solution set, supply chain, customer service and commercial relations were destined for far greater things.

Now, our vision is to be an innovative connectivity solutions company selected by partners worldwide. We are embarking on a global mission to bring performance, innovation, fast turnaround and real value (economic and operational) to our customers across the globe through dependable distribution and installation partners.

2. And who are the key personnel involved?

Collaboration is one of our core values and we are firm believers that exceptional ideas stem from shared social capital between us, our partners and our customers.

FibreFab is a big family, and our distribution and installation partners are an extension of us. It is clichéd to say, but truly our people and our partners are fundamental to the success of our business.

3. And what have been the major milestones to date?

2017 – Release of FibreFab MPO connector

2013 – AFL acquires FibreFab

2009 – Sets up FibreFab sales office in China to service APAC regions

2007 – Sets up FibreFab sales office in Dubai to service the Middle East

2006 – Acquires UK fibre termination facility to enable fast-turnaround multi-fibre assemblies into UK and Europe

1992 – FibreFab established

4. Please can you give us a brief product portfolio overview?

We offer end to end fibre and copper network solutions. Our product portfolio consists of assemblies with single or multiple connectors, bulk cable, protection and management, as well as tools, test and termination equipment across fibre and copper.

5. In more detail, can you talk us through the fibre product line?

Working alongside our family of companies, we are one of the only companies that can really offer an end to end fibre network solution. Starting with Fujikura (our grandparent company), we have access to their world-leading fusion splicers. One of their latest innovations is the Fujikura 70R, a fusion splicer that can splice 12 fibres at a time. You also have the Fujikura Wrapping Tube Cable with SpiderWeb Ribbon, which is a real game changer. Some data centres are redesigning their fibre backbone and OSP cable specs on the back of this cable. It is available from 144 fibres all the way to 3456 fibres and, generally speaking, has a 30-40% smaller cable diameter and is about 30% lighter than similar fibre counts in other cable constructions. The fibres are intermittently bonded in 12 fibre ribbons, so you can multi-fusion splice, or separate one of the fibres and splice it individually. One of our customers saw this cable and asked us to design a splice cabinet that would fit on a 600mm x 600mm floor tile and hold 10,368 fibres. So we did it. It’s easy to use, easy to install and easy to manage, and the customer reported back that they had about an 80% reduction in time compared with single fibre splicing.

AFL, our parent company, is well known for its test and measurement equipment, fibre cable, and network cleaning products. It is great for us to leverage their solutions and innovations and include them as part of what we offer.

Within FibreFab, we design and manufacture every manner of fibre assembly, including MPO trunks, QSFP assemblies, multi-fibre pre-terms, ultra-high density modules, patch leads and pigtails.

Fibre management products include 144 fibre Ultra High Density (UHD) panels, chassis for UHD modules (capable of 288 fibres in 2U), BASE8 transition modules, ODFs, racks, cabinets, sliding panels, pivot panels, OSP enclosures, and a whole array of cable management products.

Then we have bulk cable: CPR rated loose tube, tight buffered and steel tape armour cable, as well as access to Fujikura SpiderWeb® Ribbon Cable.

It is fair to say we have a very broad fibre solution offering, ensuring we can put together a custom network solution that meets our customers’ requirements! Also splice protectors, can’t forget about the splice protectors.
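To put the splice cabinet figures mentioned earlier in this answer into perspective, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the function name is hypothetical, and it counts splice operations only, so it is not a model of the roughly 80% time saving the customer reported, which will also depend on preparation and handling time.

```python
import math

FIBRES_PER_RIBBON = 12      # fibres are bonded in 12 fibre ribbons
CABINET_FIBRES = 10_368     # capacity of the splice cabinet described above
MAX_CABLE_FIBRES = 3_456    # largest cable fibre count quoted above


def splice_operations(fibres: int, fibres_per_splice: int) -> int:
    """Number of splice operations needed to join `fibres` fibres."""
    return math.ceil(fibres / fibres_per_splice)


single = splice_operations(CABINET_FIBRES, 1)
ribbon = splice_operations(CABINET_FIBRES, FIBRES_PER_RIBBON)
cables = CABINET_FIBRES / MAX_CABLE_FIBRES

print(f"Single-fibre splices: {single}")
print(f"12-fibre ribbon splices: {ribbon}")
print(f"Equivalent 3456-fibre cables: {cables:g}")
```

In other words, a full cabinet corresponds to three of the largest cables, and ribbon splicing cuts the number of individual splice operations by a factor of twelve.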

6. And you also manufacture a comparable copper product line?

Our copper range covers high performance CAT5e, CAT6 and CAT6a, and we have everything you would expect including bulk cable, keystone jacks, patch cords, pre-terminated assemblies, and every manner of copper panel – but then we also offer CleanPatch, which places 12 copper patch cords into one module that you can connect easily into the front of any patch panel or blade server. It’s a really easy, useful system and makes large copper installations even quicker and easier.

7. Do you have any thoughts on how fibre and copper solutions will develop in the coming years, bearing in mind the demand for ever-increasing ‘feeds and speeds’?

In terms of technology and bandwidth, we believe copper is more or less at its final destination. That said, there is an ongoing extension of applications for twisted pair copper in the enterprise space, with PoE, POAP, VoIP and connected security devices.

In the data centre space, all main working connections are converting to higher bandwidth with all equipment now shipping with pluggable interfaces. Short range connections inside the rack continue to be made with Direct Attach Copper (DAC) - a high performance variant that does not use keystone jacks, instead opting for transceiver plugs. Active Optical Cables (AOCs) are being utilised widely for mid-range and pluggable transceivers for long range. As bandwidth demand increases over the next 5 years, DAC will become far more restricted in its applications, with AOC picking up in its space.

In mega DCs and cloud data centres, most connectivity is already on single mode fibre, whilst enterprise, edge and some mid-scale DC networks use multimode. We believe multimode will have one more generation before it slowly becomes redundant in future networks. OM5 has a very limited shelf life and is almost already irrelevant. Our advice: use single mode. Install single mode and your network infrastructure will have the capability to reach 400Gb/s and beyond; in fact, single mode cabling will be able to support 1.2Tb/s at short range.

8. FibreFab offers a variety of optical networking solutions, starting with the Optronics line?

OPTRONICS® is the name of the network system that we manufacture. Application wise we have network systems available for data centres, telecom networks and enterprise.

Through OPTRONICS®, we design and manufacture pioneering network connectivity solutions that maximize space, expand capacity, deploy quickly, migrate easily and offer fantastic economy. OPTRONICS® is a comprehensive platform of fibre and copper connectivity solutions aimed at enabling network evolution better than anybody else.

9. And the company also offers UHD solutions?

UHD stands for Ultra High Density, and it is really our jewel in the crown. At the heart of the OPTRONICS® UHD solution are our 1U, 2U and ZeroU chassis, each designed to accept the UHD Modules or UHD Adaptor Plates. The modules are easy to install and allow your network to grow on a pay as you grow basis. UHD Modules can be connected by MPO, direct termination (LC or SC) or by splicing (LC or SC). A 2U chassis fully populated with UHD modules provides access to 288 LC ports.

The OPTRONICS® UHD Solution can also interconnect with our host of customizable, high performance MPO, multi-fibre pre-terms and range of high performance patch cords.
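As a rough planning aid, the density figure quoted above (288 LC ports in a fully populated 2U chassis) can be turned into a quick estimate of chassis count and rack space. The sketch below is illustrative only: the function name and the 1,000-port target are hypothetical, and it assumes every chassis is a fully populated 2U unit.

```python
import math

PORTS_PER_2U_CHASSIS = 288   # fully populated 2U UHD chassis, as quoted above
RACK_UNITS_PER_CHASSIS = 2


def chassis_needed(lc_ports: int) -> tuple[int, int]:
    """Return (chassis count, rack units) needed for `lc_ports` LC ports."""
    chassis = math.ceil(lc_ports / PORTS_PER_2U_CHASSIS)
    return chassis, chassis * RACK_UNITS_PER_CHASSIS


ports_per_u = PORTS_PER_2U_CHASSIS // RACK_UNITS_PER_CHASSIS
chassis, rack_units = chassis_needed(1_000)   # hypothetical port requirement

print(f"LC ports per rack unit: {ports_per_u}")
print(f"1,000 ports need {chassis} chassis occupying {rack_units}U")
```

The per-unit figure of 144 ports matches the 144 fibre UHD panels mentioned in the product overview.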

10. And hyperscale solutions?

FibreFab is a member of the AFL IG Group. The Hyperscale (AKA Cloud) computing providers are a major focus of the Group. We provide engineered and customised optical fibre cabling and bespoke fibre management solutions in high volume to this segment.

11. And what can you tell us about the pre-terminated solutions?